What Redis deployment do you need ?

Redis is an in-memory database that I really love. It’s one of the rare technologies that make both devs and ops happy. For those who don't know Redis already, here is a small introduction.

There are four main topologies of Redis, and each one has and uses different and incompatible features. Therefore, you need to understand all the trade-offs before choosing one.

Here we go:

Redis Standalone

The old classic. One big bag of RAM. Scale vertically, easy as pie, no availability, no resilience.

Pros:

- This is the most basic setup you can think of.

Cons:

- No resilience

- Scale only vertically (using bigger hardware for bigger workloads)

Remediations:

- As the Redis protocol is simple, you can use an external solution, a proxy like Twemproxy, to do the replication to other nodes (for resilience) and shard the keys yourself (for horizontal scalability)

- Use the built-in replicated Redis or Redis-Sentinel or Redis-Cluster

Redis replicated

There is a master and there are replicas. The master pushes data to replicas. Replicas don’t talk between themselves.

They are Read-Only and will tell you so if you try to do a SET on them (or any Write operation for what it’s worth).

Pros:

- Really easy to setup

- You always have a hot snapshot of your data

- It's an easy Disaster Recovery Plan (DRP) with Master/Stand-By

Cons:

- Write performance is bounded by the master

- In order to achieve resilience, you need manual operations (changing the master Redis manually and restarting the clients)

- The replicas are not used to their full potential

- The Redis client needs to know which Redis to ask for which operation (or you can just ask the master every time)

Remediations:

- Smart clients (eg: Lettuce) able to ask by themselves the right Redis for the right operation

- You can setup a sharding with an external solution like Twemproxy for horizontal scalability

This setup is so easy, you have no excuse to deploy in production a Redis instance without replication.

It's a cheap way to keep your service running when things have gone awry, with just a bit of manual operations. If you are a little to medium-sized organization and it's your first Redis deployment, this may be the best trade-off for you.

Redis Sentinel

Ok, a bit of history: Antirez, the Redis author, started to work on a concept for Redis clustering some years ago. It turns out, distributed systems are complex and the right tradeoff is hard to reach.

There are two problems with standalone Redis: Resilience, and Scaling. Ok, how about we only solve one?

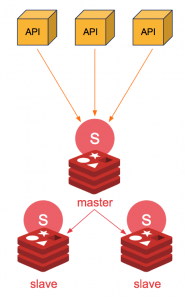

Redis-sentinel is the solution to resilience, and it’s quite brilliant. It’s a monitoring system on a Redis replicated system (so, a master and N replicas) which aims to answer two questions:

- Who is the manager in charge in this cluster? (the current master)

- Oh damn! We lost contact with the current master, who will take its place?

(actually, it also takes care of reconfiguring the Redis instances on the fly so that the newly promoted master actually knows it can accept Write operations)

But, hey, and if we loose the Sentinel? We want resilience after all, so the Sentinels should also be resilient!

That’s why the Sentinels should always be used as a cluster. They have a built-in election system to track who is the master Sentinel (which can elect the master Redis when the current master is down). As it’s a quorum system, you need 3 nodes to support losing 1 (you need the majority of the cluster to be up for a successful election).

Recap: you have 3 nodes with Redis-Sentinel in a cluster, and 2 or more Redis in replicated mode.

You are smart, so you most likely have 3 machines, each hosting both a Redis instance and its Sentinel. This is my preferred topology. You need to deploy your sentinels first, and then you deploy your Redis instances, they register, and it works.

Pros:

- Redis-Sentinel is builtin in the Redis binary so it’s easy to setup

- Good trade-off in complexity

- Very stable. One of the few no-hassle distributed systems

- Automatic resilience

- It doesn't eat your RAM or CPU

Cons:

- Still a big step up compared to Redis or even Redis replicated alone

- The client MUST support Redis-Sentinel. Half the magic is in the client

- Not trivial to upgrade a “Twemproxy + Replica” cluster to a Redis-Sentinel cluster, as you need to orchestrate the upgrade with your consumers

- Doesn’t solve scale issues

- You may need to configure your firewall to open the flow between the Sentinels

Remediations:

- You can soften the operational complexity and resource usage by monitoring multiple redis clusters with 1 sentinel cluster. I am currently doing just that: dozen of api-dedicated mini-Redis clusters (100Mo LRU cache) monitored by 1 Sentinel cluster

- You can soften the client migration path to the Redis-Sentinel protocol with a little trick: you can have old clients that only query the master Redis and manually update the target Redis when Sentinels elect a new Redis master. It’s painful, but feasible.

This setup is less easy to put in place. Don’t do it if you are not ready to pay the price. Sadly, Twemproxy is not easier so if you need automated Resilience, this is still your best bet.

Redis Cluster

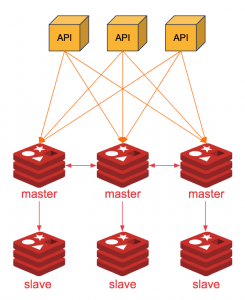

This is the Big Gun. The One we waited for so long. It aims to help large deployments, when you need both resilience AND scaling (up to 1000 nodes).

It’s a multi-master architecture: the data is partitioned (sharded) into 16k buckets, each bucket with an assigned master in the cluster, and typically replicated twice. It’s the same design as Kafka or CouchBase. When you SET mykey myvalue, to a Redis-Cluster node:

- the hash of mykey is computed, this gives us the bucket number

- if the current Redis node is the master of this bucket, it accepts the operation with OK

- if it’s not the master it answers MOVED with a destination node, then you must connect to this node, repeat your operation, and wait for the OK to complete your SET

There is a catch, though. A node is either a master node (it owns a subset of the 16k buckets, monitors other nodes of the cluster, and votes for a new master if one fails), or a replica node (exactly like a Redis replicated, but specific for exactly one master).

Thus, for a reliable cluster, you will need at least 6 nodes. 3 or more master nodes and 3 or more replicas nodes. You need to have the same number of replicas per master if you want to have homogeneous resiliency.

In practice, if you have a Write-heavy workload, keep 2 replicas per master and increase the master count, and if you have a Read-heavy workload increase the replicas per master and use a smart client that can load-balance between replicas (like lettuce).

Pros:

- Incredible documentation, see here. The Redis author, Antirez, is great at explaining his work

- It scales, it heals, it works

- It’s bundled in the redis binary

Cons:

- You need at least 6 nodes

- It works in a full-mesh fashion. Expect lots and lots of east-west traffic (intra-datacenter)

- Few clients support Redis Cluster. Check explicit support for your programming language

- It is definitely not compatible with the other Redis configurations. Neither standalone, nor replicated, nor sentinel Redis

- You won’t be able to upgrade from another typology, nor downgrade to another. It’s a one-way ticket

Remediations:

- None

Wrapping it up

- When it's so easy to have replicas, it's really a must have for any production deployment. Don't deploy Redis standalone.

- Redis Sentinel is simple and a very good trade-off when you need resilience

- Clients can't change easily between typologies so take your time before deploying one

So there you have it. You may have variations on these deployments but the meat will stay the same. I hope your future Redis deployment will at least be replicated!

Thanks to Sebastian Caceres, Florent Jaby, and Simon Denel for the review.