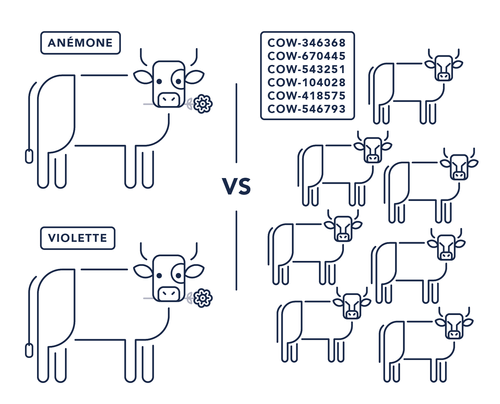

"pet vs. cattle", from server craftsman to software craftsman

The evolution of Ops follows a path that we regularly observe in our engagements. Through this fable, we will walk through the four stages that mark this path, which is paved with pitfalls. Let's see, concretely, how an Ops carries out the operation "fix_mysql", which consists of changing the MySQL configuration on production servers.

The first age: the server craftsman

Many system administrators have an almost affectionate relationship with their machines. By "machines" we mean, interchangeably, physical servers, virtual machines (VMs), storage and network equipment.

It is comforting to know one's machines one by one, to make the rounds each morning (ssh, checking the munin dashboard machine by machine) as a doctor makes the rounds of hospital rooms. When a machine falls ill, we are at its bedside, we practice heroic care, we meet in committee to examine the treatment to be prescribed. In short, we fuss over it.

Organisations centered on this behavior can be spotted by their propensity to personify machines: they set up meetings whose objective is to clean up the server "daniela", to upgrade the DBMS version on acme-dc1-lnx-db001-prd, or to plan a weekend for the kernel upgrade of the machines at site B. A whole poem.

Samples of commands for fix_mysql, first age version:

    # Connecting to the first server
    $ ssh amz@acme-dc1-lnx-db001-prd
    # Checking ongoing processes
    amz@acme-dc1-lnx-db001-prd ~> ps
    # Checking disk space
    amz@acme-dc1-lnx-db001-prd ~> df -h
    # Editing mysql configuration file
    amz@acme-dc1-lnx-db001-prd ~> sudo vim /etc/mysql/my.cnf
    # Restarting the mysql service
    amz@acme-dc1-lnx-db001-prd ~> sudo systemctl restart mysqld
    # Checking logs
    amz@acme-dc1-lnx-db001-prd ~> sudo tail -F /var/log/mysql.log
    # Disconnecting from the first server
    amz@acme-dc1-lnx-db001-prd ~> logout

    # Connecting to the second server...
    $ ssh amz@acme-dc1-lnx-db002-prd
    amz@acme-dc1-lnx-db002-prd ~> sudo vim /etc/mysql/my.cnf
    amz@acme-dc1-lnx-db002-prd ~> sudo systemctl restart mysqld
    amz@acme-dc1-lnx-db002-prd ~> sudo tail -F /var/log/mysql.log
    amz@acme-dc1-lnx-db002-prd ~> logout

    # Connecting to the third server (still 17 to go)...
    $ ssh amz@acme-dc1-lnx-db003-prd
    amz@acme-dc1-lnx-db003-prd ~> sudo vim /etc/mysql/my.cnf
    amz@acme-dc1-lnx-db003-prd ~> sudo systemctl restart mysqld
    amz@acme-dc1-lnx-db003-prd ~> sudo tail -F /var/log/mysql.log
    amz@acme-dc1-lnx-db003-prd ~> logout
    # a long time later...

Behind these cabalistic signs hides very manual, laborious and repetitive work on each server. Errors can creep in at many steps and lead to dysfunctional and/or heterogeneous environments.

#### First age: comfort level

| Why it works | Why it does not work |
| --- | --- |
| My servers don't need to be frequently upgraded | New versions of applications must be deployed more than once a month |
| A few applications to manage | I manage more than X machines |
| A few deployments | I manage more than Y applications |
| Small number of machines | I go on holiday or I fall sick |

The second age: virtualization rocks the boat

With the advent of virtualization, the situation changes. We tend to use many VMs. Heaps of them. So many that knowing them all by name becomes almost impossible. So how do you keep treating so many machines with the same love and dedication?

In addition, VMs are managed like physical servers (created at the beginning of the project, deleted at the end), and cleanup phases are infrequent. And we must admit it: removing VMs is clearly not a priority. We will do it when we have time, or when the hypervisor is full.

The "Pet" approach is in jeopardy. Systematic tasks repeated hundreds of times seem meaningless, in addition to being frankly tedious. You cannot keep up such a pace for long without risking complete burnout.

You then launch various initiatives: you create snapshots and clones to duplicate machines, and you swear only by your golden image. For the settings specific to each machine, it is still hell.

Samples of commands for fix_mysql, second age version:

    # Nice shell loop
    $ machines="001:16M 002:32M 003:16M 004:32M 005:32M ..."
    # Action on all servers in one pass, I have tested on a server, it seems to work...
    $ for m in $machines; do
        IFS=: read -r machine_number mem <<< "$m"
        ssh -tt amz@acme-dc1-lnx-db${machine_number}-prd -- "sudo sed -i -e 's/.*key_buffer_size.*/key_buffer_size=$mem/' /etc/mysql/my.cnf && sudo systemctl restart mysqld"
      done

In this example, you start writing automation code that runs on all the machines. Little control, high risk of mistakes: you like to live dangerously ... But after all, you know your scripts and one-liners by heart, and never mind if nobody else understands them.
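One way to regain a little control over such a loop is to build each remote command first and print it before anything is executed. The sketch below is only an illustration under assumptions: it reuses the host naming scheme and the `sed` expression from the loop above, while `DRY_RUN`, `build_remote_cmd` and `fix_mysql` are hypothetical names that do not come from the original script.

```shell
#!/usr/bin/env bash
# Sketch only: DRY_RUN, build_remote_cmd and fix_mysql are hypothetical names.

# Build the remote command for a given buffer size, without running anything.
build_remote_cmd() {
  printf "sudo sed -i -e 's/.*key_buffer_size.*/key_buffer_size=%s/' /etc/mysql/my.cnf && sudo systemctl restart mysqld" "$1"
}

# Print (DRY_RUN=1, the default) or execute the command for each "number:size" entry.
fix_mysql() {
  local entry host mem
  for entry in "$@"; do
    host="acme-dc1-lnx-db${entry%%:*}-prd"   # text before the colon
    mem="${entry#*:}"                         # text after the colon
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "[dry-run] $host: $(build_remote_cmd "$mem")"
    else
      ssh -tt "amz@$host" -- "$(build_remote_cmd "$mem")"
    fi
  done
}

fix_mysql 001:16M 002:32M   # prints two [dry-run] lines, touches nothing
```

Running the same call with `DRY_RUN=0` would execute the commands for real, so the printed lines can be reviewed (or shown to a colleague) first.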

#### Second age: comfort level

| Why it works | Why it does not work |
| --- | --- |
| My images ensure that what worked yesterday will continue to work tomorrow | Sometimes I forget to backport patch X from prod to an image |
| | There are so many images that we always end up taking the latest one, hoping that it is the most up to date |
| | Deploying without downtime is hard: my images take a long time to boot, I have to keep a close eye on them, and I have to update the load-balancer configuration manually |
| | My shell scripts are sometimes a bit unstable and complicated to maintain |

The third age: infrastructure as code to the rescue

Automation is now a must. Since your colleagues cannot make sense of your home-made scripts, you start working with a tool, a real one.

There are now so many automation tools that it may even be difficult to choose, but that is not the question. Thanks to them, installations, configurations and deployments become much easier, on a very large scale. You feel yourself slipping inexorably into the "Cattle" approach. This industrial, brutish management of heaps of identical machines with no personality feels more like running a farm of 1,000 cows than tending a small flock of 20 ewes in the foothills of some small village in the Australian bush.

Some teams go as far as regularly destroying and rebuilding VMs, just to verify that it is possible, and that doing so even validates a disaster recovery plan (DRP) strategy. Perfectly scandalous. A machine is made to last.

And what about a practice as wild and inhuman as blue-green deployment? This involves upgrading versions by creating VMs with the new version of the application, then switching the load-balancer over before destroying the old VMs. Are you kidding?
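The order of operations is what matters in a blue-green deployment, and it can be sketched in a few lines. Everything below is hypothetical: `create_vms`, `health_check`, `switch_lb` and `destroy_vms` are placeholders that only print what a real provisioning or load-balancer command would do, and the health check step is an assumption added by this sketch, not something the description above mentions.

```shell
#!/usr/bin/env bash
# Sketch of the blue-green sequence. The four helpers are hypothetical
# placeholders for real provisioning and load-balancer commands.
create_vms()   { echo "create $1 VMs with the new version"; }
health_check() { echo "check $1 VMs"; }
switch_lb()    { echo "point load-balancer to $1"; }
destroy_vms()  { echo "destroy $1 VMs"; }

blue_green() {
  local old="$1" new="$2"
  create_vms "$new" || return 1
  # If the new VMs are unhealthy, stop here: the old ones still serve traffic.
  health_check "$new" || return 1
  switch_lb "$new" || return 1
  # The old VMs are destroyed only once traffic has moved away from them.
  destroy_vms "$old"
}

blue_green blue green
```

Because the old VMs are untouched until the very last step, a failure at any earlier point leaves production as it was.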

We have to face the facts: this approach is devilishly effective. Manual and tedious tasks are reduced, and some of the slip-ups caused by heterogeneous configurations disappear. Time is saved, and it becomes possible to manage hundreds or even thousands of machines with ease. The automaton becomes your right arm: by construction, it is the one that tirelessly performs all the repetitive operations you have coded. One small snag, however: when you ask it to do something stupid, it obediently does so, on 1,000 machines at a time ...

- Am I doing a worse job?

- Are my scripts, cookbooks, playbooks, modules and roles slowly replacing me?

- How can I keep practicing my craft and my love of a job well done in a context where some VMs live only a few hours, or even minutes?

- Have I become insensitive to all the mistreatment of these defenseless VMs?

These questions begin to pile up in your head. You also become the owner of a small body of code that describes your infrastructure and that is quietly starting to grow ...

fix_mysql, third age version:

    # Editing Ansible template file for MySQL configuration
    $ vim roles/mysql/templates/my.cnf
    # Editing Ansible MySQL role
    $ vim roles/mysql/tasks/main.yml
    # Launch in dry-run mode to list modifications to apply on all database servers in production
    $ ansible-playbook -i inv/prod -l db-servers configure.yml --check --diff
    # Real launch on the platform on all database servers in production
    $ ansible-playbook -i inv/prod -l db-servers configure.yml --diff -vvv
    # Ouch, after a manual check, we need to pass on all servers to fix our mistake...
    $ vim roles/mysql/templates/my.cnf
    # Launch on the platform on all database servers in production
    $ ansible-playbook -i inv/prod -l db-servers configure.yml --diff -vvv
    # Phew, it's fixed, no one has seen it...

In this example, a tool (Ansible, one choice among many) deploys a configuration change to all database servers. Since there is no guardrail, a typo is obediently rolled out to every machine concerned. You then have to go back over all of them quickly to repair the damage ...
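A simple guardrail that this example lacks is to apply the change to a single machine first and to stop if that run fails. The sketch below assumes the inventory, group and playbook names from the example; the `ANSIBLE` and `CANARY` variables, the `rollout` function and the choice of canary host are hypothetical. It uses only the `-i`, `-l`, `--check` and `--diff` options already shown above, plus the `group:!host` limit pattern to exclude the canary from the last step.

```shell
#!/usr/bin/env bash
# Sketch of a canary rollout: dry-run everywhere, apply to one host, then to the rest.
# ANSIBLE and CANARY are hypothetical variables; the default canary host is an assumption.
ANSIBLE=${ANSIBLE:-ansible-playbook}
CANARY=${CANARY:-acme-dc1-lnx-db001-prd}

rollout() {
  # 1. Dry run on all database servers: list the changes without applying them.
  $ANSIBLE -i inv/prod -l db-servers configure.yml --check --diff || return 1
  # 2. Real run on the canary only; a failure here stops the rollout.
  $ANSIBLE -i inv/prod -l "$CANARY" configure.yml --diff || return 1
  # 3. Real run on the remaining database servers.
  $ANSIBLE -i inv/prod -l "db-servers:!$CANARY" configure.yml --diff
}
```

Calling `rollout` runs the three steps in order. A typo in the template then breaks one server instead of all of them, which leaves time for the manual check before the remaining machines are touched.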

#### Third age: comfort level

| Why it works | Why it does not work |
| --- | --- |
| As with the golden images: what worked yesterday will continue to work tomorrow. | I do not write tests: I have code but I do not know whether it works at any given moment. |
| A rolling update is equivalent to setting values in my code. | I do not trust the idempotence of my code and therefore I cannot apply it in production to fix it. |
| The code represents "the truth" of what is supposed to happen in production; everyone can refer to it to make a decision. | I manually modify the machines behind my automaton's back, and this breaks everything when it runs again. |
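Trust in idempotence can be built mechanically: run the playbook twice and verify that the second run changes nothing. The sketch below assumes the `changed=N` counters that Ansible prints for each host in its play recap; the function name `recap_is_idempotent` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if a play recap (read on stdin) reports no change on any host.
# The function name is hypothetical; it relies on the "changed=N" recap counters.
recap_is_idempotent() {
  local recap
  recap=$(cat)
  # A recap must be present, otherwise the run did not complete normally.
  printf '%s\n' "$recap" | grep -q 'changed=' || return 1
  # Any non-zero counter means the second run still modified something.
  ! printf '%s\n' "$recap" | grep -Eq 'changed=[1-9]'
}
```

A possible use on a dev platform is to apply the playbook once, then pipe the output of a second run into `recap_is_idempotent` and fail the build if it returns a non-zero status.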

The fourth age: the software craftsman

As for managing the code you feed your automaton, a transformation is under way, and it could be the path to salvation. By looking at what application developers do, you realize that a new, sometimes little-known world is opening up to you.

- Practices to improve code: code review, pair programming

- Code versioned in a tool like Git, which lets you not only store and version your code, but also share it and ensure its traceability. Besides, where your code used to be visible only to a few hand-picked sysadmins, you now deign to grant read-only access to everyone. Passwords are not stored there, so there is no risk. It might even give ideas to others or, better still, explain what you are doing on the platforms. A little more visibility cannot hurt.

- Tools to write readable code: a linter to check syntax and compliance with coding standards. It is always better if everyone writes code the same way.

Developers have implemented a whole ecosystem of tools and practices to do their job.

By talking to them, you realize that they have done this because they aspire to something that resonates deliciously within you. They like to do quality work, make their code beautiful, expressive, maintainable, in short, treat it with the greatest care... They even have a term for it: software craftsmanship.

Fourth age version of fix_mysql:

    # Switching on master branch to checkout the latest code version
    $ git checkout master
    # Updating with the main repository
    $ git pull --rebase
    # Creating branch for the fix
    $ git checkout -b amz
    # Recreating a dev platform from scratch
    $ ansible-playbook -i inv/amz vm-reinit.yml
    # Launch on a dev platform
    $ ansible-playbook -i inv/amz -l db-servers configure.yml --diff -vvv
    # Editing the MySQL serverspec test to check the new expected behavior
    $ vim tests/spec/nodetypes/db/mysql_spec.rb
    # Checking that the test does not pass yet
    $ ENV=amz rake -f tests/Rakefile spec:mysql
    # Editing the ansible template file for MySQL configuration
    $ vim roles/mysql/templates/my.cnf.j2
    # Editing the ansible MySQL role
    $ vim roles/mysql/tasks/main.yml
    # Checking the ansible MySQL role syntax
    $ ansible-lint roles/mysql
    [ANSIBLE0012] Commands should not change things if nothing needs doing
    /home/amz/projets/trucs/infra-as-code/roles/mysql/tasks/main.yml:17
    Task/Handler: Ch{mod,own} file
    # Ouch, I've made a mistake, ansible-lint has caught it before I launch the command
    $ vim roles/mysql/tasks/main.yml
    # Checking the ansible MySQL role
    $ ansible-lint roles/mysql
    # This time it works, launching on a dev platform in dry-run mode
    $ ansible-playbook -i inv/amz -l db-servers configure.yml --check --diff
    # Launching on a dev platform
    $ ansible-playbook -i inv/amz -l db-servers configure.yml --diff -vvv
    # Launching tests that should all pass
    $ ENV=amz rake -f tests/Rakefile spec:mysql
    # Checking modified files
    $ git status
    # Adding modified files to the next Git commit
    $ git add tests/spec/nodetypes/db/mysql_spec.rb roles/mysql/templates/my.cnf.j2 roles/mysql/tasks/main.yml
    # Git commit with item reference enclosed
    $ git commit -m "#678 add key_buffer_size management"
    # Git push in the branch to ask for a merge-request + pair review
    # The CI/CD platform will deal with it automatically
    $ git push origin amz
    # Purging the temporary dev platform
    $ ansible-playbook -i inv/amz vm-destroy.yml

In this example, the Ansible code is backed by a best-practices analyzer (ansible-lint) and by tests (in serverspec) that are written, if possible, before the implementation. The ability to create disposable environments on demand (via test-kitchen or any other solution) makes it possible to validate the changes on a pre-production environment. The branch and code-review strategy contributes to overall quality and to shared ownership of the code repository. It is above all an opportunity to have the code and the settings reviewed by a newcomer to the project or by a DBA who will check the parameters. A continuous integration platform re-runs all the tests, and a promotion mechanism (manual or automatic) rolls out the changes into production.
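What the continuous integration platform does with such a branch can be approximated by a script that runs each stage in order and stops at the first failure. This is a sketch under assumptions, not the configuration of any particular CI product: the commands are the ones from the session above, while `run_stage`, `pipeline` and the stage labels are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of a CI job: run each stage in order and stop at the first failure.
# run_stage, pipeline and the stage labels are hypothetical, not part of any tool.
run_stage() {
  local label="$1"
  shift
  echo "== $label"
  "$@" || { echo "!! $label failed" >&2; return 1; }
}

pipeline() {
  run_stage "lint"    ansible-lint roles/mysql &&
  run_stage "dry-run" ansible-playbook -i inv/amz -l db-servers configure.yml --check --diff &&
  run_stage "apply"   ansible-playbook -i inv/amz -l db-servers configure.yml --diff &&
  run_stage "tests"   env ENV=amz rake -f tests/Rakefile spec:mysql
}
```

Calling `pipeline` from the CI job gives the same order as the manual session: a lint error or a failing serverspec test stops the job before anything is promoted.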

#### Fourth age: comfort level

| Why it works | Why it does not work |
| --- | --- |
| There are several testing levels that contribute to the code quality. | Stop it now, it works. |
| I make the most of the versioning provided by the SCM to version my whole infrastructure. | |

Conclusion: from craftsman to craftsman, the circle is complete

Therefore, for sysadmins and DevOps, the deployment tool becomes a kind of sheepdog. It is ultimately the one that becomes the new pet, the one you really need and take the greatest care of. The one whose every line of code is written with love and with the highest possible quality, since it is what allows you to handle such a fleet of servers and applications.

The transformation of the Ops profession is ultimately not a challenge to its intrinsic values. It is rather a change in the object those values focus on. Instead of becoming attached to machines, Ops now become attached to the automaton (and to the code that guides it) that keeps them alive, while retaining the same concern for a job well done, the very thing that makes it a pleasure to get up every morning.

What about tomorrow?

You are proud of your work, yet new challenges lie ahead: cloud (IaaS, auto-scaling, PaaS), containerization (Swarm, Kubernetes ...), application clusters (MongoDB, Cassandra, Kafka, Spark ...). The difference? Even shorter container lifetimes, and cluster mechanisms that take part in the life of the herd. In the end, it does not matter: you should be ready, since you have put in place all the good practices that prepare you for change (in tools and technologies).