Joyful wind of change: A software craftsmanship short tale

This is the story of a team: 11 aspiring software craftsmen who decided to change things and get their job done in a better way. The story takes place between the 30th and the 50th iteration of a software product's development. The product is a website serving over 2 million regular users and providing legal information and services to 65 million French citizens.

Chapter one: Start from what hurts and set a direction

Leaky pipeline

It is normal that the build fails. There are some automated E2E tests that fail randomly. Just try again, hopefully it will work next time.

This was the standard answer given to every new developer who struggled to get a first user story through the build. For the record, the build took about 45 minutes at the time. Almost nobody was shocked anymore, as if everyone had simply got used to the pain.

Starting from the 30th iteration, new people joined the project. With fresh blood in the team, this situation became less and less acceptable. So we decided to sit down for one hour and discuss our pains and how to deal with them.

Initially, this workshop was supposed to focus on improving our development flow. But it was no surprise when the test strategy became our main subject. The unstable 45-minute build was pointed out by almost everyone.

Here is a non-exhaustive list of the pains that were raised:

- Build duration

- Instability

- Random failures

- Depends on external systems

- Hard to maintain

- Too many integration tests

- Unknown test coverage

- Lack of confidence

- Long feedback loop

- Time lost analysing build failures that are often not caused by a regression

Our first idea was to run the tests just once a day instead of continuously validating every change to our code base. Fortunately, we did not follow this direction for long, since nobody was ready to surrender to mediocrity. We soon figured out that something was wrong with the way we were writing tests. Even though End-to-End tests simulate a real user and seem pretty easy to write, we could no longer base our entire test strategy on them. They had already cost us too much.

However, not everyone in the team had the same experience with tests. For instance, the difference between unit and integration tests was not clear to most of the team. That was an opportunity to clarify things.

We started by defining a common vocabulary and the intention behind each test category:

- Unit tests: To test a single behaviour of a code unit (mostly a class, in our case)

- Integration tests: To test collaboration between classes and components

- End to End tests: To test a functional scenario (the app is considered as a black box and we test it from the user perspective)
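To illustrate the first two categories, here is a minimal sketch in Python. It is not taken from the project: the classes `FeeCalculator`, `InMemoryRequestStore` and `RenewalService`, as well as the fee amounts, are made up for the example.

```python
import unittest


class FeeCalculator:
    """Hypothetical code unit: computes a fee from the applicant's age."""

    def fee_for(self, age: int) -> int:
        # The amounts are invented for the example.
        return 17 if age < 15 else 86


class InMemoryRequestStore:
    """Hypothetical component: keeps submitted requests in memory."""

    def __init__(self) -> None:
        self.saved = []

    def save(self, request: dict) -> None:
        self.saved.append(request)


class RenewalService:
    """Hypothetical component that makes the two classes above collaborate."""

    def __init__(self, calculator: FeeCalculator, store: InMemoryRequestStore) -> None:
        self.calculator = calculator
        self.store = store

    def submit(self, name: str, age: int) -> int:
        fee = self.calculator.fee_for(age)
        self.store.save({"name": name, "fee": fee})
        return fee


class FeeCalculatorUnitTest(unittest.TestCase):
    # Unit test: one behaviour of one class, no collaborator involved.
    def test_minor_pays_reduced_fee(self):
        self.assertEqual(FeeCalculator().fee_for(12), 17)


class RenewalServiceIntegrationTest(unittest.TestCase):
    # Integration test: checks that the classes work together.
    def test_submitted_request_is_stored_with_its_fee(self):
        store = InMemoryRequestStore()
        service = RenewalService(FeeCalculator(), store)
        service.submit("Alice", 30)
        self.assertEqual(store.saved, [{"name": "Alice", "fee": 86}])
```

An End to End test would not import any of these classes. It would drive the deployed website the way a user does, which is why it is slower and more exposed to external systems.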

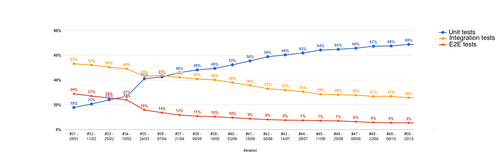

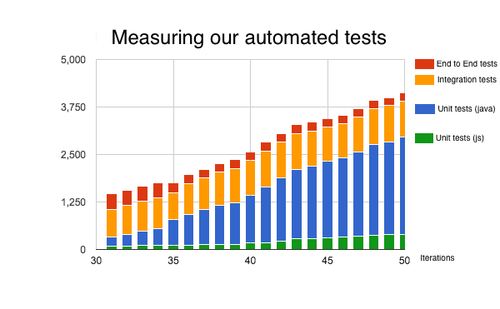

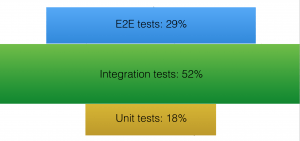

After that, we set a direction in which to focus all our energy. We needed to test faster and more reliably, which brought us all to the same newly born desire: more Unit tests than Integration tests, and more Integration tests than End to End tests. Let’s be clear: we did not invent the testing pyramid model. We just adopted it collectively.

Once we decided to take care of our testing pyramid (which at that time looked more like a UFO), we needed to define how to do so. But this is another story. The one-hour workshop was over.

The journey

How many iterations are you ready to give up in order to improve your development process?

The challenge was not to stop making features and write tests until we got a perfect pyramid. Taking care of the pyramid was never the purpose. It was more like a tool we had collectively adopted to help us deliver more value, more reliably and faster. And sometimes taking a step backward can be the best way forward.

As we would not stop writing code, we had to make sure not to make things any worse. So we thought about using TDD, a coding technique that consists of writing tests before writing code. The problem was that only a few team members were familiar with TDD and used it every day. Most of us barely knew what the acronym stood for.

And here came a little miracle. Somehow, despite all the urgent and very important upcoming features, the tech lead managed to persuade our sponsors to fund a 3-day TDD training for the whole team. Not only the "bad students" of the developers' class got trained: everybody was involved.

In other words, for 3 days we did not move a single post-it on our kanban. We came to the office and, instead of talking about user stories with the product owner, we focused on the red-green-refactor cycle with the trainer. And instead of coding features, we coded a Connect Four game.
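For readers who have never seen the cycle, here is a minimal sketch of what one red-green-refactor step on a Connect Four kata might look like. The code written during the training is not shown in this article, so the `Board` class, its `drop` method and the three tests are assumptions made for illustration.

```python
ROWS, COLUMNS = 6, 7  # standard Connect Four grid


class Board:
    """Minimal Connect Four board, meant to be grown test by test."""

    def __init__(self) -> None:
        # One list per column; discs stack from the bottom up.
        self.columns = [[] for _ in range(COLUMNS)]

    def drop(self, column: int, disc: str) -> int:
        """Drop a disc in a column and return the row where it lands (0 = bottom)."""
        if len(self.columns[column]) >= ROWS:
            raise ValueError("column is full")
        self.columns[column].append(disc)
        return len(self.columns[column]) - 1


# Red: in a TDD session, tests like these come first and fail,
#      because Board.drop does not exist yet.
# Green: the simplest implementation (above) makes them pass.
# Refactor: with the tests as a safety net, the code is then cleaned up.
def test_first_disc_lands_on_bottom_row():
    assert Board().drop(3, "red") == 0


def test_second_disc_stacks_on_top_of_the_first():
    board = Board()
    board.drop(3, "red")
    assert board.drop(3, "yellow") == 1


def test_full_column_rejects_a_disc():
    board = Board()
    for _ in range(ROWS):
        board.drop(0, "red")
    try:
        board.drop(0, "yellow")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for a full column")
```

Each test describes one behaviour and is small enough to pass within minutes, which keeps the feedback loop short.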

After the training, everyone was keen to apply TDD to their next ticket. Looking back at what happened afterwards, I think it was an important turning point. Our team is now more confident about what we can accomplish in the future. However, we still had to overcome our legacy. And we knew that it would be more like a marathon than a sprint.

We realized that we would not have to bear the burden of bad tests for long once we started deleting some of them. Oh yes! We did so, and we were not even afraid. We were no longer obsessed with testing everything. Instead, we focused on testing things the right way.

The most painful tests were the first to get dropped. And by the most painful ones, I mean those that failed most often. A few E2E tests were also quite easy to remove, as they were simply unnecessary. There was not much value in testing each business rule at this level.

For the tests we could not easily drop, we created a test-to-shoot table next to our Kanban. Whenever a build failed because of a test, we wrote its name down, or added a tally mark next to it if it was already there. The tests on the table were added to our backlog and prioritized according to their number of tally marks.

Handling these tickets was mainly about answering a few questions:

- Does it match a user scenario?

- What is the intent of the test?

- Does it provide any additional value to existing tests?

- Is it possible to move it to the bottom of the pyramid (i.e., replacing it with integration and unit tests)?
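Our table was a physical board, but its prioritization logic is simple enough to sketch in a few lines. The class name `FailingTestBoard` and the test names below are hypothetical.

```python
from collections import Counter


class FailingTestBoard:
    """Code equivalent of the paper table: one tally mark per build broken by a test."""

    def __init__(self) -> None:
        self.marks = Counter()

    def record_build_failure(self, test_name: str) -> None:
        # First failure writes the name down; later ones add a mark next to it.
        self.marks[test_name] += 1

    def backlog_order(self) -> list:
        # Tests that broke the build most often come first.
        return [name for name, _ in self.marks.most_common()]


board = FailingTestBoard()
for name in ["checkout_e2e", "login_e2e", "checkout_e2e",
             "search_e2e", "checkout_e2e", "login_e2e"]:
    board.record_build_failure(name)

print(board.backlog_order())
# ['checkout_e2e', 'login_e2e', 'search_e2e']
```

Each name at the top of that list then goes through the questions above before being deleted, kept or moved down the pyramid.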

We relished each victory, no matter how small. Things went quite fast, indeed. Build after build, story after story, and sprint after sprint, we could clearly see our pyramid taking shape.

Deleting bad tests was not the only way to reverse the trend. We also wrote far more tests than before (see the figure below), but the ones we wrote ran faster. The 45-minute build is now 13 minutes long.

Chapter two: Keep learning and sharing

A virtuous circle

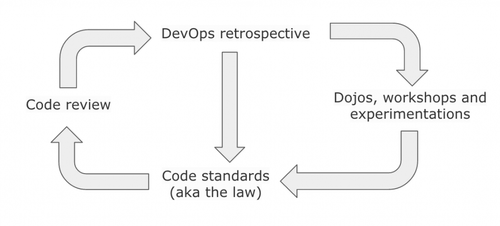

How could we improve things?

Improving continuously became our new obsession. By improving the way we tested, we became more confident about our code. And by adopting TDD, coding became more and more fun.

But beyond the development process, communication was the key to making the code cleaner, letting our design emerge, managing our technical debt, creating new apps… And above all, efficient communication led us to define our own way of doing all this.

We all know that communication between developers can sometimes be a dangerous exercise. Passionate developers talking about code may end up in endless conversations. On the other hand, avoiding communication will certainly not lead to any change and will lock the team into repeating the same mistakes over and over.

Code review: Find defects, spread knowledge and challenge ideas

At the beginning, our code reviews used to look like this: "I’ve taken a look at your code. It is quite OK. I’ve just modified a couple of things and it is ready to be merged".

Here too, we have come a long way. This is how it works now.

The reviewer reads the code and tries to understand it. Whenever he finds something wrong or hard to understand, he writes it down on a piece of paper. He tries to locate the defects, which may be functional (does the code deliver the desired feature?) or technical (does the code follow our code standards? Is there a risk regarding our non-functional requirements?). After that, the reviewer adds his suggestions for solving those issues, and brings his checklist to discuss with the author face to face.

The conversation is pretty simple: for each point in the list, the author can either accept or reject it. In case of rejection, he explains his point of view. The reviewer can then either agree and drop the point, or the two may still disagree. When this happens, the whole team may be interested in the discussion. This is why the point is removed from the review and becomes a topic to be addressed collectively during the DevOps retrospective.

What is a DevOps retrospective?

Before defining what it is, let's start with what it is not. A DevOps retrospective is not:

- The sprint retrospective (which involves the whole team and during which we try to enhance our process for the next sprint)

- The developers' supreme court

It is a ritual that allows us to make decisions and to share knowledge about our code and infrastructure. In addition to the sprint retrospective, we adopted this ritual to focus on technical issues. It is a one-hour meeting for DevOps only (all these team members work together on development and infrastructure).

We start by dot-voting on the topics in the retrospective backlog: each team member has 3 votes to distribute. Then we address the topics, starting with the most voted one. Each topic is time-boxed to between 5 and 15 minutes, depending on the topic itself.

When the time is over, each topic can have one of the following outcomes:

- A specific subject has been presented

- A new code standard is adopted by the team

- A technical task is created and added to our product backlog

If we still need to dig deeper into a specific subject, we schedule a dedicated workshop. By the way, our team loved these workshops, since they often gave us the opportunity to code together. It was pretty fun, and we experimented with different approaches such as randori.
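The dot-voting step that opens the retrospective can be sketched as follows. We did it with stickers on a board, so the function `rank_topics`, the member names and the topics are purely illustrative, and allowing several votes on the same topic is an assumption.

```python
from collections import Counter

VOTES_PER_MEMBER = 3  # each team member has 3 votes


def rank_topics(ballots: dict) -> list:
    """Return (topic, votes) pairs, most voted topic first.

    `ballots` maps a member name to the list of topics they voted for.
    A member may put several votes on the same topic (assumption).
    """
    tally = Counter()
    for member, votes in ballots.items():
        if len(votes) > VOTES_PER_MEMBER:
            raise ValueError(f"{member} used more than {VOTES_PER_MEMBER} votes")
        tally.update(votes)
    return tally.most_common()


ballots = {
    "Ana": ["mocking policy", "mocking policy", "package layout"],
    "Ben": ["mocking policy", "logging format", "package layout"],
    "Cleo": ["mocking policy", "package layout", "logging format"],
}

for topic, votes in rank_topics(ballots):
    print(f"{votes} votes: {topic}")
# 4 votes: mocking policy
# 3 votes: package layout
# 2 votes: logging format
```

The resulting order is the agenda: topics are addressed from the top until the hour is over.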

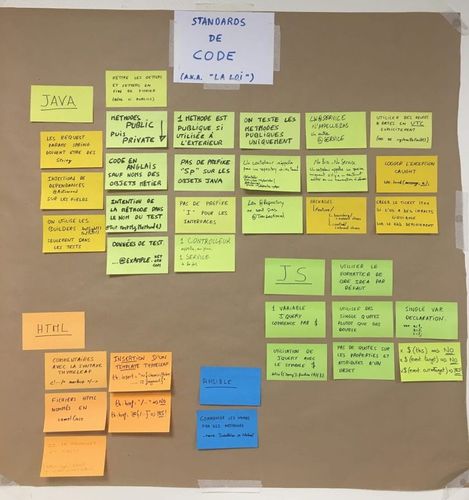

Why code standards?

We strive to write clean code for both machines and humans. Code standards help everyone in the team read and write code. Moreover, once a standard is displayed on the "law" table, it helps avoid endless conversations about naming and conventions. In other words, standards are the best way to prevent Parkinson’s law of triviality, and this allows us to focus on what is more fun!

How the design has emerged

This is what Wikipedia says about emergent design in agile software development:

Emergent design is a consistent topic in agile software development, as a result of the methodology's focus on delivering small pieces of working code with business value. With emergent design, a development organization starts delivering functionality and lets the design emerge. Development will take a piece of functionality A and implement it using best practices and proper test coverage and then move on to delivering functionality B. Once B is built, or while it is being built, the organization will look at what A and B have in common and refactor out the commonality, allowing the design to emerge. This process continues as the organization continually delivers functionality. At the end of an agile release cycle, development is left with the smallest set of the design needed, as opposed to the design that could have been anticipated in advance. The end result is a simpler design with a smaller code base, which is more easily understood and maintained and naturally has less room for defects.

In other words, don’t bother with big upfront design. Instead, focus on making the software work properly, and if you craft it well, the design will naturally emerge from refactoring cycles.

In real life, many teams fall into the trap of taking the easy way. To take the same example as the Wikipedia article: once B is built, nobody looks at what A and B have in common and refactors out the commonality.
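To make the A and B example concrete, here is a small hypothetical sketch. The two features, the validation rule and all function names are invented for illustration and are not from our code base.

```python
# Before: feature A was delivered first, then feature B copied its rule.
def passport_request_is_valid_before(request: dict) -> bool:  # feature A
    return bool(request.get("name", "").strip()) and request.get("age", -1) >= 0


def id_card_request_is_valid_before(request: dict) -> bool:  # feature B
    return (
        bool(request.get("name", "").strip())
        and request.get("age", -1) >= 0
        and "address" in request
    )


# After: once B exists, the commonality is visible and gets extracted.
def has_valid_identity(request: dict) -> bool:
    """Rule shared by A and B: a non-blank name and a non-negative age."""
    return bool(request.get("name", "").strip()) and request.get("age", -1) >= 0


def passport_request_is_valid(request: dict) -> bool:  # feature A
    return has_valid_identity(request)


def id_card_request_is_valid(request: dict) -> bool:  # feature B
    return has_valid_identity(request) and "address" in request
```

The behaviour is unchanged, so the existing tests keep passing. The `has_valid_identity` abstraction was not designed upfront: it emerged from the second feature.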

Here are some actions that, I believe, have helped us do a better job of letting our design emerge.

Don’t be afraid of talking about design

As we saw earlier, talking about code can be dangerous. It’s easy to understand that talking about design can be even more so. But talking about design helps us see the big picture of the software we are making. It gives us a clearer view of its boundaries and a better understanding of what should be decoupled and where abstractions and details belong. It also helps us define how to structure our code and set a direction for our energy.

We held a few workshops on this topic. And even when talking about design, we were never too far from the code. One way to refactor code is to split fat classes into skinnier ones. There are plenty of reasons to do so: reuse, SOLID principles, or simply to divide and conquer complexity. Naturally, this produces a large number of collaborating classes, which may confuse developers. The aim of the workshops was to imagine what the components we were building should look like. This exercise gave us concrete answers about how the classes should be structured in packages.

In the end, talking about design sometimes leads to deciding on a direction to take. While doing this exercise, keep in mind the need to remain pragmatic and avoid dogmatic rationalization. The keyword to start with should always be "why".

Apply the Boy Scout rule

Always leave the campground cleaner than you found it.

Let’s get back to the example of the pieces of functionality A and B, and the need to look at what they have in common and refactor out the commonality. This is where the Boy Scout rule applies. Leaving the code "cleaner" than you found it should be the aim. And this can lead to spectacular changes.

The main trap is to think you need to start a big bang refactoring. This is not what the Boy Scout rule is about: what we want to leave cleaner is the campground, not the whole forest! Big refactoring is not even Agile. Again, over-refactoring and over-designing are not the purpose. If a change would not help deliver value more reliably and faster, then YAGNI (You Aren't Gonna Need It).

Chapter three: Happily ever after

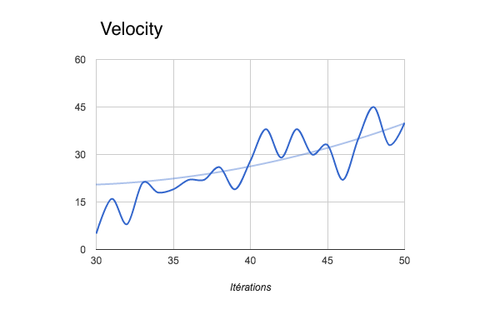

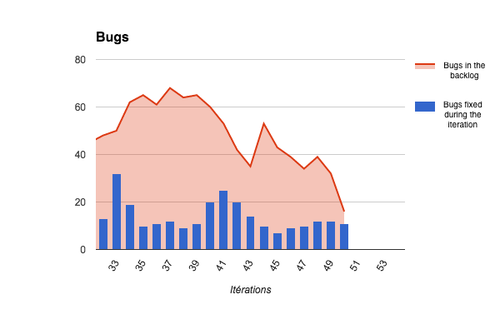

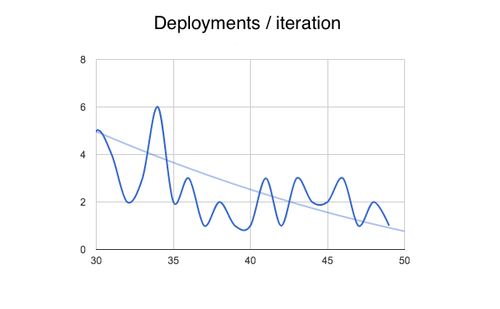

To conclude, let’s take a look at what happened during this 20-iteration journey.

The team has delivered more features (the number of features follows the same trend as velocity).

We have far fewer bugs.

Our delivery process is more stable.

And by enforcing collective ownership of the code, everyone in the team is happy and can take a break whenever they want. Vacations are no longer a problem to deal with.

We do not depend on 10x superstar developers. Instead, we have built a 10x team and we trust it.

Why have I written this article?

Beyond the philosophy, I wanted to share a concrete implementation of the software craftsmanship mindset.

It is not a magic formula. It is just about sharing the pride of a group of software professionals getting their job done in a better way.