I am a developer: why should I use Docker?

I have noticed that containers are increasingly popular, and that the IT community nowadays is particularly enthusiastic about Docker. Colleagues keep trying to convince me that containers will make my daily tasks quicker and simpler. But how?

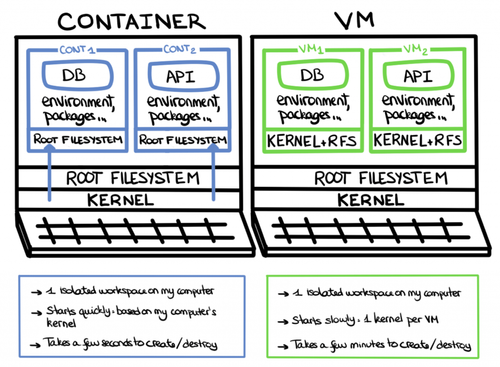

First of all, what are the differences between a Virtual Machine (VM) and a container?

I now work with Docker. It is the tool that democratized containerization, and it remains the most popular one. Many open source tools are based on it, such as Molecule (used to test Ansible code) or Kubernetes (used for container orchestration).

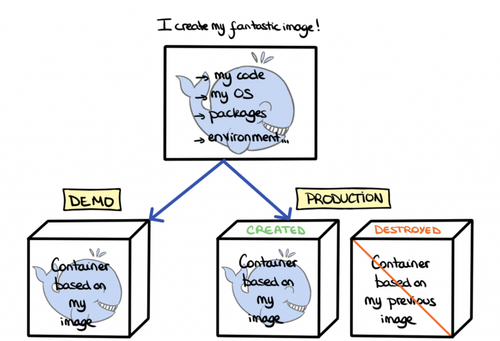

There are two main concepts to grasp to understand how Docker works: images and containers. An image is a snapshot of your application, installed with all its dependencies and ready to run. This image is then used to create containers, “boxes” in which the code runs. A container can be used and then easily destroyed.

You can compare it to object-oriented programming: an image would be a class, and a container would be an instance of that class.
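To make the analogy concrete, here is a small Python sketch. It does not talk to Docker at all: the `Image` and `Container` classes and the `my-app` name are purely illustrative, and only mimic how one image can produce several independent, disposable containers.

```python
from dataclasses import dataclass, field
from itertools import count

_ids = count(1)  # hands out a new id for every container created


@dataclass(frozen=True)
class Image:
    """Plays the role of the class: an immutable, reusable template."""
    name: str
    tag: str = "latest"

    def run(self) -> "Container":
        # Every call creates a fresh, independent container from the same image.
        return Container(image=self)


@dataclass
class Container:
    """Plays the role of the instance: created from an image, then thrown away."""
    image: Image
    id: int = field(default_factory=lambda: next(_ids))
    running: bool = True

    def destroy(self) -> None:
        # Destroying a container leaves the image (and other containers) untouched.
        self.running = False


image = Image("my-app", "1.0")
first, second = image.run(), image.run()
first.destroy()
print(first.running, second.running, first.image is second.image)
```

Destroying `first` has no effect on `second` or on the image itself, which is the property that makes containers disposable.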

On the whole, the container principles are quite simple:

- one process per container

- a container is immutable

- a container is disposable

A dev environment identical to production

"A bug? It’s not my fault, it works on my computer!"

Whether you are a developer or part of the operations staff, you have heard the infamous saying “it works on my computer”. The problem is generally due to configuration differences between environments (versions, permissions…), which are hard to debug.

The first step on the way to environment standardization has been achieved through the use of virtual machines. Unfortunately, the configurations and updates need to be repeated on each new machine or environment.

If my applications are stateless, configured through environment variables, and respect the twelve-factor app principles, every container built from the same image behaves the same way given the same environment variables. This means that if my tests pass for one container, they will pass for all the containers created from that same image. Of course, using containers does not exempt you from writing tests!

The image that passed the tests is therefore the one used in production, and I can be confident that it will behave the way I want it to. Similarly, if I encounter a bug in production, I simply need to start a container from that image with the production configuration to reproduce it.
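As an illustration of configuration through environment variables, here is a minimal Python sketch. The variable names `DATABASE_URL` and `LOG_LEVEL` and the `Settings` class are assumptions made for the example, not part of any particular project.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build the app configuration from environment variables only."""
    return Settings(
        database_url=env["DATABASE_URL"],        # required: raises KeyError if missing
        log_level=env.get("LOG_LEVEL", "INFO"),  # optional, with a default
    )


# The same code (and the same image) runs everywhere; only the environment changes.
dev = load_settings({"DATABASE_URL": "postgres://localhost/dev", "LOG_LEVEL": "DEBUG"})
prod = load_settings({"DATABASE_URL": "postgres://db.internal/prod"})
print(dev)
print(prod)
```

Because nothing environment-specific is baked into the code, the image tested in CI can be the exact image that is later started in production with different variables.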

Note that even if you do not use containers in production, you can still use containers to simplify the application’s development.

Nevertheless, keep in mind that learning how to use Docker takes some time. It is for you to decide whether your priority is to work on a simpler architecture or to work in an environment that matches CI/production as early as possible.

A simplification of some of my daily tasks

"It makes onboarding so much quicker!"

Today is my first day on the project. Fortunately, my team uses Docker! I only need to install Docker, start a container based on the latest version of the code, and I am ready to go! I am now sure that I am working with the same tools and the same versions of these tools as the rest of the team, whatever OS I use.

This also greatly simplifies upgrades, as everyone is sure that the whole team is up to date.

"I do not wish to pollute my computer with all the tools I download for my various projects."

As a developer, I get to work on various projects, and therefore accumulate different tools. I now face a problem: I have many versions of the same tool installed on my computer. For instance, I have two versions of PostgreSQL. When I switch projects, I need to deactivate one version and activate the other. I lose precious time doing that, and I am never sure I have uninstalled everything correctly if I decide to delete one of them.

Using a containerized database solves my problem: it is easy to install and to use, and I am certain I have correctly deleted everything when I destroy my container.
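Here is a sketch of what this could look like for the two PostgreSQL versions mentioned above. The Python helper only builds the `docker run` command lines and prints them without executing anything; the container names, host ports, password and version tags are placeholders chosen for the example.

```python
import shlex


def postgres_run_command(name: str, version: str, host_port: int, password: str) -> list[str]:
    """Build a `docker run` command for a throwaway PostgreSQL container."""
    return [
        "docker", "run",
        "--detach",                                  # run in the background
        "--rm",                                      # remove the container once it stops
        "--name", name,
        "--env", f"POSTGRES_PASSWORD={password}",    # required by the official postgres image
        "--publish", f"{host_port}:5432",            # host port -> PostgreSQL's default port
        f"postgres:{version}",
    ]


# One container per project, each with its own PostgreSQL version and host port.
project_a = postgres_run_command("project-a-db", "13", 5433, "example")
project_b = postgres_run_command("project-b-db", "16", 5434, "example")
print(shlex.join(project_a))
print(shlex.join(project_b))
```

Both versions can run side by side, and `docker stop project-a-db` is enough to get rid of the first one, since `--rm` removes the container as soon as it stops.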

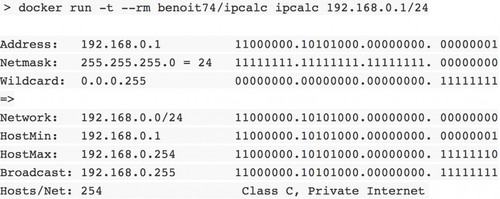

Borrowing your neighbour’s tools

Let’s take an example: my computer is a Mac, but I would like to use a very useful Linux tool: ipcalc. Before learning about containers, I would have resigned myself to finding a similar Mac tool, but now, I can use ipcalc directly without any trouble.

“I do not want to reinvent the wheel”

One of the advantages of containers is that you have off-the-shelf solutions. It is indeed unnecessary to code a new solution from scratch to solve a problem that has already been addressed.

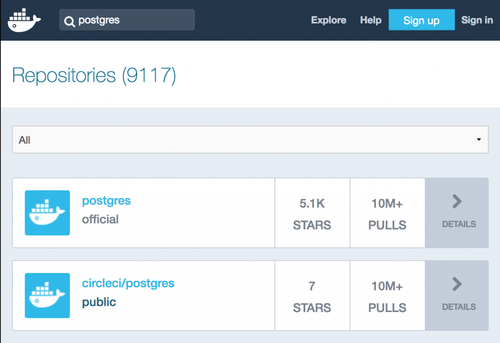

There are many places - called “registries” - where you can find images from which you can build containers. As I use Docker, I went onto https://hub.docker.com/ to discover all the existing images and how to use them. Most images are open source, so anyone can contribute to improve them.

Some indicators can help you quickly select the image that best fits your needs: the number of stars (given by the users), the image’s download count, the date of the last update, and when it was last downloaded.

Regardless of these indicators, make sure to always check what is inside the image, as it could contain malware (backdoored Docker images have been found on Docker Hub).

But what if you do not want to publish your images publicly on Docker Hub? No problem! You can create your own registry based on a Docker image (for example: https://docs.docker.com/registry/) and then deploy your images there.
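As a rough sketch of that workflow, assuming the official `registry:2` image listening on port 5000 and a hypothetical local image called `my-app:1.0`, the commands involved could be assembled as follows. The Python code only prints the command lines, it does not run them.

```python
import shlex

# Starts a local registry from the official `registry` image.
START_REGISTRY = [
    "docker", "run", "--detach",
    "--publish", "5000:5000",
    "--name", "registry",
    "registry:2",
]


def publish_commands(image: str, registry: str = "localhost:5000") -> list[list[str]]:
    """Return the `docker tag` and `docker push` commands for a private registry."""
    target = f"{registry}/{image}"  # the registry host becomes part of the image name
    return [
        ["docker", "tag", image, target],
        ["docker", "push", target],
    ]


for command in [START_REGISTRY, *publish_commands("my-app:1.0")]:
    print(shlex.join(command))
```

The key point is that the registry address is simply a prefix of the image name: tagging the image with that prefix is what tells `docker push` where to send it.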

“POCs don’t frighten me anymore!”

I can work on my projects without fear of downloading useless packages when I need to compile a file, or of installing a tool that conflicts with those I already have on my computer. I just need to use a container and destroy it afterwards, and my computer will be as good as new.

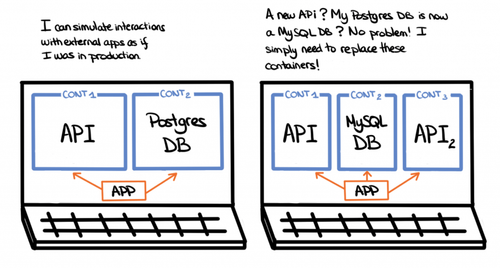

I am also more inclined to build POCs or to test new tools, as I can install them in a container that I can delete as soon as I am done with it. It is very quick - creating or destroying a container takes only a few seconds - and it enables me to simulate the exact environment in which I wish to work. I can also easily simulate calls to a database or to an API. If it does not work, it takes me only a few seconds to destroy my container and then recreate it!

My feedback loop is considerably reduced

“Testing my app is so complicated!”

On most of my projects, I have an application, and it calls an API and a database. I would like to be able to test all of these interactions: it is possible to do so on my computer, but much more complicated when it comes to continuous integration.

As seen before, Docker enables me to quickly spin up a database. In the same way, if I have access to the API’s code, I can build its image and containerize it. I can thus start a test API in mere seconds, and use it in my continuous integration. If I do not have access to the API, I can always mock it and use the mock in the same way.

Docker thus allows me to quickly and simply recreate my project’s topology.
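For the case where the real API is out of reach, a mock can be very small. The sketch below uses only Python's standard library; the `/users/1` endpoint and its payload are invented for the example. Packaged in an image, such a mock would typically listen on `0.0.0.0` instead of `127.0.0.1` so that other containers can reach it.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class MockApiHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Hypothetical endpoint standing in for the real API.
        if self.path == "/users/1":
            status, payload = 200, {"id": 1, "name": "Ada"}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the test output quiet


def start_mock_api(port: int = 0) -> HTTPServer:
    """Start the mock in a background thread; port 0 picks any free port."""
    server = HTTPServer(("127.0.0.1", port), MockApiHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


server = start_mock_api()
connection = http.client.HTTPConnection("127.0.0.1", server.server_port)
connection.request("GET", "/users/1")
response = connection.getresponse()
status = response.status
user = json.loads(response.read())
connection.close()
server.shutdown()
server.server_close()
print(status, user)
```

The application under test only needs the mock's address, passed for instance through an environment variable, so the same tests can run against the mock in CI and against the real API elsewhere.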

“It takes so much time to test my app!”

Until now, in order to test my code in an environment that matches production, I asked the client to provide me with a VM. This could take a few weeks. What a waste of time! And if ever I made a mistake in my configuration and wished to start over, I had to go through the process all over again - and pay additional fees.

A container enables me to do so without needing the client’s VM, and to confirm that my app and its dependencies communicate correctly. Keep in mind that a VM takes longer to build and destroy than a container, and that it reserves a fixed amount of the host machine’s resources when it is launched, whereas a container uses only the resources it needs.

Conclusion

Advantages

As a developer, using containers can make my life easier:

- It is easier to share the same environment between developers.

- The dependencies are the same, whatever the environment (I am sure that my colleagues and I have the same versions of the tools we use).

- Docker is cross-platform: OS differences between devs are no longer an issue.

- I work in an isolated environment.

- I have access to off-the-shelf solutions thanks to the open source images available online.

- I can do POCs quickly thanks to Docker images without polluting my computer.

- Containers are quicker to build and destroy than VMs.

- It accelerates the feedback loop and therefore the deployment in production.

- It forces me to apply development best practices, such as the twelve-factor app.

To consider

Careful not to rush headlong into Docker:

- Not every application is suited to containers: it should respect the twelve-factor app rules.

- Docker offers many functionalities that you must experiment with before creating complex topologies.

- Docker can be less pertinent on smaller projects.

- Docker introduces an extra complexity: a bug can be caused by my configuration, my Docker topology, or by my app.

- Containers share my computer’s OS kernel:

  - If I use specific functionalities from my kernel, my image might not be compatible with another machine (e.g. images built for Windows cannot be used on Linux, and vice versa).

  - A containerized process can exploit a weakness in the kernel and impact all the other processes on the host machine.

Keep in mind that Docker is only one tool among many others, for example rkt (CoreOS) or Windows Containers (Microsoft). It is up to you to choose yours!

Sources

- https://www.contino.io/insights/beyond-docker-other-types-of-containers

- https://www.aquasec.com/wiki/display/containers/Docker+Alternatives+-+Rkt%2C+LXD%2C+OpenVZ%2C+Linux+VServer%2C+Windows+Containers

- https://www.lemagit.fr/conseil/Conteneurs-VS-VM-les-entreprises-pronent-la-mixite

- https://blog.wescale.fr/2017/01/23/introduction-a-rkt/

- https://docs.docker.com/engine/reference/commandline/run/

- https://docs.docker.com/registry/

- https://12factor.net/

- https://www.lemagit.fr/conseil/Comprendre-les-enjeux-des-containers-as-a-service

- https://deliciousbrains.com/vagrant-docker-wordpress-development/

- https://www.bleepingcomputer.com/news/security/17-backdoored-docker-images-removed-from-docker-hub/

- https://blog.octo.com/applications-node-js-a-12-facteurs-partie-1-une-base-de-code-saine/

- https://github.com/benoit74/docker-ipcalc