Hybrid Cloud and Amazon-based IaaS - 2

Following on from the previous article (yes, really :-)), we will use Amazon Web Services (AWS) to address three topics related to hybrid IaaS clouds: the connection between "on-premise" IT and a public cloud infrastructure, the policy for managing VM images, and finally licensing.

How should "on-premise" IT be connected to a public cloud infrastructure?

Two complementary approaches allow applications or components distributed across distinct (that is, geographically separate) infrastructures to communicate:

- Through software interaction: create wrappers (web services) to handle communication between the different software blocks. These web services can be secured with SSL to keep the data exchanged between the internal IT and the public VMs confidential.

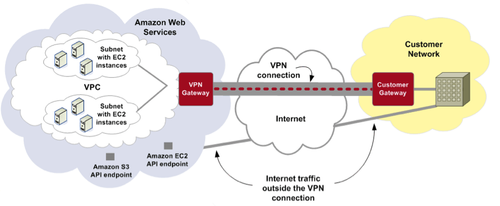

- Through network integration and security: the different infrastructures are treated as a single whole from the network point of view. Using mechanisms such as secure tunneling, each cloud platform becomes an extension of the "on-premise" IT. Unlike other cloud providers, Amazon offers a Virtual Private Cloud (VPC) service, which can create an IPsec tunnel (authentication of both endpoints and encryption of the transported data) between an Amazon data center and your "on-premise" IT. According to Amazon, this feature requires 7% additional network bandwidth because of encryption and encapsulation.

Source: http://aws.amazon.com/
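To put Amazon's 7% figure into perspective, here is a small back-of-the-envelope sketch. It assumes the overhead is a flat 7% on top of the application traffic (real IPsec overhead varies with packet size and cipher), and the 50 and 100 Mbit/s figures are purely illustrative.

```python
# Overhead quoted by Amazon for IPsec encryption and encapsulation (7%).
IPSEC_OVERHEAD = 0.07


def wire_bandwidth(payload_mbps: float, overhead: float = IPSEC_OVERHEAD) -> float:
    """Link bandwidth consumed to carry `payload_mbps` of application traffic."""
    return payload_mbps * (1 + overhead)


def usable_payload(link_mbps: float, overhead: float = IPSEC_OVERHEAD) -> float:
    """Application traffic that still fits on a `link_mbps` link once the overhead is paid."""
    return link_mbps / (1 + overhead)


if __name__ == "__main__":
    # Hypothetical figures: 50 Mbit/s of application traffic, a 100 Mbit/s link.
    print(f"{wire_bandwidth(50):.1f} Mbit/s on the wire")  # 53.5
    print(f"{usable_payload(100):.1f} Mbit/s of payload")  # 93.5
```

In other words, a 100 Mbit/s link to the Amazon data center carries roughly 93.5 Mbit/s of useful traffic once the tunnel is in place.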

This service requires an advanced router that supports the IKE (Internet Key Exchange) protocol, to negotiate the security attributes of the VPN, and BGP (Border Gateway Protocol) peering, to exchange network routes between the Amazon data center and your infrastructure.

Beyond the transparency it provides to applications, the main advantage of an IPsec tunnel is that, on the Amazon side, VMs are not reachable from the public Internet, only through the VPN entry point. On the downside, setting up a VPN can require significant changes to the network architecture (filtering rules, subnets, bandwidth allocation, ...).

Currently, the VPC service is in beta, with the following limitations:

- Some AWS services, such as Auto Scaling or Elastic Load Balancing, are not compatible with VMs running inside a VPC. You will therefore have to deploy and configure your own machines to provide these functions.

- IPv6 is not supported.

- The virtual router (on the Amazon side) does not support security groups, the Amazon mechanism that acts as a virtual firewall controlling which network traffic may reach instances.

- Only a single private cloud can be created, with a maximum of 20 subnets.
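The 20-subnet and IPv4-only limits are worth checking when planning the address space. The sketch below uses Python's standard `ipaddress` module to carve a VPC address block into subnets and reject plans that break those beta limits; the 10.0.0.0/16 block and the /24 subnet size are hypothetical, and the limits are hard-coded from the list above rather than queried from AWS.

```python
import ipaddress
import itertools

# Limit of the VPC beta listed above (hard-coded here, not queried from AWS).
MAX_SUBNETS = 20


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int) -> list:
    """Return the first `count` subnets of size /new_prefix carved out of `vpc_cidr`."""
    vpc = ipaddress.ip_network(vpc_cidr)
    if vpc.version != 4:
        raise ValueError("IPv6 is not supported by the VPC beta")
    if count > MAX_SUBNETS:
        raise ValueError(f"{count} subnets requested, the limit is {MAX_SUBNETS}")
    # subnets() yields the /new_prefix blocks in address order; take only what we need.
    subnets = list(itertools.islice(vpc.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError(f"{vpc_cidr} cannot hold {count} /{new_prefix} subnets")
    return subnets


if __name__ == "__main__":
    # Hypothetical plan: a 10.0.0.0/16 VPC split into three /24 subnets.
    for net in plan_subnets("10.0.0.0/16", 24, 3):
        print(net)  # 10.0.0.0/24, then 10.0.1.0/24, then 10.0.2.0/24
```

Checking these constraints before touching the network architecture avoids discovering, halfway through the VPN setup, that the existing addressing plan needs more subnets than the beta allows.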

What policy for managing Amazon Machine Images?

To launch a VM, you need an image: an Amazon Machine Image (AMI). So if a company must manage a hundred or more VMs, how should it manage the AMIs these VMs are built from?

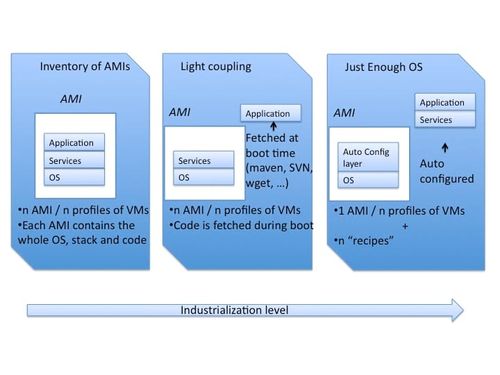

Amazon suggests three options: an inventory of AMIs, loosely coupled AMIs, and "Just-Enough OS" AMIs.

The inventory of AMIs is the first approach. Each AMI is associated with a "type" of VM: the OS, the technical stack and the code are fixed. Several VMs can be started from the same image, but if you have one "application server" VM and another "database server" VM, you will need two different AMIs. As the company adopts the Amazon cloud, the number of AMIs it has to manage will grow. Problems arise during updates: if you must apply a patch to an OS used by 30 AMIs, all 30 AMIs must be rebuilt once the update is done. The same goes for the technical stack and the applications!
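The update problem of the inventory approach can be made concrete with a small model: since every layer is baked into the image, patching any component forces a rebuild of every AMI that contains it. This is a minimal sketch; the `Ami` class, the image names and the component names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ami:
    """An AMI from the inventory: OS, technical stack and code are all baked in."""
    name: str
    os: str
    stack: str
    app: str


def amis_to_rebuild(inventory, component: str) -> list:
    """Names of the AMIs that must be re-bundled once `component` is patched."""
    return [ami.name for ami in inventory if component in (ami.os, ami.stack, ami.app)]


if __name__ == "__main__":
    # Hypothetical inventory: 30 AMIs sharing one OS, half app servers, half databases.
    inventory = [
        Ami(f"image-{i:02d}", "os-A", "tomcat" if i % 2 else "mysql", f"app-{i}")
        for i in range(30)
    ]
    print(len(amis_to_rebuild(inventory, "os-A")))    # 30: an OS patch touches every AMI
    print(len(amis_to_rebuild(inventory, "tomcat")))  # 15
    print(amis_to_rebuild(inventory, "app-7"))        # ['image-07']
```

The two approaches that follow reduce this count by moving the application layer, and then the stack, out of the image.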

The second method is to introduce a loose coupling between a VM's image and its final state. The application is now loaded when the VM boots: an AMI that contains an application server will load JEE archives from a predefined location (S3, a file server, ...). With this configuration, there is no need to bundle a new AMI to integrate a new release of the application binaries. For Java applications, this mechanism can simply be a Maven command executed at startup to retrieve artifacts from a repository inside the company. For other technologies, one can use a version control system (e.g. an SVN export) or a simple HTTP download (wget).
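The boot-time retrieval step can be sketched with Python's standard library, playing the role of the `wget` option mentioned above. This is a minimal sketch, not Amazon's mechanism: the repository URL and deploy directory are hypothetical, a private S3 object would need signed requests or AWS tooling, and the Maven or SVN variants would replace the download with a Maven command or an `svn export`.

```python
import shutil
import urllib.request
from pathlib import Path


def fetch_artifact(url: str, deploy_dir: str) -> Path:
    """Download the archive at `url` into `deploy_dir` and return its local path."""
    target_dir = Path(deploy_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    # Keep the file name from the URL, e.g. ".../myapp-1.0.war" -> "myapp-1.0.war".
    target = target_dir / url.rstrip("/").rsplit("/", 1)[-1]
    # urlopen handles http://, https:// and file:// URLs; stream the body to disk.
    with urllib.request.urlopen(url) as response, open(target, "wb") as out:
        shutil.copyfileobj(response, out)
    return target


# Hypothetical call from the VM's startup script (URL and directory are examples):
# fetch_artifact("http://repo.example.com/releases/myapp-1.0.war", "/opt/appserver/deploy")
```

Because the archive location is the only thing that changes between releases, publishing a new version of the application means updating the repository, not re-bundling the AMI.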

Finally, the last solution is to create AMIs containing only the OS and the minimal environment required to run an automated configuration tool such as Chef, Puppet or CFEngine. When the VM is launched, a parameter indicates which configuration to apply (list of required frameworks, application code, ...), and the VM configures itself using scripts provided by the configuration tool.
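EC2 lets you pass "user data" to an instance at launch, which is one natural carrier for the launch parameter described above. The sketch below shows how a startup script on a "Just-Enough OS" AMI might turn that parameter into the list of configurations to apply. The `key=value` format, the role names and the recipe names (written in Chef's run-list notation) are hypothetical, and handing the result to Chef or Puppet is not shown.

```python
# Hypothetical mapping from a launch parameter to the configuration to apply.
RUN_LISTS = {
    "appserver": ["recipe[java]", "recipe[tomcat]", "recipe[myapp::deploy]"],
    "dbserver": ["recipe[mysql::server]"],
}


def parse_launch_parameters(user_data: str) -> dict:
    """Parse 'key=value' lines passed to the VM at launch, ignoring comments and blanks."""
    params = {}
    for line in user_data.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            params[key.strip()] = value.strip()
    return params


def run_list_for(user_data: str) -> list:
    """Select the configuration matching the 'role' launch parameter."""
    role = parse_launch_parameters(user_data).get("role")
    if role not in RUN_LISTS:
        raise ValueError(f"unknown or missing role: {role!r}")
    return RUN_LISTS[role]


if __name__ == "__main__":
    print(run_list_for("role=appserver\nenv=prod"))
```

With this design, a single generic AMI serves every VM type: only the launch parameter and the configuration tool's scripts differ.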

How should licenses be handled?

Licenses can be migrated in three distinct ways:

- Many software vendors have agreements with Amazon that let end users move smoothly to running on EC2 instances. This model, called "Bring Your Own License" (BYOL), relies on pre-configured AMIs built in partnership with vendors that have integrated their licensing mechanisms. A license you have been using for a year and that remains valid for two more years can thus be used with certain AMIs on EC2. Partner vendors include Oracle, Sybase, Adobe, MySQL, JBoss, IBM and Microsoft.

- In addition to the BYOL model, which is very close to the classic licensing model, Amazon offers a "Pay-As-You-Go" model based on its DevPay service. Here, licenses have been designed with partners to fit the on-demand nature of EC2: license costs are charged according to the VMs' running time, that is, AMIs containing licensed software carry a surcharge on the hourly price. Support services are often included in these AMIs. Partners include Red Hat, Novell, IBM and Wowza.

- Finally, the third solution is to use the application in SaaS mode. Amazon showcases vendors whose SaaS offerings are hosted on AWS and who provide migration kits to ease the transition to this model. Subscriptions are often monthly. Examples include Mathematica, Quantivo and Pervasive.
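Choosing between BYOL and the pay-as-you-go model largely depends on how many hours the VM actually runs. The sketch below compares one year of license cost under both models; the prices are hypothetical, and it deliberately ignores the EC2 instance price itself, bundled support, and the case where a BYOL license has already been paid for.

```python
HOURS_PER_YEAR = 24 * 365  # a VM running around the clock


def break_even_hours(annual_license: float, hourly_surcharge: float) -> float:
    """Running hours per year above which a BYOL license costs less than the surcharge."""
    return annual_license / hourly_surcharge


def cheaper_model(annual_license: float, hourly_surcharge: float, hours: float) -> str:
    """Compare one year of license cost for a VM running `hours` hours."""
    payg_cost = hourly_surcharge * hours  # DevPay-style surcharge billed per running hour
    return "BYOL" if annual_license < payg_cost else "pay-as-you-go"


if __name__ == "__main__":
    # Hypothetical prices: a 1,200/year license vs a 0.25/hour surcharge.
    print(break_even_hours(1200, 0.25))               # 4800.0
    print(cheaper_model(1200, 0.25, HOURS_PER_YEAR))  # BYOL
    print(cheaper_model(1200, 0.25, 8 * 250))         # pay-as-you-go
```

With these illustrative numbers, a permanently running server favors BYOL, while a VM used only during office hours favors the hourly surcharge.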

Conclusion

Several public cloud providers can be involved in the same hybrid cloud. The reasons may be:

- financial: it can be worthwhile to run VMs with provider A and store data with provider B.

- historical: IT teams have mastered provider A's offering, and proofs of concept or a hybrid cloud are already deployed on provider A's infrastructure. Yet provider B offers technical services that are unavailable elsewhere.

- other: provider B guarantees a higher SLA than provider A.

- ...

However, each platform has its own specificities, services and proprietary APIs, so the answers presented in this article, based on AWS, may not apply to other IaaS offerings, and vice versa.

Even though some partnership initiatives (between VMware and Google, for example) encourage the development of standards, the cloud market is far from offering common services and APIs. Using multiple offerings therefore increases the need for in-house skills and creates a degree of dependency on the cloud providers. Ideally, you should limit the number of cloud providers, favoring partners able to meet future needs in terms of services and guarantees.