Good news: Moore's law is dead, long live EROOM!

For over 50 years, digital technologies have developed at a dynamic, world-changing pace thanks to the exponential growth in the computing power of microprocessors.

Gordon Moore, co-founder of Intel, summed it up in a nutshell: "the number of transistors in a semiconductor doubles every 2 years". This is what we now know as Moore’s Law. Though he first stated it in 1965, it took until 1971, with the release of the first commercial microprocessor, the Intel 4004, for it to become a near-universal truth.

But now, as surprising as it may seem, we have to face the facts and accept another universal truth: Moore's Law is dead.

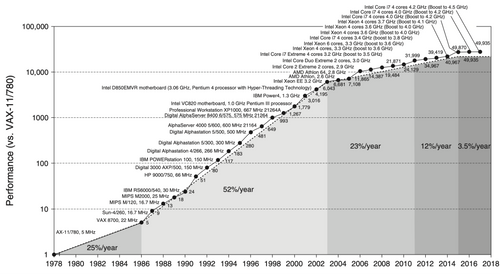

This graph from Computer Architecture: A Quantitative Approach, the reference book on the subject, shows as much:

*Growth in processor performance over 40 years*

*Source: Computer Architecture: A Quantitative Approach, sixth edition, p. 3, figure 1.1*

The graph shows that, since 2015, x86 processors (which are at the heart of PCs and servers and are the heirs of the Intel 4004) have hardly progressed in performance. We design them in increasingly complex ways, with more and more transistors, more cores, and the capacity to process longer and longer words (4, then 8, then 16, then 32 and finally 64 bits), yet for quite a while their performance has not been doubling every two years as Moore's law predicted. In a sense, Moore's law still holds: we do continue to increase the number of transistors in a processor, admittedly at a slower pace. The problem is that, in x86 processors, this no longer translates into an increase in performance.

Related laws are also running out of steam

There are two related laws that accompany Moore's law.

The first is that the power consumption of each transistor must decrease with each generation. Otherwise, as we multiply transistors, the consumption of a processor would explode: there are 22 million times more transistors in a recent processor than in the Intel 4004, which consumed 0.5 W. If it consumed 22 million times more power, a modern processor would require 11 MW, and it would take a nuclear power plant to run 100 processors!

The second law is that the unit price of a transistor must also decrease with each generation. In 2023 dollars, an Intel 4004 was worth $450. A modern processor with 22 million times more transistors would therefore be worth $9.9 billion.
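The arithmetic behind these two thought experiments can be reproduced in a few lines. This is only a sketch that multiplies the figures quoted above (the 22 million ratio, 0.5 W and $450); the constant and function names are illustrative.

```python
# Figures quoted in the article; nothing here is measured.
TRANSISTOR_RATIO = 22_000_000   # modern processor vs Intel 4004
I4004_POWER_W = 0.5             # Intel 4004 power consumption, in watts
I4004_PRICE_USD = 450           # Intel 4004 price quoted in the article


def naive_power_w(ratio: int = TRANSISTOR_RATIO) -> float:
    """Power draw if consumption per transistor had never improved."""
    return ratio * I4004_POWER_W


def naive_price_usd(ratio: int = TRANSISTOR_RATIO) -> int:
    """Price if cost per transistor had never improved."""
    return ratio * I4004_PRICE_USD


print(f"One processor:  {naive_power_w() / 1e6:.1f} MW")
print(f"100 processors: {100 * naive_power_w() / 1e9:.2f} GW")
print(f"Price:          ${naive_price_usd() / 1e9:.1f} billion")
```

With these inputs the script prints 11.0 MW for one processor, 1.10 GW for a hundred of them (roughly the output of one large nuclear reactor), and a price of $9.9 billion.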

These two laws have held true for a long time but, unfortunately, they have also run out of steam. This is why some modern x86 processors cost several thousand euros and consume up to 350 W.

What does the end of Moore's law imply?

We could panic about the end of Moore's law. But it’s actually an opportunity!

For as long as Moore's law has existed we have been able to validate Wirth's law, which states that software slows down as quickly as hardware speeds up. As the saying went, "What Intel gives you, Microsoft takes away". There is no shortage of examples illustrating Wirth's law. An Apple II from the 1980s is 5 times faster than a modern Windows computer when it comes to keystroke latency (the time it takes for a character typed on the keyboard to appear on screen). Web pages are 150 times heavier than they were 25 years ago.

The reason is quite simple: since development time is expensive, we’d rather use it to create new features than to optimize existing software. It’s a classic double whammy: software becomes bigger (with more features that are not always useful) and is very rarely optimized. As long as Moore's law held true, that didn’t much matter: a new generation of computers would arrive and speed up software that had become too slow. All we had to do was change the hardware, even if it still worked very well, which is what we did for 50 years.

The real-life end of Moore's law

Like many computer scientists, I am my family’s go-to IT guy and maintain everyone’s computers. This is why my family fleet still has machines with Intel processors from 2011 and 2012 that run macOS and are used daily for office tasks. Here is a screenshot I took while reinstalling applications to switch the computer over to a new user:

After 12 and 13 years respectively, these machines are still fully satisfactory and run modern software. A few years ago, this would have been unthinkable! Thanks to the end of Moore's Law, it is a reality.

Making hardware last and reducing the environmental footprint of digital technology

Using computers for 12 years instead of 2 or 3 as in the 2000s is not insignificant. We are all aware of climate change and of the fact that we must quickly reduce the environmental footprint of human activities, including digital technology.

For digital technology, most of this footprint comes from the manufacturing of hardware: this is where the most water, resources, and energy are consumed, and where the most greenhouse gases are emitted. This observation is even more valid in France, where the electricity that powers computers comes from very low-carbon sources, namely nuclear followed by hydroelectric dams and other renewables.

How to innovate with such constraints?

If Moore's law no longer applies to x86 processors and if we have to reduce the environmental footprint of digital technologies, can we continue to innovate? Or do we have to reduce our use of digital tech?

Yes, it is possible to continue to innovate while keeping existing hardware and without buying new machines. To do this, we can count on software optimization.

In all layers of information systems, there is lots of software that hasn’t been optimized because of Wirth's law. Some programs are fairly energy-efficient and sparsely used. Others are very poorly optimized and consume tens, hundreds or even thousands of times more resources than they should. I have seen applications accelerate by a factor of 60 thanks to a simple optimization. I have seen Machine Learning systems go 5400 times faster after good optimization. And the best example (or is it the worst?) is Matt Parker, who saw his work corrected to run... 408 million times faster!
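To give a feel for what a "simple optimization" can look like, here is a minimal, generic sketch. It is not taken from any of the cases mentioned above: the function names and data are hypothetical. Both functions count the elements of one list that appear in another, and the only change is building a set once instead of scanning a list on every lookup.

```python
import timeit


def count_common_slow(a, b):
    """O(len(a) * len(b)): each 'in' test scans the list b."""
    return sum(1 for x in a if x in b)


def count_common_fast(a, b):
    """O(len(a) + len(b)): build a set once, then use hash lookups."""
    b_set = set(b)
    return sum(1 for x in a if x in b_set)


# Hypothetical data: two overlapping ranges of integers.
a = list(range(2000))
b = list(range(1000, 3000))

# Same result, very different amount of work.
assert count_common_slow(a, b) == count_common_fast(a, b)

slow = timeit.timeit(lambda: count_common_slow(a, b), number=3)
fast = timeit.timeit(lambda: count_common_fast(a, b), number=3)
print(f"slow: {slow:.4f} s, fast: {fast:.4f} s")
```

The measured ratio depends on the machine and on the data size, and it grows as the lists get longer, which is how one-line changes can produce very large speedups.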

EROOM's Law

Just as Moore's Law was Intel's decision to invest in research and development, and therefore in innovative production plants, to support the improvement of semiconductors, we can decide to invest in software optimization. We can decide to optimize software by a factor of two every two years, which frees up half of the computing resources it uses every two years. So, every two years, we end up with twice as many resources as needed. These resources, previously wasted and now freed up, can be dedicated to new applications and new uses, all while avoiding the renewal of hardware and thus drastically reducing our digital footprint. It would also allow developers to improve their skills by doing better-quality work, with the added bonus of being able to take pride in it. Win-win.
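The compounding effect of this commitment can be sketched as follows. This is a simple model that assumes the workload stays constant and the factor of two is actually achieved every two years; the function name and parameters are illustrative.

```python
def resources_needed(years: float, halving_period_years: float = 2.0) -> float:
    """Fraction of today's resources still needed after `years`,
    assuming software gets twice as efficient every halving period
    and the workload does not change."""
    return 0.5 ** (years / halving_period_years)


for years in (0, 2, 4, 6, 8, 10):
    needed = resources_needed(years)
    print(f"year {years:2d}: {needed:6.1%} needed, {1 - needed:6.1%} freed up")
```

Under these assumptions, the same workload needs half of today's resources after two years, a quarter after four years, and about 3% after ten years.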

"EROOM" is Moore, backwards, but is also a "backronym" meaning "Effort to Radically Organize Optimization Massively" (sorry).

The Plasma methodology: EROOM at the scale of the information system

To deploy the EROOM law at the scale of the information system, we can use a methodology called PLASMA (for Portfolio-Led Application Sustainability Management). It consists of reviewing the IS application portfolio with an APM (Application Portfolio Management) approach to determine where optimization investments should be made, often on the most resource-intensive, large-scale applications whose business value is the most important. Conversely, we can prepare for the decommissioning of the most resource-intensive and least valuable applications.
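The triage described above can be illustrated with a small sketch. This is not the PLASMA methodology itself, only one possible way to encode its two rules; the metrics, thresholds, and application names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Application:
    name: str
    resource_share: float   # share of total infrastructure use, 0.0 to 1.0 (hypothetical metric)
    business_value: float   # score from 0.0 to 1.0 (hypothetical scale)


def triage(app: Application,
           resource_threshold: float = 0.10,
           value_threshold: float = 0.5) -> str:
    """Classify an application; both thresholds are arbitrary assumptions."""
    if app.resource_share < resource_threshold:
        return "leave as is"               # not resource-intensive enough to matter
    if app.business_value >= value_threshold:
        return "optimize"                  # resource-intensive and valuable
    return "prepare decommissioning"       # resource-intensive, little value


# Hypothetical portfolio, for illustration only.
portfolio = [
    Application("billing", 0.35, 0.9),
    Application("legacy-reporting", 0.25, 0.1),
    Application("intranet-wiki", 0.02, 0.4),
]

# Review the most resource-intensive applications first.
for app in sorted(portfolio, key=lambda a: a.resource_share, reverse=True):
    print(f"{app.name:18s} {triage(app)}")
```

In a real portfolio review, both scores would come from monitoring data and from discussions with the business owners rather than from fixed numbers.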

In conclusion

The whole point of the EROOM law, deployed within an IS with the Plasma methodology, is to achieve three objectives that may have seemed contradictory until now:

- The environmental footprint of the IS is divided by 4, which plays an important role while the CSRD regulation is being deployed.

- Developer teams are more satisfied: everyone is proud to do quality work, an essential point for employer branding in a tight job market.

- The IT department, by reducing its hardware budget, can allocate a larger share to new projects (Greenfield) and continue to innovate in digital technology.

This article is a translation from French of "La loi de Moore est morte et c'est une bonne nouvelle", published in June 2024. For those interested in a podcast on the same topic, please head to Green IO #50, "Is Eroom's law the future of Moore's law?"