Face recognition in RIA applications

Face recognition is exciting machine learning task which during the last decade has brought some good results, used mainly in security applications to perform person identification. However it used to be reserved only to university research and implemented only by companies specializing in this field.

But the time invested into this field by universities, companies and independent developers has brought its result in the means of several open-source libraries which any developer can use to perform image processing tasks including face recognition. Take a look at the complete overview of existing computer vision algorithms and libraries.

This article will show you how to incorporate face recognition into your web page using EmguCV image processing library and Silverlight Web Camera features. EmguCV is a .NET wrapper for OpenCV library, written in C++ by Intel and published as open-source. The method which will be used is Eigenfaces algorithm. If you are interested in the details of this algorithm, please refer to the previous article on this topic: The basics of face recognition.

This post describes quite simple application which consists of two parts - Silverlight client, which is responsible for the image capture and eventually pre-processing and the server part which performs the actual face detection and face recognition.

Capturing the image with Silverlight

Since the version 4 Silverlight gives us the possibility to use the camera to capture images or videos using the CaptureSource class. The following snippet shows how to access the camera and show directly the capture picture.

CaptureSource captureSource = new CaptureSource();

captureSource .VideoCaptureDevice = CaptureDeviceConfiguration.GetDefaultVideoCaptureDevice();

captureSource .CaptureImageCompleted += new EventHandler<CaptureImageCompletedEventArgs>(captureCompleted);

if (captureSource .State != CaptureState.Started)

{

// Create video brush and fill the WebcamVideo rectangle with it

var vidBrush = new VideoBrush();

vidBrush.Stretch = Stretch.Uniform;

vidBrush.SetSource(captureSource);

WebcamVideo.Fill = vidBrush;

// Ask user for permission and start the capturing

if (CaptureDeviceConfiguration.RequestDeviceAccess())

captureSource .Start();

}

Notice that I have to set the callback for the CaptureImageCompleted event. That should not surprise you, if you have worked with Silverlight before. Blocking of the user interface is not allowed, and so all operations depending on external sources are asynchronous. Now lets take a look at what happens when the image is actually captured:

private void captureCompleted(object sender, CaptureImageCompletedEventArgs e)

{

var image = e.Result;

var coll= new ObservableCollection<int>(image.Pixels);

switch(appMode)

{

case AppMode.Recognition:

client.RecognizeAsync(coll,image.PixelWidth);

break;

case AppMode.Training:

client.TrainFaceAsync(coll, image.PixelWidth,TextBoxLabel.Text);

}

}

As a result, we obtain WritableBitmap object, which has a simple Pixels property of type int[]. This property holds all the pixels of the image in one dimensional array created by aligning all the rows of the image to one array. Notice that each pixel is presented as Int– this means that that we have a classic 32bits representation of color for each pixel.

We take this array and we send it to the server. When the application is in training mode, we send also the label, which should be added to the Image. If the application is in recognition mode, we send just the image and we hope to receive the label describing the person on the image.

Face recognition using EmguCV on the server

As described in the previous article, face recognition has two phases: Face detection, and actual face recognition. We will use EmguCV to perform both of these tasks. EmguCV is a wrapper for OpenCV library. This basically means that we can call all the functions inside OpenCV library without the need of using constructs such as DLLImport directive and without the need of knowing the structure of the OpenCV library.

EmguCV is surely more friendly for C# developers than OpenCV (at least we do not have to treat the pointers), on the other hand the documentation is not perfect. Several times you will have to address directly the documentation of OpenCV to understand the structures and methods. For this point of view it is important to understand the algorithms which you want to use from EmguCV/OpenCV, because you will have hard-time finding the documentation to understand what each method does.

Detecting the face

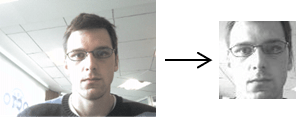

I have created a function which takes the array of pixels, and the size of the image, converts the picture to gray scale, detects the face, trims the rest of the image and resizes the resulting image to demanded size, just as shown in the following picture:

public static Image<Gray, byte> DetectAndTrimFace(int[] pixels, int iSize, int oSize)

{

var inBitmap = ConvertToBitmap(pixels, iSize);

var grayframe = new Image<Gray, byte>(inBitmap);

var haar = new HaarCascade(getHaarCascade());

var faces = haar.Detect(grayframe,1.2,3, HAAR_DETECTION_TYPE.DO_CANNY_PRUNING, new Size(30,30));

if (faces.Count() != 1)

return null;

var face = faces[0];

var returnImage = grayframe.Copy(face.rect).Resize(oSize, oSize, INTER.CV_INTER_CUBIC);

return returnImage;

}

The standard structure to treat images inside EmguCV is Image<ColorType, depth>. So from the previous code snippet you can see, that we are returning gray-scales image, where each pixel has 8 bits (byte structure). We first have to reconstruct an Bitmap image from pixels array and than create Image<Gray, byte> object from resulting Bitmap. In this step EmguCV converts automatically the image to gray-scale.

Next the face detection is performed using HaarCascade detection algrorithm. The description of this algorithm is not part this article. Once the face is detected (and if there was only one face), we copy the rectangle wrapping the face and resize it to demanded output size. It is important to use the same size, when adding the image to the training set and recognizing the face from the image.

For actual recognition EmguCV contains EigenObjectRecognizer class, which needs several arguments to be created:

- Array of images and corresponding array of labels.

- Eigen Distance Threshold – Eigenfaces algorithm measures the distance between images. This thresholds defines the maximal distance needed to classify the image as concrete person. Big values such as 5000 will make the classifer to return the closest match, even if the probability that the person has been recognized is quite small. Set it to 500 to obtain some reasonable results.

- MCvTermCriteria – is a class which represents OpenCV structure for terminating iterative algorithms. It is composed of two numbers the first being the number of iterations and the second one is demanded accuracy. For some algorithm it makes sense to iterate until the accuracy is not bellow certain threshold. For eigenfaces algorithm it is the number of iterations which is important and it will impact the number of eigenfaces being created. I have obtained some good results having around 40 eigenfaces for 100 images in the database, but this might depend on your scenario, quality and diversity of the images.

MCvTermCriteria termCrit = new MCvTermCriteria(40, accuracy);

EigenObjectRecognizer recognizer = new EigenObjectRecognizer(

trainedImages.ToArray(),

labels.ToArray(),

eigenDistanceThreshold,

ref termCrit);

Face recognition

The actual face recognition is a piece of cake if we already know how to create the recognizer. First we have to detact and extract the face from the image with the help of previously mentioned DetectAndTrimFace method. And the second step is to pass this image to the recognize method of the recognizer.

public String Recognize(int[] pixels, int size)

{

var imgFace = ImagesProcessing.DetectAndTrimFace(pixels, size,

var recognizer = ImagesProcessing.CreateRecognizer(folder, ...);

String label = recognizer.Recognize(imgFace);

if(!String.IsNullOrEmpty(label))

return label;

return "Could not recognize";

}

Adding the picture to the training set

This again is easy. Once we do the face detection and trimming, we will just see if there is a folder for the given label and if not, we will create one. One option is to use Guid as a name of file to be sure, that we wont have collisions.

[OperationContract]

public void AddToTraining(int[] pixels, int size, String label)

{

var faceImg = ImagesProcessing.DetectAndTrimFace(pixels, size, fixedFaceSize);

var directoryNames = GetDirectories();

//no such label - create directory

if (!directoryNames.Contains(label))

Directory.CreateDirectory(DIR + "\\" + label);

faceImg.Save(DIR+ "\\" + label + "\\" + Guid.NewGuid().ToString() + "_" + fixedFaceSize + ".jpg");

}

Performance

The face-detection phase is quite instantaneous. Using the HaarCascade algorithm to detect face in the image, we have a chance, that OpenCV already offers a set of HaarCascade features. The creation of these features is much more time consuming, than their usage.

The face-recognition phase is also not that time-consuming. In this example I was working with database of 100 images (10 persons, each having in average 10 images, each image being 80x80 pixels). In this setting the creation of the recognizer is almost instant. Surely the read access of images from the disk, takes more time than, the actual creation of recognizer (the eigenfaces algorithm).

If we have a database of pictures which does not change, we create the recognizer only once and than share it every-time there is a request for recognition. This of course is not possible when the image database changes. In that case we have to recreate the recognizer. There is no possibility to add new image to existing recognizer.

The bottleneck of this approach is still the network over which we have to pass the image from client to the server. To minimize the data which has to be transfer, take a look at the tip which shows you, how to perform the face detection on the client side at the end of the article.

Tricks and tips

Equalization of the image Histogram equalization is a technique to improve the contrast and adjust the brightness of the image. EmguCV gives us the possibility to call the equalize function which resides in OpenCV:

public static Image<Gray, byte> EqualizeHist(Image<Gray, byte> input)

{

Image<Gray, byte> output = new Image<Gray, byte>(input.Width, input.Height);

CvInvoke.cvEqualizeHist(input.Ptr, output.Ptr);

return output;

}

Take a look at the picture before and after equalization:

Creating additional images Generally the results are better when the training set is large. If we do not have enough images, we can try to create new ones by slight rotation or horizontal flipping. EmguCV gives us the possibility to call Flip or Rotate method on each picture:

var fliped = faceImg.Flip(FLIP.HORIZONTAL);

var rotated = faceImg.Rotate(angle, new Gray(0.3));

Of course we have to be careful only to use Horizontal flipping (faces upside-down would just confuse the recognizer) and to rotate just to some angle. More image treatment on the client side In the presented code, you can notice, that I am sending the image to the server, right after image capture, without any preprocesing. Because the default size of the image is 640x480 pixels. Knowing that each pixel is represented by 32 bit Integer this means that we are sending roughly 1200 kB to the server for recognition or training. Since images are resized later anyway, we could resize them directly before sending to the server. Resizing the images to 320*240 would reduce the size of send data to 300 kB (but of course we can do more). Unfortunately Silverlight does not have method for resizing images, however there is a great project on Codeplex, which contains several extensions methods for WritableBitmap, one of them being the resize method. Face detection on client side It is not possible to move all the face recognition process to the client side, from one simple reason: the recognizer has to, at some point, load and process all the images in the database. Performing this part on the client side, would demand us either to embark all the images into the client or transfer these images over the wire. Either of these options is not attractive for web developers. However the process of face detection could be moved to the client side, while it is not dependent on any big amount of external data. Great article which explains face detection in Silverlight is available as FaceLight project on Channel 9

Will we log to our bank using only our web camera?

Well the answer is no, face recognition is still not completely trust-worthy. Eigenface algorithm presented above is too much sensitive on the brightness changes and face-pose changes. When testing the algorithm, I obtained successful results in 60-70% of the total tries. However new algorithms have been developed combining several image processing and face recognition techniques and of course many companies are specializing in this field and develop software with much better accuracy.

Another problem is the fact that it is far too easy to present a taken photograph to the web camera and unless the system is capable of recognizing the real person from taken picture it will be cheated.

We will probably see mutual systems where the login and password will still be the main security check and face recognition will be used instead of the third confirmatory information such as RSA generated number or confirmation code.