End to end testing from the trenches with Protractor

End-to-end tests carry a bad reputation among developers: unstable, slow, costly, unmaintainable. In this article, we will try to be more pragmatic and enthusiastic about the topic. We will go beyond the basic introduction to Protractor (the most common testing framework in the Angular world) and dive into practical insights gained from real-life situations, from the technical, the methodological and the human perspectives.

What is end-to-end testing?

For Techopedia, “end-to-end testing is a technique used to test whether the flow of an application right from start to finish is behaving as expected”.

For a web application, it commonly refers to automating a user-centric flow of events (clicking and interacting across the application) and ensuring the correctness of the outcomes along the path. Those tests can be undertaken manually, but the advantage of automation is to save brain power and ensure faster feedback loops to the development teams, hence shorter Time-To-Repair intervals. As opposed to unit or integration tests, they are aimed at running on a fully built version of the application and are decoupled from low-level implementation details.

Figure 1: the test pyramid, popularized by Mike Cohn, shows various levels of tests, from ubiquitous low-level unit tests, directly targeted at the implementation, up to the rarer automated end-to-end, or GUI, tests and even manual sessions. The pyramid reflects the number of tests typically written on each layer, as well as the implementation cost.

Protractor is a very popular framework for end-to-end web application testing. In practice, a typical test takes the form of (source):

describe('angularjs homepage todo list', () => {
  it('should add a todo', () => {
    browser.get('https://angularjs.org');

    // the text typed here is the one asserted on the new list item below
    element(by.model('todoList.todoText')).sendKeys('write first protractor test');
    element(by.css('[value="add"]')).click();

    const todoList = element.all(by.repeater('todo in todoList.todos'));
    expect(todoList.count()).toEqual(3);
    expect(todoList.get(2).getText()).toEqual('write first protractor test');

    // mark the new item as done and check the number of completed items
    todoList.get(2).element(by.css('input')).click();
    const completedAmount = element.all(by.css('.done-true'));
    expect(completedAmount.count()).toEqual(2);
  });
});

What is end-to-end testing not?

As Mike Wacker puts it on the Google Testing Blog, in theory, everyone loves end-to-end tests: developers, because they don’t write them; business, because they reflect the real user experience; testers, because they act at the user level and reveal “visible” bugs.

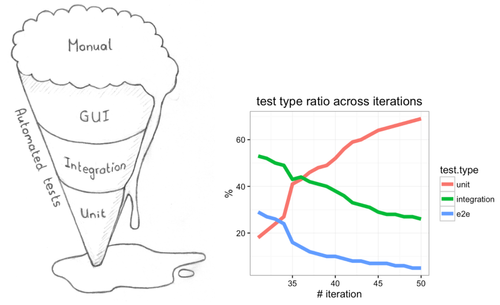

A common pitfall is therefore to rely upon those tests to catch most of the application bugs, soon turning the pyramid upside down (figure 2).

Figure 2: the inverted test pyramid (left). As yummy as the “ice cream” anti-pattern might look, it soon proves indigestible. However, any boat can be turned around (right): Wassel Alazhar posted a tale on how a team could put the pyramid back on its feet (values are in percent): we see how the relative number of end-to-end tests decreases over time, mainly due to the surge of unit tests, but also to the removal of end-to-end ones.

A real life situation

The experience shared in this article comes from various missions we have undertaken as OCTO Technology consultants. Even though these patterns are recurring, most of the practical points described in the following sections come from one customer situation, and we shall spend a couple of paragraphs sketching the context.

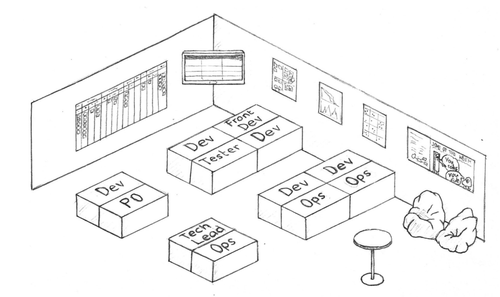

The environment there can be described as mature on an agile scale. The management levels are engaged; the team under scrutiny counts about a dozen people, co-located, mixing developers, ops engineers and a Product Owner (figure 3). The team follows a ScrumBan approach and information flows continuously among its members. To complete the picture, the team steadily delivers value on its single product.

In our story, a couple of developers have been called in to deal with an ever-growing stack of user stories lacking end-to-end tests and with increasing quality issues from the front-end user perspective.

Figure 3: the setup in which the team developing the main application studied in this article works.

The application at hand

As pictured in the figure below, the application at stake is of medium complexity. However, it encompasses several components that make it interesting from the functional testing point of view. Far from being only a data-driven web application developed upon a classic Java/AngularJS stack, its ecosystem relies on external components: a proprietary web platform (ServiceNow, used by the backend analysts), REST services, two-factor authentication (2FA), emails, QR codes. The data volume and the richness of the underlying architecture also offer challenges in terms of response time and volume.

Many aspects of this application are of course covered by different types of tests, but we shall now focus on the browser oriented end-to-end tests implementation.

End-to-end testing technical flaws

Let’s address the most common arguments raised against end-to-end testing. Although those criticisms are often based on actual observations, there are some practical approaches to reduce the burden.

e2e tests are hard to maintain

- Criticism: “Web page structure continuously evolves, thus an element defined at one position will certainly move around” - Answer: “use a robust way to describe element positions”

With Protractor, an element can be located by id (by.id), css (by.css), xpath (by.xpath), button text (by.buttonText) and many other options, often coupled to the underlying AngularJS implementation.

The common pattern is to inspect an element via a browser developer tool, copy its description anchor and paste it into an appropriate locator for action to take place (e.g. check the content of a field, click on a button, enter a value in a textfield etc.).

Although this method produces the correct behavior, the path returned by the developer tool is often intricate:

element(by.xpath('//*[@id="whatever"]/div/div[7]/p[1]/button[2]'))

The other extreme might be too simplistic:

element(by.xpath('//*[contains(text(), "submit")]'))

It is more robust to use a minimalist combination of Protractor locator functions, which better describes the intention. Use an id, if available:

element(by.id('whatever'))

Selectors can also be nested, e.g.:

element(by.id('whatever'))
  .element(by.css('div.tool-bar'))
  .element(by.buttonText('submit'))

Your fellow developers will certainly be thankful for the extra readability and sustainability.

- C: “XPaths and location descriptors are all over the test files and any refactoring is a nightmare” - A: “use page objects to have a single location to describe your page”

Instead of spreading locators all across your test files, use page objects (cf. ThoughtWorks and Martin Fowler posts). A web page, or part of a page, is associated with a business oriented object. Therefore, instead of having:

element(by.id('whatever'))
  .element(by.css('div.tool-bar'))
  .element(by.buttonText('submit'))
  .click()

One can write:

const page = new MyPage();
page.submitToolbar();

with the submitToolbar() function defined once and for all in the MyPage object definition.
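As an illustration, here is a minimal sketch of what such a MyPage object could look like. It is not taken from the project described here: getSubmitButton() is a hypothetical name, and `element` and `by` are assumed to be the globals exposed by Protractor at run time (they are injectable only so that the class can be exercised without a browser).

```javascript
// Hypothetical page object for the toolbar example used in this section.
// `element` and `by` default to the globals that Protractor exposes at run time.
class MyPage {
  constructor({ element, by } = globalThis) {
    this.element = element;
    this.by = by;
  }

  // The only place where the locator chain of the submit button is described.
  getSubmitButton() {
    return this.element(this.by.id('whatever'))
      .element(this.by.css('div.tool-bar'))
      .element(this.by.buttonText('submit'));
  }

  // Business-oriented action exposed to the specs.
  submitToolbar() {
    return this.getSubmitButton().click();
  }
}
```

If the toolbar moves in the page, only getSubmitButton() has to change; the specs calling submitToolbar() stay untouched.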

Serenity, another framework on top of Selenium (another well-known GUI test framework), provides such a feature with PageObject:

@DefaultUrl("http://localhost/whatever")
public class WhateverPage extends PageObject {

  @FindBy(id = "submit")
  WebElementFacade submitButton;

  public void submitToolBarForm() {
    submitButton.click();
  }
}

It is then easy to open and manipulate this page, from anywhere:

WhateverPage page = switchToPage(WhateverPage.class);
page.open();
page.submitToolBarForm();

Since writing tests is like writing any other piece of code, do not forget a couple of good habits:

- use a naming convention, like getXXXButton(), getYYYTextfield() or findAllZZZTableCells();

- write a simple spec upfront to assess that your page object is up to date with the actual tested page. Having the corresponding test fail early will make analysis easier.

- C: “Tests output are unreadable: how can I know the reason why a test did fail?” - A: “Use tailored matchers and meaningful messages”

Matcher functions compare an evaluated value with an expected one, making a test fail if the condition is not met. For example:

expect(myArray.length).toBe(42);
expect(email).not.toBeUndefined();

A first improvement is rarely used: it consists in adding an extra comment parameter, which will provide more context in case the condition does not pass. For example, the values actually stored in myArray:

expect(myArray.length).toBe(42, 'myArray should contain 42 values but contains: ' + myArray);

Jasmine is the underlying testing framework. It provides default matchers, but those are limited. Thankfully, the library can be extended with the versatile Jasmine-Matchers library. The following example will fail with the appropriate message if myArray is not an array or is not of the asserted size:

expect(myArray).toBeArrayOfSize(42);

Many more matchers are available, even though you may need one which is not present there.

- A’: “Create your own custom matchers”

For example, you want to compare arrays of time strings, but you want them to match within a 5-second window. If the comparison has to be done only once, you could write a series of assertions:

- check the returned variable is an array;

- check the sizes of both arrays are the same;

- check the returned variable contains strings in a meaningful time format;

- check one by one the values to see if the delta in seconds is lower than 5.

However, your actual business test becomes intricate, and hardly reusable. Fortunately enough, it is possible to create your own matcher, leveraging lodash and moment.js libraries:

jasmine.addMatchers({
  toBeEqualTimesWithin5Sec: () => {
    return {
      compare: function (actual, expected) {
        // do we have an array?
        if (!_.isArray(actual)) {
          return {pass: false, message: 'is not an array: ' + actual};
        }
        // are arrays the same size?
        if (actual.length !== expected.length) {
          return {pass: false, message: 'sizes are not coherent. Actual: ' + actual.length + ' vs expected: ' + expected.length};
        }
        // does the array contain time strings?
        // are both arrays times close enough?
        …
        // finally return true
        return {pass: true, message: 'dates are aligned'};
      }
    };
  }
});

And your test will simply become:

expect(myArray).toBeEqualTimesWithin5Sec(['12:34:55', '15:42:00']);
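To make the comparison logic more concrete, here is one possible way to write it as a standalone helper. This is a sketch and not the implementation used in the project: it deliberately avoids lodash and moment.js, assumes times are formatted as 'HH:mm:ss', ignores wrap-around at midnight, and the names toSeconds and compareTimesWithin are hypothetical.

```javascript
// Converts an 'HH:mm:ss' string into seconds since midnight, or NaN if malformed.
function toSeconds(time) {
  const match = /^(\d{2}):(\d{2}):(\d{2})$/.exec(String(time));
  if (!match) return NaN;
  const [hours, minutes, seconds] = match.slice(1).map(Number);
  if (hours > 23 || minutes > 59 || seconds > 59) return NaN;
  return hours * 3600 + minutes * 60 + seconds;
}

// Returns a Jasmine-style result object: { pass, message }.
function compareTimesWithin(actual, expected, toleranceSeconds = 5) {
  if (!Array.isArray(actual)) {
    return { pass: false, message: 'is not an array: ' + actual };
  }
  if (actual.length !== expected.length) {
    return { pass: false, message: `sizes differ. Actual: ${actual.length} vs expected: ${expected.length}` };
  }
  for (let i = 0; i < actual.length; i++) {
    const a = toSeconds(actual[i]);
    const e = toSeconds(expected[i]);
    if (Number.isNaN(a) || Number.isNaN(e)) {
      return { pass: false, message: `not a time string at index ${i}: ${actual[i]} / ${expected[i]}` };
    }
    // Fail as soon as one pair is further apart than the tolerance.
    if (Math.abs(a - e) > toleranceSeconds) {
      return { pass: false, message: `more than ${toleranceSeconds}s apart at index ${i}: ${actual[i]} vs ${expected[i]}` };
    }
  }
  return { pass: true, message: 'times are aligned' };
}
```

Keeping the logic in a plain function has a side benefit: the compare function of a custom matcher can simply delegate to compareTimesWithin(actual, expected, 5), and the helper can be unit tested without starting a browser.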

Finally, a matcher should come with its own test suite to assert its completeness as well as to document its behavior. And never forget: “Always code as if the guy who ends up maintaining your code will be a violent psychopath who knows where you live. Code for readability.” (John Woods, 1991)

e2e tests are slow

Frontend tests are notoriously slow. The causes lie both in the actual browser rendering and in library performance. It is not uncommon to see real-life situations get out of hand, with full suites taking up to 9 hours to run! However, the burden can be reduced.

As tempting as an “improvement” idea may sound, one shall always remember that any optimisation effort shall be guided by upfront measurements.

- C: “Searching elements in the DOM using xpath is very slow” - A: “Use id attribute. Finding by id is much faster”

Searching the DOM for a particular element with XPath is slower than using CSS selectors, which is itself slower than retrieving an element by its id. Here are the three ways to achieve the same action:

element(by.xpath('//*[@id="bar"]')) // based on evaluate
element(by.css('#bar'))             // based on querySelector
element(by.id('bar'))               // based on getElementById

But the last one is nearly 60% faster than the CSS selector and 100% faster than XPath! You can test it yourself here. Furthermore, ids tend to be more stable over time than style selectors or XPaths, which can change at the whim of the business. And if you’re not convinced yet, while getElementById is generally heavily optimized in all browsers, XPath is not always well implemented, leading to different behaviours in different browsers. So always prefer the id over the other solutions.

- C: “Tests are invaded by browser.sleep(nnn) statements, slowing down any test suite” - A: “La programmation sans sleep!” (“Programming without sleep”, but that sounds better in French…)

browser.sleep(3000) will make the browser hold for 3 seconds. Such calls are often made to wait for an element to be visible. Although the solution seems appealing, it soon clutters the whole code, as the timings set into the sleep functions try to capture the worst case scenario.

Using JavaScript promises is the default way to execute a piece of asynchronous code and return the result (or an error) as soon as it is completed. Protractor makes extensive use of the feature. For example, element(by.id('my-id')) will not return the element itself, but a promise to be completed. If we have to wait for a dynamic element to be displayed, we can write a utility function that returns a promise with the element, waiting first for the element to be present, then visible.

const waitForVisibleByLocator = (locator, delay) =>
  browser.wait(() => element(locator).isPresent(), delay)
    .then(() => browser.wait(() => element(locator).isDisplayed(), delay))
    .then(() => element(locator));

And the function being called in a test:

waitForVisibleByLocator(by.id('my-id'))
  .then((el) => { /* assertions */ });
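Protractor also ships ready-made wait conditions in protractor.ExpectedConditions, whose visibilityOf condition covers both presence and visibility. As a hedged alternative to the helper above, the sketch below relies on it. The name makeWaitForVisible and the 5000 ms default are choices made for this illustration; `browser`, `element` and `protractor` are assumed to be the globals Protractor exposes, injectable here only so the helper can be exercised outside a browser.

```javascript
// Builds a wait helper on top of Protractor's ExpectedConditions.
// By default the Protractor globals are picked up from globalThis.
const makeWaitForVisible = ({ browser, element, protractor } = globalThis) =>
  (locator, timeoutMs = 5000) => {
    const finder = element(locator);
    const EC = protractor.ExpectedConditions;
    // browser.wait(condition, timeout, message) polls until the condition holds
    // or fails with the given message once the timeout is reached.
    return browser
      .wait(EC.visibilityOf(finder), timeoutMs, 'element not visible: ' + locator)
      .then(() => finder);
  };
```

In a spec, the helper would be built once with makeWaitForVisible() and then used as waitForVisible(by.id('my-id')).then((el) => { /* assertions */ }).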

- C: “My tests need to switch between a list of logged-in users, but each login step slows down the whole execution. What can I do?” - A: “Use one browser instance per user.”

Some suites require logging in back and forth between different users. If the login process is not smooth (e.g. your in-house application commonly takes up to 15 seconds to log in), most of the time is spent on the login page.

Although speeding up the login process can be a sound action, it might also be hard, depending on legacy systems, and of little end-user value (“my users log in once a day on the application and stay in for the full working day”).

To tackle the issue from the testing side, it is for example possible to attach one browser per user name, which shall either already exist and be logged in, or not yet be alive and thus need to be created. For example:

browsers[user.name] = browser.forkNewDriverInstance();
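Wrapped into a small helper, the look-up-or-create logic could look like the sketch below. The names browserFor, forkBrowser and login are hypothetical: the two callbacks are supplied by the test code, which keeps the helper independent from how the application actually logs a user in.

```javascript
// One browser instance per user name, created lazily and then reused.
const browsersByUser = new Map();

// `forkBrowser` creates a new browser instance; `login` performs the login steps on it.
// Both are hypothetical callbacks supplied by the test code.
function browserFor(user, forkBrowser, login) {
  if (!browsersByUser.has(user.name)) {
    const instance = forkBrowser();
    login(instance, user);
    browsersByUser.set(user.name, instance);
  }
  return browsersByUser.get(user.name);
}
```

In a spec, this could be called as browserFor(user, () => browser.forkNewDriverInstance(), loginAs), where loginAs is an assumed helper filling in the login form. Each forked instance carries its own element function, so subsequent lookups go through instance.element(...) rather than the global element.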

- C: “I have many specs files and Google tells me protractor can execute them in parallel. Why isn’t that so simple?” - A: “That’s a route, but beware of dependencies and concurrency issues.”

Protractor can be configured to run tests in parallel. For example, to spread a suite over 4 browser instances, one can configure:

{
  shardTestFiles: true,
  maxInstances: 4,
  browserName: 'chrome'
}

However, a perfectly decoupled world is not always reached and several issues can arise:

- design without dependencies can be hard (imagine testing email change, password edition and 2FA token reset in parallel for a single user);

- at login, the same 2FA token for a given user may end up being used in concurrent sessions;

- scaling is rarely linear, and will at best be limited by the duration of the longest suite.

Of course, all these issues can be addressed by a careful application architecture, extensive use of mocking or manual decoupling of the full test set into functionally independent suites run concurrently.

- C: “Are there other ways to speed up the overall e2e testing process for Continuous Integration?” - A: “Definitely yes, but tradeoffs are unavoidable”

Yes, and there are no limits to your imagination. However, besides the points mentioned above, there are a few common ways to decrease the feedback loop latency at a low cost:

- as tempting as it might look to measure testing quality by the number of tests, try to limit them: don’t assert the same mechanism in different tests;

- use different test suites (smoke, perf, total etc.) with lower functional coverage but with shorter running time: a fast suite can be run continuously, while the more demanding ones are executed only twice a day.

e2e tests are flaky

- C: “My tests are flaky and hard to reproduce. WTF?” - A: “Sort out root causes and use protractor-flake”

The topic is not new and, as Simon Stewart stated in his 2009 blog post, “It's very seldom because you've uncovered a bug in the test framework.” While having incremental tests (each action is accompanied by an assertion) certainly helps to nail down the origin of the problem, the root cause can lie in external services or other uncontrolled dependencies.

The idea of running the failed tests a second time is not the most appealing, from an intellectual point of view, but it might solve this type of problem. Protractor-flake is a tool that will conveniently relaunch the failing suites only:

protractor-flake --max-attempts=3 --color=magenta -- --suite=all

NB: to work, protractor-flake needs the terminal reporter to be activated and to dump the stack traces.

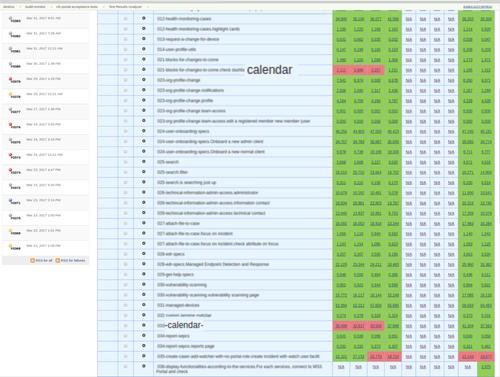

- C: “How can I grasp a global picture of which tests are repeatedly failing?” - A: “Use Jenkins test-results-analyzer plugin”

End-to-end tests do not run instantaneously, and the whole suite is typically executed once or a couple of times a day on a Jenkins server. It can be handy to compare over time which tests have succeeded or not. The popular test-results-analyzer plugin addresses this issue by offering a time-based comparison, as shown in figure 4. One can immediately see that two tests have been failing over the last three executions, and both their names refer to “calendar”. This information, combined with the fact that they were executed at the beginning of a month, naturally points to the cause.

- C: “My application should be tested on multiple browsers, but it appears not to work as easily as in the book. WTF?” - A: “Go multi-browser early”

Via the multiCapabilities option, Protractor offers the possibility to test multiple browsers. As it looks so trivial, the feature is often disregarded in favor of faster test execution (“it’s trivial, we’ll activate it later, when we are in production”).

As trivial as it may seem, slight differences exist between browsers (e.g. the TAB character might be sent after filling a text field in some Firefox versions). The situation can be expensive to solve if the multi-browser capability is added only once hundreds of tests have been written and some of them fail repeatedly for unexplained reasons.

The solution is of course to activate the feature early in the development process, even though it will not be called upon at every test execution.

And so much more can go South with e2e testing!

- C: “Can I deal with two factor authentication with google token?” - A: “Yes, to a certain extent”

Two-factor authentication (2FA), with a time/seed-based token associated with a classic password, has become a de facto standard. The second factor is initialized once with a random seed (typically exchanged via an email and a QR code) and is usually generated at login time by a smartphone.

With these channels, the login process has therefore become more complex to hack, but also to automatically test.

However, if the second factor is for example based on the classic time-based one-time password algorithm, such as the one used by Google, libraries are publicly available with an implementation.
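To show what such a library does under the hood, here is a minimal sketch of the time-based one-time password algorithm (RFC 6238, built on the HOTP algorithm of RFC 4226), written with Node's built-in crypto module only. It assumes the common defaults (HMAC-SHA1, 30-second steps, 6 digits) and a secret already available as raw bytes; the function names hotp and totp are choices made for this illustration. In a real suite, a maintained library remains the safer option.

```javascript
const crypto = require('crypto');

// HOTP (RFC 4226): HMAC-SHA1 over the 8-byte big-endian counter, then dynamic truncation.
function hotp(secret, counter, digits = 6) {
  const message = Buffer.alloc(8);
  message.writeBigUInt64BE(BigInt(counter));
  const digest = crypto.createHmac('sha1', secret).update(message).digest();
  // The low 4 bits of the last byte select which 4 bytes of the digest are used.
  const offset = digest[digest.length - 1] & 0x0f;
  const binary = digest.readUInt32BE(offset) & 0x7fffffff;
  return String(binary % 10 ** digits).padStart(digits, '0');
}

// TOTP (RFC 6238): HOTP applied to the number of elapsed time steps.
function totp(secret, epochSeconds = Math.floor(Date.now() / 1000), stepSeconds = 30, digits = 6) {
  return hotp(secret, Math.floor(epochSeconds / stepSeconds), digits);
}

// RFC test secret, 59 seconds after the epoch:
// totp(Buffer.from('12345678901234567890'), 59) → '287082'
```

Secrets handed out to authenticator apps are usually base32-encoded, so they have to be decoded into bytes before being passed to totp(). The resulting code can then be typed into the login form like any other text field.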

- C: “Some processes, like profile change or password reset, require email interactions. Is it possible to include them in the tests?” - A: “External interactions can take place, although it comes with a price.”

A real system comes with email exchanges, QR code images etc. Thanks to libraries available on npmjs.org, it is possible to scan a mailbox, or decode a QR code with an equivalent of a cell phone scanner.

However, such connectors come with a price at development time and at execution time (think of allowing delays for emails to be delivered), as well as with stability risks (the more dependencies on external systems, the more failure points).

- C: “How can I interact with a third party's web application, not written with e2e testing in mind?” - A: “Write an independent (tested) library to interact with it, via a REST API or browser navigation.”

In one of the examples underlying this article, the custom application is strongly dependent on the service management enterprise software ServiceNow. To fully test the in-house web application, some activities must be handled through this third-party web application.

If no REST API is available, one can also use the Protractor driver to navigate and simulate the analysts' workflows. The goal is not to test the third-party application thoroughly, but to have the minimum required interactions. An independent library is then to be written to interact with it.

As the third-party solution can be upgraded and changed without prior notice, some tests are also to be written to guard the stability of your library.

The methodology perspective

Until now, we have covered vast lands of technical challenges to overcome in the end-to-end testing of a complex solution. If we believe that we can push the frontier of testing, it is also important to take into account design and methodology. Testing shall not be done in an isolated box; it shall be conducted hand in hand with the development process. This journey is a matter of design choices, team organization and methodology.

Design for testing

“A software system can best be designed if the testing is interlaced with the designing instead of being used after the design.”

A.J. Perlis NATO Software Engineering Conference, 1968

- C: “As a test developer, I face so many different manners to locate elements on a web page. How could we build a more consistent experience?” - A: “Define guidelines, improve them and include e2e testing early on in the development of a functionality.”

We have seen in the previous sections how few browser manipulations are out of reach of Protractor. But being able to do anything is not a reason for willingly doing anything. The test source code must be coherent, modular, light, readable and sustainable.

Coding guidelines shall take into account the testing perspective, writing for example id attributes in camel case, or applying a predictable logic for naming classes. Based on our observations, JavaScript code often lacks the culture of conventions found, for example, in Java. Tools such as jslint can be used in continuous integration to ensure that some rules are followed by the team. However, communication among developers and a minimalist documentation remain the most efficient channels.

- C: “Sometimes tests are hard to write on a feature and the user story exceeds its initial estimation.” - A: “Guess what? Embed the testing perspective in the estimation!”

As obvious as this point may appear, tests are scarcely evaluated during a User Story estimation (Three Amigos or sprint planning). A simple question shall be asked when planning: “do we foresee any particular challenge to test this feature? How much do we think it can cost?” This question can stimulate a discussion on the story from an original perspective and shake up design choices.

- C: “Our designers followed some business requirements and have built pretty custom UI widgets for option selection or a phone number input field. How can I test those non-standard widgets?” - A: “Don’t reinvent the wheel and share the testing costs with stakeholders.”

“Let’s imagine a super cool new widget to enter a phone number. Based on the country code, it will automatically format the text string, following any fashion one can enter her number. What a cool way to enter a phone number we are going to invent!” Fueled by imagination and positive energy, new widgets are drawn on the board, which will “revolutionize their users’ experience”. Designers are hired to shape those widgets, often outside the development team. The latter will then be in charge of embedding the revolutionary components into the application, testing them... and fixing them.

And then, rules turn out to be more complex than first thought, bugs appear, execution is slow. Unexpected behaviors happen when copy/pasting or typing too fast… And a simple phone number input field has burned 6 weeks of work, to be seen once in the whole registration process.

Besides a poor evaluation of cost/value, we see with this example how testing has to be part of the initial widget design and not be relegated to an afterthought, and how the “Keep It Simple, Stupid” principle can be grounded in a testing perspective.

Shortening the feedback loop

- C: “Even with all the above principles in action, how can we make the e2e suite run faster?” - A: “The fastest test is the one which is not executed.”

Throughout this article, we have seen many hints and tips to make web e2e tests run faster. Without reducing the actual testing coverage, there are a couple of major directions where huge savings can be claimed:

- beware of the ice cream pattern (fig. 2)! Features shall be tested by unit and integration suites wherever possible, as they cost less to write, are easier to refactor and can be orders of magnitude faster to run. In the same spirit, do not use e2e tests to assess the underlying REST API.

- Don’t Repeat Yourself! Once more, a software craftsman motto can be applied to writing tests. Although it is tempting to copy/paste test suite files, only to make a few partial changes, it will prove to be costly in the longer term. A more insidious pattern is to test the same component, derived through multiple pages.

Although these two pieces of advice might seem obvious, experience shows how these anti-patterns are repeated again and again in multiple projects.

- C: “What shall we test?” - A: “Use a comb coverage”

Obviously not all workflows, with all their detours and particularities, can be explored by functional tests. They would become too expensive to build, run and maintain.

The “comb pattern” strategy consists in:

- Aiming for the largest low depth testing, where for example all pages are at least assessed for their main component presence.

- Diving deeper into the most business-critical web site areas (e.g. the web registration process, where customers shall not be lost at any cost) and trying to cover at least once every meta-component or workflow type.

- If a bug appears in acceptance or production, the opportunity of an e2e test shall be assessed (the feature was not as trivial as the developers first thought).

Team Organization

Figure 5: an organization segregated by responsibility (top) leads to slow feedback loops and ultimately to a long time to market (TTM). If Dev/Ops proximity is well accepted nowadays, the testing culture shall also be shared among the team (bottom).

The last topic of this article, but maybe the most important one: shaping the team to make testing part of the software development cycle, from idea to release.

The boundary between development and operations has been blurred by the DevOps movement (or, if it has not been blurred in your IT department, at least the buzz has certainly made its way through). Sadly enough, a wall still exists between “developers” and “testers” in many organisations, where the latter can only aspire to the level of the former.

“You build it, you run it!” said Amazon CTO Werner Vogels. And what about “you build it, you test it”?

In a small team delivering working software through short iterations, the developer's work is by essence versatile. If no one contests that unit testing is done on par with feature implementation, by the same person (or pair), why should end-to-end testing be pushed out of the cycle? Why should it be sent to a junior developer or outsourced to the other side of the world?

We believe that e2e test development is to be a crucial part of the agile team's work, with any skilled enough member taking her share of the effort. The full testing pyramid then becomes a team responsibility, bugs are less often filed as “defects” and the final product quality is pursued as a whole.

Heading Further

- Alexander Tarlinder, through his book “Developer Testing: Building Quality into Software” or a podcast interview on IEEE software engineering radio

- “Agile Testing: A Practical Guide for Testers and Agile Teams” by Lisa Crispin and Janet Gregory