Edge Computing: learn to delegate

While companies' interest in the Internet of Things no longer needs to be proven, the field still gives experts a hard time, particularly regarding security and architecture. The multiplication of data sources forces a rethink of network architecture. As Satya Nadella (Microsoft CEO) said on stage in 2017: "When I joined Microsoft in 1992, all Internet traffic was 100 gigabytes a day. Today it is 17.5 million times that amount... per second!" And we are only at the beginning of this exponential growth. Hence the debate over whether data should be processed in the Cloud (or, more generally, centrally) or as close as possible to the end nodes of the network.

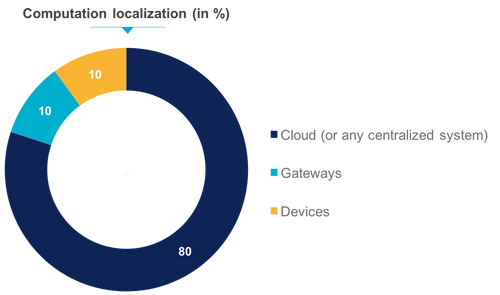

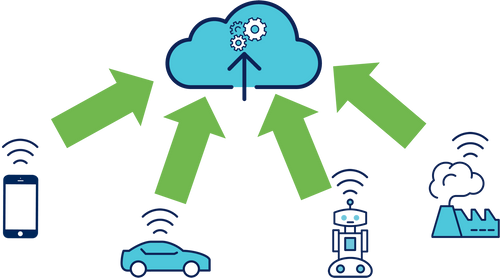

In a cloud-centric architecture, the computing capabilities of remote virtual machines are exploited to the full to provide end users with new services. However, when data sources become extremely numerous (IoT devices, smartphones, third-party applications, etc.), the network tends to become congested and the low latency required by IoT applications can no longer be guaranteed.

This is where Edge computing comes in. According to HPE, Edge computing is the practice of processing data near the edge of your network, where the data is generated, instead of in a centralized data warehouse. One may then wonder whether the Cloud and its enormous computing power will disappear in favor of lighter servers that merely redistribute data to end users.

Let's take two revealing examples to highlight the differences between these architectures:

- Devices mounted on every street lamp in New York City (~250,000). Imagine that these devices are equipped with a light sensor and a motion sensor.

- 4K surveillance cameras in a city like London (~500,000). Imagine that we want to store the video stream only when a camera detects motion. We would also like an alert to be raised whenever a camera recognizes the face of a person wanted by the police, so that the person can be located.

NB: Yes, there appear to be twice as many cameras in London as streetlights in New York...

The architectures

The centralized architecture in the Cloud

In this architecture, every node in the network communicates directly and at regular intervals with the central node located in the Cloud.

In the first example, the streetlights transmit to the Cloud, every second, the ambient brightness and whether they have detected movement. The Cloud can then, for example, extract the brightness value from each streetlight's raw data. Finally, by comparing this value with the thresholds it knows, it can send the switch-on command to the relevant streetlights. The strength of the Cloud, however, is that it is quite simple to go further.

Suppose New York City Hall realizes that turning on streetlights (even LED ones) whenever the ambient light dims does not save enough energy. Since the devices on the street lamps have motion sensors, it suffices to extract the motion signal from the raw data and light the lamps only when movement has been detected.

This avoids lighting areas where nobody is present. The improvement is simple to implement because the motion data is already sent to the Cloud, which only has to extract and use it.
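To make this concrete, here is a minimal Python sketch of the Cloud-side logic, assuming a hypothetical `Reading` message and an arbitrary 10 lux threshold. It shows why City Hall's improvement is cheap in a cloud-centric design: the motion flag is already in the data, so the new rule is one extra function on the server and nothing changes on the lamps.

```python
from dataclasses import dataclass

# Hypothetical message each streetlight sends to the Cloud every second.
@dataclass
class Reading:
    lamp_id: str
    brightness_lux: float  # ambient light level
    motion: bool           # True if the motion sensor fired during the last second

DARK_BELOW_LUX = 10.0  # hypothetical threshold, known only to the Cloud

def rule_brightness(r: Reading) -> bool:
    """Initial rule: light the lamp whenever it is dark."""
    return r.brightness_lux < DARK_BELOW_LUX

def rule_brightness_and_motion(r: Reading) -> bool:
    """City Hall's improvement: light the lamp only if it is dark AND someone is there."""
    return rule_brightness(r) and r.motion

def cloud_commands(readings, rule):
    """Cloud-side processing: turn a batch of raw readings into per-lamp switch commands."""
    return {r.lamp_id: rule(r) for r in readings}

if __name__ == "__main__":
    batch = [Reading("lamp-1", 3.0, False), Reading("lamp-2", 4.0, True), Reading("lamp-3", 50.0, True)]
    print(cloud_commands(batch, rule_brightness))             # {'lamp-1': True, 'lamp-2': True, 'lamp-3': False}
    print(cloud_commands(batch, rule_brightness_and_motion))  # {'lamp-1': False, 'lamp-2': True, 'lamp-3': False}
```

Swapping `rule_brightness` for `rule_brightness_and_motion` is a server-side deployment only; the lamps keep sending exactly the same messages.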

However, given the number of devices and events (250,000 per second), it is hard to believe that the switch-on command resulting from processing each of these raw messages can be instantaneous. Within a short period of time, each device's raw data must traverse the network, be filtered, stored and analyzed, and then a switch-on command must (or must not) be sent back. At this scale, there will necessarily be non-negligible latency.
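A back-of-the-envelope calculation gives an idea of the volume involved. The sketch below uses the figures from the text (250,000 lamps, one message per second) and a hypothetical 64-byte message size; it only counts what the Cloud must ingest, not the latency itself.

```python
# Load on the Cloud in the centralized streetlight scenario.
# From the text: ~250,000 lamps, one message per lamp per second.
# ASSUMPTION: 64 bytes per message (payload plus protocol overhead), illustrative only.
LAMPS = 250_000
MESSAGES_PER_LAMP_PER_S = 1
BYTES_PER_MESSAGE = 64  # hypothetical
SECONDS_PER_DAY = 86_400

def daily_load(lamps=LAMPS, rate=MESSAGES_PER_LAMP_PER_S, size=BYTES_PER_MESSAGE):
    """Return (messages per second, messages per day, bytes per day)."""
    msgs_per_s = lamps * rate
    msgs_per_day = msgs_per_s * SECONDS_PER_DAY
    bytes_per_day = msgs_per_day * size
    return msgs_per_s, msgs_per_day, bytes_per_day

if __name__ == "__main__":
    per_s, per_day, volume = daily_load()
    print(f"{per_s:,} messages/s")        # 250,000 messages/s
    print(f"{per_day:,} messages/day")    # 21,600,000,000 messages/day
    print(f"{volume / 1e12:.2f} TB/day")  # 1.38 TB/day
```

Each of these more than 20 billion daily messages has to be received, filtered, stored and analyzed before a command can go back down.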

In the second example, the 4K video streams of 500,000 surveillance cameras must be sent continuously to the Cloud. Clearly, neither the network nor the infrastructure can bear this load. In practice, gateways are used as buffers that in turn forward the data they have stored. Once the data reaches the Cloud, it can be analyzed in detail to determine, for each camera, whether movement has been detected. If so, the stream must be recorded, and the Cloud must determine whether a person wanted by the police has been spotted and, if so, transmit their position.
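The same kind of estimate for the cameras shows the order of magnitude of the uplink the Cloud would need. It assumes a hypothetical 15 Mbit/s per compressed 4K stream; actual bitrates depend heavily on the codec and the scene.

```python
# Aggregate uplink if every camera streams continuously to the Cloud.
# From the text: ~500,000 4K cameras.
# ASSUMPTION: 15 Mbit/s per compressed 4K stream (hypothetical).
CAMERAS = 500_000
MBIT_PER_S_PER_STREAM = 15
SECONDS_PER_DAY = 86_400

def continuous_uplink(cameras=CAMERAS, mbps=MBIT_PER_S_PER_STREAM):
    """Return (aggregate rate in Tbit/s, bytes transferred per day)."""
    total_mbps = cameras * mbps                                  # aggregate rate in Mbit/s
    tbps = total_mbps / 1_000_000                                # Mbit/s -> Tbit/s
    bytes_per_day = total_mbps * 1_000_000 / 8 * SECONDS_PER_DAY  # bits/s -> bytes/day
    return tbps, bytes_per_day

if __name__ == "__main__":
    tbps, volume = continuous_uplink()
    print(f"{tbps:.1f} Tbit/s")           # 7.5 Tbit/s
    print(f"{volume / 1e15:.0f} PB/day")  # 81 PB/day
```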

The Cloud can therefore perform fairly complex processing on large volumes of data, and if the Mayor of London no longer wanted to trigger recording on detected movement but on a schedule instead, this change would be quite simple to implement.

In this case, it is even more obvious that latency will be very high, given the time the data takes to travel and the time needed to process it with fairly complex algorithms (Computer Vision, Machine Learning...).

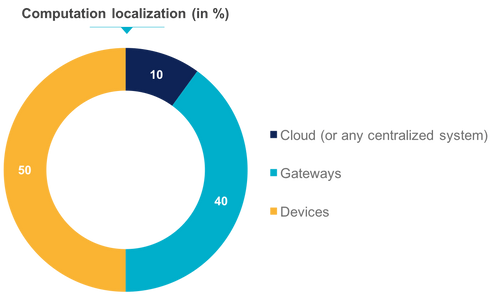

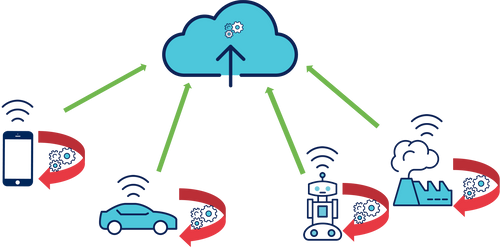

Distributed architecture (Edge computing)

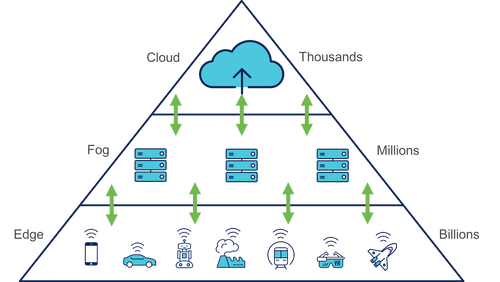

Here, the Cloud receives only the results of the computation steps performed at the ends of the network, and can in turn perform further computations or simply act as a relay to end users. Delegating work to devices on the periphery of the network reduces the amount of data to be sent, which decongests the communication channels and reduces latency. It also lowers the load on the server. A device maker's job is to strike the balance between battery life, the device's computing power and communications. Today, devices can embed more and more computing power because these components consume less and less energy and their prices are becoming affordable.
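To put a rough number on this reduction, take the camera example again. The sketch assumes a hypothetical 15 Mbit/s per stream and, once motion detection runs on the camera, an average of 5% of the time spent streaming; both figures are illustrative, not measurements.

```python
# Rough effect of edge-side filtering on the camera uplink.
# From the text: ~500,000 cameras. ASSUMPTIONS (illustrative only):
#   - 15 Mbit/s per compressed 4K stream
#   - with motion detection on the camera, each one streams 5% of the time on average
CAMERAS = 500_000
MBIT_PER_S_PER_STREAM = 15  # hypothetical
ACTIVE_FRACTION = 0.05      # hypothetical

def uplink_tbps(active_fraction: float) -> float:
    """Average aggregate uplink in Tbit/s when cameras stream this fraction of the time."""
    return CAMERAS * MBIT_PER_S_PER_STREAM * active_fraction / 1_000_000

if __name__ == "__main__":
    cloud_centric = uplink_tbps(1.0)     # every camera streams all the time
    edge = uplink_tbps(ACTIVE_FRACTION)  # cameras stream only when they detect motion
    print(f"cloud-centric: {cloud_centric:.3f} Tbit/s")
    print(f"edge:          {edge:.3f} Tbit/s")
    print(f"reduction:     {cloud_centric / edge:.0f}x")
```

Under these assumptions the saving is simply the inverse of the fraction of time a device actually has something relevant to send.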

In the first example, the streetlights could store the brightness thresholds locally and decide by themselves when to turn on or off. This reveals a first constraint of the Edge computing architecture. Suppose that, for one reason or another, we want to change the brightness thresholds. The solution is to set up a centralized device management system that can send data to the devices. This system must be located in the Cloud and could also make it possible to configure different thresholds for each lamppost if desired. Processing is no longer done remotely but locally; only the configuration and business rules are periodically deployed from the Cloud.
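A minimal sketch of this split, with hypothetical names throughout: `EdgeLamp` runs on the streetlight and decides locally, while `DeviceManager` stands in for the Cloud's device management system and pushes a fleet-wide default plus optional per-lamp overrides. Using two thresholds (switch on below one value, off above a higher one) is an assumption made to avoid flickering around a single value.

```python
from dataclasses import dataclass, field

# Fleet-wide defaults (hypothetical values). The gap between the two thresholds
# (an assumption) keeps a lamp from flickering when the light hovers around one value.
DEFAULT_CONFIG = {"on_below_lux": 10.0, "off_above_lux": 20.0}

@dataclass
class EdgeLamp:
    """Runs on the streetlight: decides locally with thresholds pushed from the Cloud."""
    lamp_id: str
    config: dict = field(default_factory=lambda: dict(DEFAULT_CONFIG))
    is_on: bool = False

    def apply_config(self, new_config: dict) -> None:
        # Called when the device management system deploys new thresholds.
        self.config.update(new_config)

    def step(self, brightness_lux: float) -> bool:
        # Local decision: no round trip to the Cloud is needed.
        if not self.is_on and brightness_lux < self.config["on_below_lux"]:
            self.is_on = True
        elif self.is_on and brightness_lux > self.config["off_above_lux"]:
            self.is_on = False
        return self.is_on

class DeviceManager:
    """Stands in for the Cloud's device management system (hypothetical)."""

    def __init__(self) -> None:
        self.overrides: dict = {}

    def set_override(self, lamp_id: str, **thresholds: float) -> None:
        self.overrides.setdefault(lamp_id, {}).update(thresholds)

    def push(self, lamps) -> None:
        # Deploy the default configuration, with any per-lamp overrides on top.
        for lamp in lamps:
            lamp.apply_config({**DEFAULT_CONFIG, **self.overrides.get(lamp.lamp_id, {})})

if __name__ == "__main__":
    lamps = [EdgeLamp("lamp-1"), EdgeLamp("lamp-2")]
    manager = DeviceManager()
    manager.set_override("lamp-2", on_below_lux=30.0, off_above_lux=40.0)
    manager.push(lamps)
    print([lamp.step(25.0) for lamp in lamps])  # [False, True]
```

The same 25 lux reading leads to different decisions because `lamp-2` received its own thresholds, which is the per-lamppost configuration described above.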

Let's go a little further. We can delegate motion detection to the device on the streetlamp, which then decides alone whether to turn the lamp on or off and only has to transmit its state (and only when that state changes) to the centralized management system. This adds a security constraint. In the cloud-centric architecture, the device regularly sends raw (potentially encrypted) data, which is analyzed before commands come back down through secure channels. If the device is compromised, it simply stops receiving its commands and becomes unusable; a simple intervention then restores it to its normal operating state. When the device has computing capabilities, decides by itself when to light up and only reports its state when it changes, an attacker who compromises it can implement a completely different behavior without the centralized management system noticing. Of course, when the only hardware available is an LED to switch on or off, the consequences of an attack are not dramatic, but let's move on to our second example.
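The "report only on change" behavior can be sketched as follows, assuming a hypothetical `AutonomousLamp` that lights up when it is dark and motion is detected. Over six readings it sends only two messages, which is the whole point, and also the security concern raised above: the Cloud never sees the readings behind each decision.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class AutonomousLamp:
    """Streetlight that decides alone and reports its state only when it changes.

    Hypothetical: the decision rule (dark AND motion) and the message format.
    """
    lamp_id: str
    dark_below_lux: float = 10.0
    is_on: bool = False

    def step(self, brightness_lux: float, motion: bool) -> Optional[dict]:
        new_state = brightness_lux < self.dark_below_lux and motion
        if new_state == self.is_on:
            return None                                    # no change: send nothing
        self.is_on = new_state
        return {"lamp_id": self.lamp_id, "on": new_state}  # report the change only

if __name__ == "__main__":
    lamp = AutonomousLamp("lamp-42")
    readings = [(50.0, False), (5.0, False), (5.0, True), (5.0, True), (5.0, True), (5.0, False)]
    sent = [msg for msg in (lamp.step(b, m) for b, m in readings) if msg is not None]
    print(f"{len(readings)} readings, {len(sent)} messages sent")  # 6 readings, 2 messages sent
```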

In this distributed architecture, the City of London cameras can detect movement in their field of view and start transmitting to the Cloud, which can then record the stream automatically. They can also perform face recognition on the image and automatically raise an alert when a face matches that of an individual stored in their memory. The benefit of this kind of architecture is clear: the cameras no longer have to stream 4K content continuously, only occasionally. The Cloud does not even have to process the images to recognize someone, because the cameras take care of it. However, this is where security becomes important. If a camera that merely transmits its video stream is compromised, the attacker gains access "only" to that stream, which they can exploit themselves. If the camera is more autonomous, an attacker who takes it over could remove faces from the database or add new ones, and the Cloud could fail to detect the malfunction because the camera no longer transmits continuously.
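On the camera side, the motion trigger could look like the sketch below. It uses plain frame differencing (mean absolute pixel difference between consecutive grayscale frames) as a deliberately simple stand-in for whatever detector a real camera would run; the threshold and the tiny list-of-lists frames are hypothetical, and face recognition is left out.

```python
# Hypothetical camera-side gate: send frames to the Cloud only while motion is detected.
# Frames are grayscale images stored as lists of rows of 0-255 ints.
Frame = list[list[int]]

def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two same-sized frames."""
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    count = sum(len(row) for row in a)
    return total / count

class MotionGate:
    def __init__(self, threshold: float = 10.0):
        self.threshold = threshold  # hypothetical sensitivity
        self.previous = None        # last frame seen
        self.streaming = False

    def on_frame(self, frame: Frame) -> bool:
        """Return True if this frame should be streamed to the Cloud."""
        if self.previous is not None:
            self.streaming = mean_abs_diff(self.previous, frame) > self.threshold
        self.previous = frame
        return self.streaming

if __name__ == "__main__":
    still = [[0, 0], [0, 0]]
    moved = [[200, 200], [0, 0]]
    gate = MotionGate()
    print([gate.on_frame(f) for f in [still, still, moved, moved]])  # [False, False, True, False]
```

A real design would probably keep streaming for a few seconds after motion stops rather than cutting off on the very next still frame.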

These constraints are not blockers and fairly simple workarounds exist, but we must be aware that adding "smart" nodes to a network can increase its exposure to attacks.

Conclusion

Let us now try to draw recommendations from these observations. If the number of connected devices grows as expected, the first issue to address will be network congestion (and, of course, security).

Edge computing is an extremely effective solution to this problem. Computing components are increasingly affordable (e.g. the ATtiny, an 8-bit 20 MHz microcontroller costing under €3) and consume less and less energy. It is therefore possible to offload certain computations to the ends of the network and relieve congestion by sending only the most relevant data.

However, this trend does not make the Cloud obsolete: it must now devote its computing power and high availability to device management interfaces and to services that require very high computing capacity (device registration, sending commands, configuration management, predictive maintenance, ...). In the case of Machine Learning, the training phase takes place in the Cloud on large knowledge bases, while real-time processing based on the generated model remains local.
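The "train in the Cloud, infer locally" split can be illustrated with the simplest possible model: a single brightness threshold (a decision stump) learned from hypothetical labeled history. The Cloud searches over candidate thresholds; the device only receives the resulting parameter and applies one comparison per reading. This illustrates the principle only and is not a realistic Machine Learning pipeline.

```python
# "Train in the Cloud, infer at the edge" with a one-parameter model (decision stump).
# All data below is hypothetical.

def train_threshold(samples):
    """Cloud side: choose the threshold that misclassifies the fewest labeled samples.

    samples: list of (brightness_lux, should_be_on) pairs; the model predicts
    ON when brightness < threshold.
    """
    values = sorted({b for b, _ in samples})
    candidates = values + [values[-1] + 1]  # the last candidate predicts ON for every sample

    def errors(t):
        return sum((b < t) != label for b, label in samples)

    return min(candidates, key=errors)  # ties go to the smallest threshold

def export_model(threshold):
    """What the Cloud would deploy to each device: just the learned parameter."""
    return {"dark_below_lux": threshold}

def infer(model, brightness_lux):
    """Device side: one comparison per reading, no network round trip."""
    return brightness_lux < model["dark_below_lux"]

if __name__ == "__main__":
    history = [(2, True), (5, True), (8, True), (15, False), (30, False), (60, False)]
    model = export_model(train_threshold(history))
    print(model)                               # {'dark_below_lux': 15}
    print(infer(model, 10), infer(model, 20))  # True False
```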

Security also calls for more rigor in this kind of architecture, because a network with more and more intelligent nodes offers more potential flaws. When raw data is sent to the Cloud, new services are easy to implement by filtering this or that data. When processing is delegated to the devices, the Cloud receives only the result of that processing, so you need to keep control over the devices in order to make changes (updates, two-way communication, ...).

Another solution is to use gateways that act as connectivity aggregators and translate IoT protocols into more standard ones, or encrypt and decrypt data. These gateways can also perform computation and processing steps, reducing the amount of data the IoT objects themselves have to process. They form what is called the Fog Computing layer.
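One processing step a gateway of this kind could take on is aggregation: buffering raw readings from nearby devices and forwarding one compact summary per device per time window. The sketch below is hypothetical and leaves out protocol translation and encryption.

```python
from collections import defaultdict
from statistics import mean

def summarize(readings):
    """Hypothetical fog-gateway step: collapse a window of raw readings into one summary per device.

    readings: iterable of (device_id, value) pairs received during the window.
    """
    by_device = defaultdict(list)
    for device_id, value in readings:
        by_device[device_id].append(value)
    return {
        device_id: {"count": len(values), "min": min(values), "max": max(values), "mean": mean(values)}
        for device_id, values in by_device.items()
    }

if __name__ == "__main__":
    window = [("lamp-1", 4.0), ("lamp-1", 6.0), ("lamp-2", 30.0)]
    for device_id, summary in summarize(window).items():
        # One summary per device is forwarded upstream instead of every raw reading.
        print(device_id, summary)
```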

In short:

- Edge computing helps relieve network congestion and thus limit failures.

- Lowering the load on the central servers also reduces latency.

- In the event of a network failure or unavailability of the centralized system, the devices can continue to operate autonomously until normal service is restored. This is what we call the resilience of the architecture.

- The data that ultimately reaches the Cloud is less sensitive (processed results rather than raw data) and can be encrypted.

- Distributing resources across an information system multiplies the attack vectors and increases its vulnerability to attacks.

- A powerful device management system must be in place to monitor the devices' status and update them remotely.

- Embedded firmware must be able (if necessary) to communicate device to device, without going through a smarter central platform that would synchronize and archive these exchanges.
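As an illustration of the device management point, here is a hypothetical sketch of a Cloud-side registry that records each device's last heartbeat and firmware version. Flagging devices that have gone silent is one simple way (an assumption on our part, not a method given in the text) to notice an autonomous device that no longer reports, although a compromised device could of course keep sending heartbeats; listing outdated firmware is the starting point for remote updates.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class DeviceRecord:
    firmware: str
    last_seen: float  # Unix timestamp of the last heartbeat

@dataclass
class Registry:
    """Hypothetical Cloud-side registry for device status and firmware tracking."""
    heartbeat_timeout_s: float = 300.0  # assumption: 5 minutes without news = suspect
    devices: Dict[str, DeviceRecord] = field(default_factory=dict)

    def heartbeat(self, device_id: str, firmware: str, now: Optional[float] = None) -> None:
        # Each device periodically reports that it is alive and which firmware it runs.
        self.devices[device_id] = DeviceRecord(firmware, time.time() if now is None else now)

    def silent(self, now: Optional[float] = None) -> List[str]:
        # Devices that stopped reporting: possibly offline, faulty or compromised.
        now = time.time() if now is None else now
        return sorted(d for d, r in self.devices.items() if now - r.last_seen > self.heartbeat_timeout_s)

    def needs_update(self, target_firmware: str) -> List[str]:
        # Devices to include in the next remote firmware rollout.
        return sorted(d for d, r in self.devices.items() if r.firmware != target_firmware)

if __name__ == "__main__":
    reg = Registry()
    reg.heartbeat("lamp-1", "1.2.0", now=1000.0)
    reg.heartbeat("cam-7", "1.1.0", now=1200.0)
    print(reg.silent(now=1400.0))     # ['lamp-1']
    print(reg.needs_update("1.2.0"))  # ['cam-7']
```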

The ideal architecture would therefore be:

- Increasingly autonomous and intelligent devices with no impact on battery life

- A centralized system with high computing power, used to run very complex algorithms and to manage the device fleet remotely

- A network robust and fast enough to provide reliable communications without latency that would affect the end service

If you want to make the most of the opportunities offered by IoT, learn to delegate.