DockerCon 2016: "Nobody cares about containers!"

docker run busybox /bin/echo 'hello boys and girls!'

As humans, we like new shiny things. And working in a wannabe devops world, that means solving problems with containers, too. We have been working with them for quite a while now, and we are more than happy with the results. We like them so much that we decided to travel more than 8000 km to Seattle, Washington, to attend this year’s DockerCon.

So if you’re into containers as well, and you’re dying to hear the hot news, you’ve come to the right place. Fasten your seatbelts, and enjoy the ride!

Docker and the future: What’s coming up?

Let’s talk about numbers first: during the first keynote, Ben Golub (Docker CEO) was all about showcasing Docker’s exponential growth in every single aspect, from DockerCon’s size (five hundred attendees in 2014 vs. four thousand in this year’s edition) to the number of containers downloaded up until the conference (4.1 billion).

- 986 people have interacted with the repository, with 300 pull requests per week on average:

  - median time to process is less than one day

  - mean time to process is 6 days

  - 90% of them are processed within 20 days of submission

- 800 paper submissions for this year’s DockerCon

- ⅔ of contributions to the Docker Engine are external

- 250+ meetups held all over the world, with 125k+ participants…

Anyway, you get the point: Docker is pretty big nowadays.

Docker development is driven by the needs of its user base. This means that most of its new features are either solutions to common community-wide problems or tools intended to be catalysts for enterprise adoption.

Based on this feedback, the folks at Docker came up with three main topics for this year’s keynotes:

- A seamless user experience from the developer perspective

- Built-in orchestration

- Integration with operations-oriented cloud resources such as AWS and Azure

We’ll discuss each one of these subjects right away. One order of coolness with fries, coming right up!

Developer’s experience: Introducing Docker for Mac and Docker for Windows.

Solomon Hykes said it himself: “nobody cares about containers”, and he’s right. Docker’s main focus is to make life easier for everybody, both developers and operations teams, by eliminating friction in the development environment. Let us all become developers for 30 seconds here: I don’t want to install 27 different tools before I can start coding stuff. Basically, I need a tool that helps me while getting out of the way at the same time. I don’t want to worry about the internal gears of this thing: simplicity is the key.

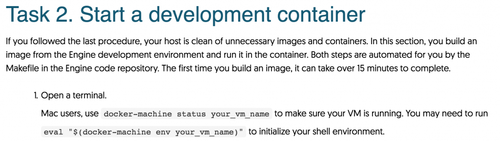

This wasn’t the way Docker used to work on OS X. It wasn’t simple. At all. First you had to install VirtualBox and then rely on a Boot2Docker VM, since OS X isn’t Linux, right? So you were running the Docker client natively on your Mac, but your Mac wasn’t the Docker host: Boot2Docker was. This process got simpler with the creation of Docker Toolbox, a single package that contained the Engine, Compose, Machine and Kitematic. It was an improvement, but you were still a bit uneasy about all these different tools on your machine. Why do I have to `eval $(docker-machine env seb_cool_vm)` each time I want to work with Docker? Graaaahhhh.

You could even see it in the official documentation.

What about now? Well, you can just go ahead and install Docker for Mac, which runs as a native Mac application and uses xhyve to virtualize the Docker Engine environment and the Linux kernel-specific features the Docker daemon needs. There’s similar support for Windows as well. So you’re still using a hypervisor to run Docker on your machine, but you don’t have to interact with it, or worry about it at all. This is seamless. It helps you, then it gets right out of your way.

So how did they pull this off? They hired a lot of people, both directly and by acquisition. They bought Unikernel Systems, for a start. They also hired a lot of people from the video game industry, as they were “five years ahead of everyone else” when it comes to operating IT systems.

Docker’s built-in orchestration

Basically, Docker is a tool we can use to build and run immutable “boxes”. That way, you can ship applications without having to worry about the deployment environment, since everything the application needs in order to run already exists inside the container. That’s already remarkable.

Still, application deployment is a recurrent issue. It is usually painful. How do I deploy an application that needs to be replicated multiple times? How do I notify my load balancer when scaling up or down? How on earth can I update the application version inside the containers, without downtime?

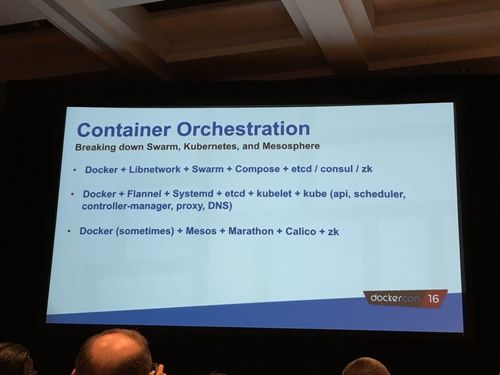

You could find answers to these questions with systems like Kubernetes, Mesos running Marathon (even if it’s not container-oriented by design) or Docker Swarm. They all fall into the “container orchestrator” category, and we also use the CaaS (Containers as a Service) acronym to describe them.

Using a Swarm cluster, you can deploy containers by notifying the whole cluster that it has to run them. Combine that with Docker Compose, and you can reduce the deployment effort drastically. Up until now, though, you still had to deal with load balancer notifications and perform all the rolling updates yourself. That was tedious and complex, and as Hykes himself put it: “[this] is an expert thing”, meaning that you could either hire an army of experts, or get another company to do it for you.

That’s no longer the case with Docker 1.12 and its built-in orchestration. The main goal of this release is to make orchestration really simple and manageable for everybody:

- Swarm mode: Every Docker Engine can link up with other engines to form a swarm, and therefore self-heal, run containers and attach volumes, all in a distributed and fault-tolerant way. It is also decentralized, in the sense that any node can become a manager or a worker at runtime. No external consensus datastore is needed (no need to install etcd).

- TLS/Cryptographic node identity: Each node that joins the cluster gets its own certificate, generated by a Certificate Authority inside a swarm manager node, which carries a random ID and the node’s current role in the swarm. Every node is therefore identified by a cryptographic key for as long as it participates in the cluster, and any action within the cluster can be traced back to a node. Each node also rotates its certificates, so compromised certificates are no longer valid after a rotation. That’s literally PKI out of the box.

- Docker “Service” API: You can now tell your swarm the desired state of a service, and then just let the magic work. A swarm cluster will handle rolling updates, scaling, advanced scheduling, healthchecks and rescheduling on node failure.

- Routing mesh: Overlay networking, container-native load balancing and DNS-based service discovery, all with no separate cluster to set up. It also works with your existing load balancers, and relies on rock-solid, kernel-only data paths with IPVS.

You can see that there are new abstractions, like services for example, that already existed in other orchestration tools, like Kubernetes. It’s good to see that the whole “container orchestration” ecosystem is converging to common abstractions that make sense.

All of these features were demonstrated during the keynotes, but we set up a quick demo so you can try it yourself. We will be creating a 3-node cluster using Docker Machine 0.8.

First, create your 3 nodes using docker-machine:

docker-machine create -d virtualbox swarm-master

docker-machine create -d virtualbox swarm-node-1

docker-machine create -d virtualbox swarm-node-2
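If you would rather not type the three commands by hand, the same step can be wrapped in a small loop. This is only a sketch: `node_names` and `create_nodes` are hypothetical helper names of ours (they are not part of docker-machine), and the machine names simply mirror the ones used above.

```shell
# Hypothetical helper: the three machine names used in this demo.
node_names() {
  printf '%s\n' swarm-master swarm-node-1 swarm-node-2
}

# Create one VirtualBox VM per name, stopping at the first failure.
create_nodes() {
  for node in $(node_names); do
    docker-machine create -d virtualbox "$node" || return 1
  done
}
```

Calling `create_nodes` from your workstation should leave you with the same three machines.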

Note the IP address of the manager node:

docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM DOCKER ERRORS

swarm-master - virtualbox Running tcp://192.168.99.100:2376 v1.12.0-rc2

swarm-node-1 - virtualbox Running tcp://192.168.99.101:2376 v1.12.0-rc2

swarm-node-2 - virtualbox Running tcp://192.168.99.102:2376 v1.12.0-rc2

Now, log in to your manager node:

docker-machine ssh swarm-master

And then you just have to initialise your swarm:

docker@swarm-master:~$ docker swarm init

Swarm initialized: current node (1uj1fqtzz7whgz97mbwcpiki8) is now a manager.

Afterwards, make the worker nodes join the swarm. Log in to the first one:

docker-machine ssh swarm-node-1

And then:

docker swarm join $MASTER_IP_ADDRESS:2377

docker@swarm-node-1:~$ docker swarm join 192.168.99.100:2377

This node joined a Swarm as a worker.

You can follow the same procedure for swarm-node-2... and that’s it! You now have a working swarm cluster. You can verify it from your manager instance:

docker@swarm-master:~$ docker node ls

ID NAME MEMBERSHIP STATUS AVAILABILITY MANAGER STATUS

1uj1fqtzz7whgz97mbwcpiki8 * swarm-master Accepted Ready Active Leader

2mryx3xbz7z7krhdaqtq5o8lu swarm-node-2 Accepted Ready Active

9lwaccv0qezyki3ho4rtwcw6j swarm-node-1 Accepted Ready Active
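The join steps above can also be driven from your workstation, without opening an interactive session on each worker. The sketch below assumes the machine names from this demo and the 1.12.0-rc2 join syntax shown above; the join syntax may differ in other Docker versions. `join_command` and `join_workers` are hypothetical helper names.

```shell
# Hypothetical helper: build the join command for a given manager IP.
# This is the 1.12.0-rc2 syntax used in this article.
join_command() {
  printf 'docker swarm join %s:2377\n' "$1"
}

# Look up the manager IP with docker-machine, then run the join command
# over SSH on every worker passed as an argument.
join_workers() {
  manager_ip=$(docker-machine ip swarm-master) || return 1
  for node in "$@"; do
    docker-machine ssh "$node" "$(join_command "$manager_ip")" || return 1
  done
}
```

With these in place, `join_workers swarm-node-1 swarm-node-2` should be equivalent to repeating the manual procedure on both workers.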

Let’s run a service on our newly created cluster:

docker@swarm-master:~$ docker service create --replicas 1 --name nginx -p 8080:80 nginx:1.10

cyhu3cztvjs4llpj7ewy9pobh

Now we can see the nginx welcome page at “http://localhost:8080” on any node in the swarm (note: according to our tests, this feature is not stable yet). Awesome, right?
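One way to check this from your workstation is to request the page from every node and look at the HTTP status code. This is a rough sketch, assuming `curl` is installed on your machine and that the service was published on port 8080 as above; `service_url` and `check_nodes` are hypothetical helper names.

```shell
# Hypothetical helper: URL of the published service on a given node IP.
service_url() {
  printf 'http://%s:8080/\n' "$1"
}

# Ask every node for the page and print the HTTP status code it returns.
check_nodes() {
  for node in "$@"; do
    ip=$(docker-machine ip "$node") || return 1
    code=$(curl -s -o /dev/null -w '%{http_code}' "$(service_url "$ip")")
    printf '%s %s\n' "$node" "$code"
  done
}
```

Running `check_nodes swarm-master swarm-node-1 swarm-node-2` should print one line per node. If the routing mesh behaves as advertised, every node answers, even the ones that are not running an nginx container.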

Right now our service is only running on one node, but we can scale it up by running:

docker@swarm-master:~$ docker service scale nginx=3

If you run `docker ps` on either swarm-node-1 or swarm-node-2, you will see that a container is running on each one of them. The nginx welcome page will now be served by each container in turn. By doing this you specify your desired state, which will be enforced by Swarm from now on.

Now let’s assume we want to update the version of nginx to 1.11. We simply run:

docker@swarm-master:~$ docker service update --image nginx:1.11 nginx

This command will perform an update on all three nodes simultaneously, and will cause a small downtime. If you want to perform a rolling update instead, you just have to add the `--update-parallelism=1` flag (which specifies the number of containers that are updated at the same time) and the `--update-delay=2s` flag (the time interval between two updates, written as a duration).
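Put together, a rolling update could look like the sketch below. The helper names are hypothetical, and the delay is written as a duration (`2s`), which is the form we are assuming the flag expects.

```shell
# Hypothetical helper: build a rolling-update command for a service.
# $1 = service name, $2 = new image, $3 = parallelism, $4 = delay (e.g. 2s)
rolling_update_command() {
  printf 'docker service update --update-parallelism %s --update-delay %s --image %s %s\n' \
    "$3" "$4" "$2" "$1"
}

# Run it on the manager over SSH: one container at a time, 2 seconds apart.
rolling_update_nginx() {
  docker-machine ssh swarm-master "$(rolling_update_command nginx nginx:1.11 1 2s)"
}
```

Calling `rolling_update_nginx` from your workstation should replace the three nginx containers one after the other instead of all at once.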

Swarm mode also reschedules your containers on node failure. For instance, if you shut down swarm-node-1, you will only have two nginx containers running on your cluster, whereas you asked for three of them when you scaled your service. Therefore, the engine will launch a new container on either swarm-master or swarm-node-2 in order to abide by your desired state.
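You can watch this happen by stopping a worker VM and counting the containers left on the other nodes. The sketch below assumes that nothing but the nginx service runs on the cluster. The 30-second pause is an arbitrary guess at how long rescheduling takes, not a documented value, and the helper names are hypothetical.

```shell
# Hypothetical helper: count container IDs read from stdin, one per line.
# `docker ps -q` prints exactly one ID per running container.
count_containers() {
  grep -c . || true
}

# Stop a worker VM, give the swarm some time to react, then report how
# many containers each remaining node is running.
simulate_failure() {
  docker-machine stop swarm-node-1 || return 1
  sleep 30
  for node in swarm-master swarm-node-2; do
    n=$(docker-machine ssh "$node" docker ps -q | count_containers)
    printf '%s: %s container(s)\n' "$node" "$n"
  done
}
```

After `simulate_failure`, the two counts should add up to three if the swarm has restored the desired state.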

This is pretty much the definition of a tool that “gets out of your way”. Note that Docker for Mac/Windows already uses Docker 1.12. The beta is now open, so go ahead and try it!

Last but not least, Docker announced a portable format for multi-container applications: the Distributed Application Bundle (or DAB). It is built from a Compose file. Once built, you just have to pass it to your swarm cluster and everything’s taken care of automatically. This feature is still experimental, but the documentation is already out there, in case you feel like reading something.

Operations experience: integration with AWS and Azure

Docker also wants to improve the experience of operations teams by providing better integration with widely used cloud providers, starting with AWS and Azure.

This is currently only available in beta, and consists mainly of VM templates (AWS CloudFormation and Azure Resource Manager) which can be used to instantiate a cluster on either of these platforms. The goal is to make all cloud provider-specific configuration “transparent”: none of the Docker CLI commands have to change.

For example, in AWS a service is accessed through an Elastic Load Balancer in front of your applications. This ELB needs to be aware of every backend it has to serve: each time an application scales up or down, the ELB must be notified. Docker integrates with AWS in such a way that when you run `docker service scale <service_name>=x`, the ELB is automatically reconfigured, and is therefore capable of redirecting traffic towards the new endpoints. We don’t know exactly how much time the ELB needs to reconfigure itself, since we haven’t tried it yet, but it seemed to work quite fast during the demo. Access Control Lists are also automatically generated. The same kind of features are available for Azure too.

Docker in the corporate world: the need for security and simplicity

A recurrent message of this DockerCon is that Docker is for everybody, from startups with greenfield applications, to big non-tech oriented companies with legacy infrastructure. It’s true that Docker makes the deployment of stateless microservices easier (“one process per container”), but it’s also very true that you can benefit from Docker’s immutability and workflow when dockerizing your monoliths. You can also get used to the technology while at it. That way, you can make the transition from a monolithic application to a service-decoupled one in a progressive fashion.

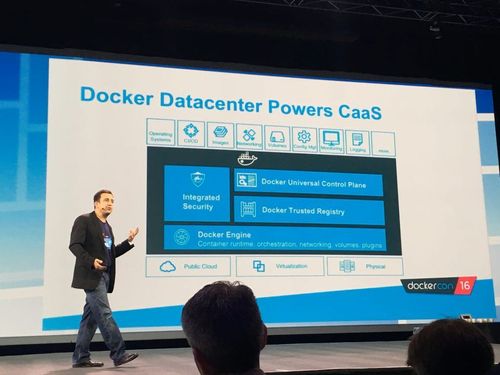

In the past, big non-tech companies had concerns about vulnerabilities in images pulled from the Docker Hub, and about the difficulty of migrating to Docker when you operate at the scale they do (thousands of apps). This slowed down the adoption of the technology, and to address these concerns Docker plans to release:

- Universal Control Plane: This has actually been around for a while, but it now provides better integration with the trusted registry and improved features. It’s basically a GUI on top of a Docker cluster. It centralizes the logs, aggregates metrics and shows resource utilisation. It has multitenancy and group-based access control. It’s also capable of deploying the DABs introduced earlier.

- Trusted registry: This has been around for a while too, but images pushed to the registry will now be automatically scanned for vulnerabilities against a database maintained by Docker. This way you will get notifications if you have security issues in your images. Hooray! Be careful though: even if you don’t have any vulnerabilities in your images, you can still have security issues within your architecture.

- Docker Store: This is a public store of images digitally signed and checked for vulnerabilities by Docker, much like the App Store or Google Play, but intended for Docker images.

We have yet to see if all these new features will increase Docker’s utilisation in the enterprise world, but so far they seem pretty well oriented and functional.

Final thoughts

DockerCon was a great experience. It felt good to meet the American community, and to see that it is growing at a fast pace. It’s good to see that Docker is really listening to its community. Thank you to all the staff at Docker who pulled this off: your hard work clearly paid off, and was really appreciated by all of us.

Thanks for the party at the Space Needle too! We had a blast!

So, in short:

What we loved:

- All the orchestration giants converge toward similar abstractions.

- Docker’s vision about the seamless experience. Deploying a Swarm cluster is really really easy today. This is actually encouraging competitors to reduce the complexity of the deployment of their tools. For us, it’s a win-win scenario.

- Docker Inc. really listens to its community.

- The support for Hack Harassment. We took the pledge, and we encourage you to do the same.

What we didn’t like:

- We were excited about SwarmKit before DockerCon, and we didn’t hear a word about it during these 3 days. We don’t really know if it’s been integrated into the Docker Engine like Swarm, or if it has been dropped completely as a project. Huh. (Edit 28/06/2016: This point was clarified by Solomon Hykes himself in the comments below. Check his response if you're curious too.)

We will also release a second article containing our analysis of the sessions we saw this year during the conference. Stay tuned!