Docker Q&As

After talking for a while with a few co-workers about Docker versus LXC and regular VMs, it seemed that a few questions and answers could help people understand the concepts and their main differences.

Q: What are the differences between VMs (hosted on VMware, KVM...) and LXC containers?

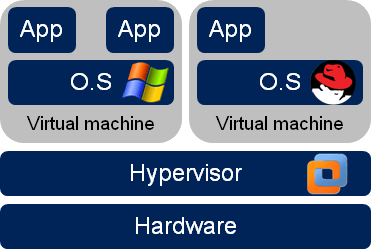

VMs are about running several full guest operating systems (Linux, Windows, BSD...) on shared hardware resources. These OSes can differ from one VM to another and from the host OS. Each OS (kernel) thinks it is running on its own on regular hardware, through generic or hypervisor-specific drivers.

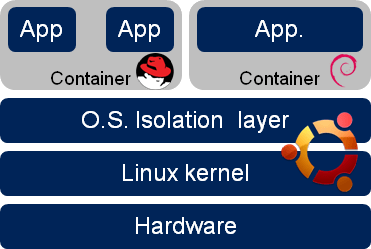

Containers are about resource isolation: processes, file systems, network interfaces and other kernel resources (shared memory, mutexes, semaphores...) are isolated within a single running operating system, subject to usage limits (CPU, memory, IOPS). Containers are often described as "chroot on steroids". FreeBSD jails and Solaris zones/containers address the same goal.

Q: Can LXC containers on the same host run several Linux distributions?

Short answer: yes. Long answer: LXC containers share their host's kernel. They can see the host's kernel information (physical/virtual, kernel version...), but they have their own group of processes, network configuration and file system. So you can start an LXC Debian container on an LXC CentOS host: the uname command returns information about the host kernel (except the hostname), whereas the /etc/*-release files describe the running Debian. In this case, the package manager is RPM on the host and dpkg in the container.
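With Docker, which sits on top of LXC, this is easy to observe. The following is a minimal sketch, assuming a `debian:stable` base image is available (the tag is our assumption). Built and run on a CentOS host, its command should print the host's kernel release, then the Debian release files from the container's own file system, then the version of dpkg.

```dockerfile
# Assumption: the debian:stable image tag is available from the registry
FROM debian:stable

# At run time, print:
#  - the kernel release, which is the host's (shared) kernel
#  - the distribution release files, read from the container's own file system
#  - the version of dpkg, Debian's package manager
CMD ["sh", "-c", "uname -r; cat /etc/*-release; dpkg --version"]
```

Running `uname -r` directly on the CentOS host should print the same kernel release as the first line of the container's output.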

Q: So if containers are nothing but isolation, why can they be compared to VMs?

In the Linux world, starting a container with a full-OS-like process tree (init/systemd/upstart, sshd) within a dedicated file system gives you a logically isolated system that really behaves like a VM from a user's perspective: you can log in over SSH on a dedicated IP address and run sudo, ps, top, yum/apt-get install, kill or service apache2 restart. OpenStack, libvirt and Vagrant now support LXC as yet another way of providing VMs, even if the term doesn't really fit.
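A reduced sketch of this VM-like usage with Docker follows. It is not a full init system: the container runs only an SSH daemon, which is enough to log in and then use ps, top or apt-get install. The Ubuntu base image and the local `authorized_keys` file are assumptions made for illustration.

```dockerfile
# Assumption: an Ubuntu base image; package names and paths are Ubuntu's
FROM ubuntu:latest

# Install the SSH server that makes the container reachable like a VM
RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y openssh-server

# sshd expects its privilege-separation directory to exist
RUN mkdir -p /var/run/sshd

# Hypothetical file: your own public key, authorized for root
ADD authorized_keys /root/.ssh/authorized_keys

EXPOSE 22

# Keep sshd in the foreground (-D) so that it remains the container's main process
CMD ["/usr/sbin/sshd", "-D"]
```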

Q: What are the differences between regular LXC and Docker containers?

From a technical perspective, there are only a few things to mention. Docker is simply an encapsulation layer on top of LXC and iptables, exposing an API and a command-line client to provide value-added features.

Thanks to its layered file system stack (originally AUFS, now also device-mapper), it natively provides versioning of container file systems. A container's file system is described as incremental changes applied over a previous version.
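As a minimal sketch of this layering, each instruction in the Dockerfile below produces its own layer, stored as the changes it makes on top of the previous one. The `curl` package and the local `motd` file are arbitrary, hypothetical choices.

```dockerfile
FROM ubuntu:latest

# Each instruction below is stored as a separate file system layer,
# containing only the changes it makes on top of the previous layer
RUN apt-get update
RUN apt-get install -y curl

# Hypothetical local file: adding it produces one small incremental layer
ADD motd /etc/motd
```

Running `docker history` on the resulting image lists one entry per instruction. Rebuilding after editing only `motd` should reuse the cached layers created by the earlier instructions.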

It implements an LXC use case in which you don't start a whole full-OS-like process tree, but only a single application process or a single application-centric process tree. You have to provide a script to launch your application at startup, or have supervisord do it for you, but you can't easily rely on the distribution's native SysVinit / upstart / systemd startup toolchain.
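For the supervisord option, a minimal sketch might look like this. It assumes an Ubuntu base image, where the `supervisor` package's main configuration includes `/etc/supervisor/conf.d/*.conf`. The local `app-supervisor.conf` file, which would declare the `[program:...]` sections to start, is hypothetical.

```dockerfile
FROM ubuntu:latest

# Install supervisord from the distribution packages
RUN apt-get update && apt-get install -y supervisor

# Hypothetical file declaring the application processes to start
ADD app-supervisor.conf /etc/supervisor/conf.d/app.conf

# Run supervisord in the foreground (-n) as the container's main process,
# instead of relying on SysVinit / upstart / systemd
CMD ["/usr/bin/supervisord", "-n", "-c", "/etc/supervisor/supervisord.conf"]
```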

Note: as of today (Docker 0.7.x), Docker is exclusively bound to LXC. This might change in future releases, as its refactoring makes it more pluggable and extensible.

Q: How fast(er) are containers?

At runtime: performance is very close to bare metal. Only a small accounting and quota-enforcement overhead applies.

At startup: you don't have to boot a whole kernel, only fork a process tree, which is significantly faster. With Docker it's even faster, because you only start the application process(es) you need. Nothing useless!

Q: How about memory footprint?

The memory footprint is only the memory consumed by the running processes: there is no guest kernel and no idle OS services. This makes containers so lightweight that you can easily run many of them on the same host.

Q: What are Dockerfiles?

Like a Makefile or a Vagrantfile, a Dockerfile is a text file that describes how to build a Docker image, ready to be instantiated as containers. As of today, the Dockerfile syntax is really simple and contains only a few self-explanatory instructions (FROM, RUN, ADD, CMD, USER, EXPOSE).

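```dockerfile
# from which image we start
FROM ubuntu:latest

# what commands to run to build the image
# (refresh the package index before the first install)
RUN apt-get update
# provides add-apt-repository (on Ubuntu 12.04, install python-software-properties instead)
RUN DEBIAN_FRONTEND=noninteractive apt-get install -y software-properties-common
RUN add-apt-repository -y ppa:nginx/stable
RUN apt-get update
RUN apt-get install -y nginx

# which local files to copy into the image
ADD nginx.conf /etc/nginx/
ADD nginx.sh /

# which network port will be exposed by this container
EXPOSE 80

# what command to launch at container startup
# (nginx.sh must be executable in the build context)
CMD ["/nginx.sh"]
```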

Q: What kinds of nesting are possible?

VMs in VMs

It makes sense in a few use cases (for instance, testing an OpenStack deployment on top of an OpenStack :)). Performance degradation is a real issue, though.

Containers in VMs

This is probably the most meaningful use case, especially if getting a VM from your Ops team is a real pain. It lets you keep the containing VM « clean » and completely decouple it from the life cycle of the containers it hosts. Containers can be reinstalled as often as you like. You might have to play with network tricks such as NAT, MASQUERADE or PAT to expose the container services.

Containers in containers

There is no real issue with this trick. The hosting container must run with elevated LXC privileges. Nesting depth can be insane, though we don't see any real-life use case beyond two or three levels.

VMs in containers

We have never tried this. In theory, it only applies to hypervisors that run on top of a regular Linux kernel (KVM, for instance). We don't see the benefits of it.

Q: What about Puppet / Chef / Salt / Ansible? Does it make sense to use such tools? Inside/Outside a Docker container?

On the host side, it's definitely worth it. For instance, you can find Puppet modules to manage Docker containers.

On the guest side, things are far less clear. There are basically two steps where config management could be used:

- At build time (to create the container)

- At startup time to finalize the configuration (apply environment specific parameters) before starting the processes

As an example, we could imagine a Dockerfile that uses Puppet in masterless mode to do some container setup at build time:

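```dockerfile
# let's pretend there is a CentOS Docker image with Puppet available
FROM centos:puppet

# copy the local Puppet configuration into the image
ADD conf /etc/puppet/

# apply the tomcat7_rhel class once, at build time
RUN puppet apply -v -e 'include tomcat7_rhel'
```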

Running such tools at startup, or even at run time, might be useful if you have to perform very tricky environment-specific configuration.

We're far from a real consensus on this. In any case, it implies splitting classes/cookbooks to clearly separate the two execution steps, which means quite a lot of refactoring.
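One way to picture that split is the rough sketch below. The build-time class stays in a RUN instruction, as in the masterless example. A hypothetical `start.sh` script (not shown) would apply a separate, equally hypothetical startup-time class with the environment-specific parameters before launching the service. The `centos:puppet` image is the same pretend image as before.

```dockerfile
# Assumption: a pretend CentOS image with Puppet available
FROM centos:puppet

ADD conf /etc/puppet/

# Build time: environment-independent setup, baked into the image
RUN puppet apply -v -e 'include tomcat7_rhel'

# Startup time: hypothetical script that applies the environment-specific
# class (e.g. with values passed through `docker run -e ...`),
# then starts the service in the foreground
ADD start.sh /start.sh
CMD ["sh", "/start.sh"]
```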

Conclusion

Many questions remain to be answered, and we'll try to address them in future posts: local registry usage, configuration management, administration/operations, network, storage.