Docker container's configuration

As we saw in the previous post, basic Docker usage is pretty simple. Let's dig a little deeper into configuration management.

From an Ops perspective, we can treat containers as application black boxes and simply use them without caring how they are built. However, several aspects of a container must be configured to ensure its correct integration into an information system.

Container run-time configuration

It is possible, and recommended, to limit CPU and memory usage in LXC, and therefore in Docker containers. The docker run command provides both limits through the -m (memory) and -c (CPU shares) options. However, we may also need to configure the application inside the container so that its memory usage and process/thread counts match those limits.

We'll try to illustrate this by improving the previous memcached example.

Docker provides a simple way to pass configuration options to containers through environment variables:

```
$ docker run -e MY_VAR=666 -t -i 127.0.0.1:5000/ubuntu/memcached:latest /bin/bash
daemon@078c7cf85f66:/$ echo $MY_VAR
666
daemon@078c7cf85f66:/$ exit
```

Now we can adapt our startup script and Dockerfile to use this feature:

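```dockerfile
# Dockerfile
FROM 127.0.0.1:5000/ubuntu:latest

# Prevent package post-install scripts from trying to talk to Upstart
RUN dpkg-divert --local --rename --add /sbin/initctl
RUN ln -s /bin/true /sbin/initctl

# Refresh the package index first, otherwise apt-get install may fail
RUN apt-get update && apt-get install -y memcached

ADD memcached.sh /
# Make sure the startup script is executable inside the image
RUN chmod +x /memcached.sh

EXPOSE 11211

# Default settings, overridable at run time with docker run -e
ENV MEMCACHED_MEM 512
ENV MEMCACHED_CON 1024

CMD ["/memcached.sh"]
USER daemon
```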

And the memcached.sh startup script:

```bash
#!/bin/bash
# Default values if the env vars are not set
mem=${MEMCACHED_MEM:-64}
conn=${MEMCACHED_CON:-1024}

# exec replaces the shell so memcached runs as the container's main process
exec memcached -m "$mem" -c "$conn"
```
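The ${VAR:-default} fallbacks can be checked without building an image. The sketch below copies the expansions into a hypothetical memcached_cmd function that prints the command line instead of exec'ing it. Note that :- also falls back to the default when the variable is set but empty.

```bash
#!/bin/bash
# Hypothetical helper: prints the memcached command line that memcached.sh
# would exec, using the same ${VAR:-default} fallbacks.
memcached_cmd() {
    local mem=${MEMCACHED_MEM:-64}
    local conn=${MEMCACHED_CON:-1024}
    printf 'memcached -m %s -c %s\n' "$mem" "$conn"
}

# Variables unset: the script defaults apply
unset MEMCACHED_MEM MEMCACHED_CON
memcached_cmd                                        # prints: memcached -m 64 -c 1024

# Variables set, as with docker run -e (or ENV in the Dockerfile)
MEMCACHED_MEM=128 MEMCACHED_CON=512 memcached_cmd    # prints: memcached -m 128 -c 512
```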

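If we rebuild the image and launch it, memcached will by default start with 512 MB of memory and 1024 connections: the ENV values baked into the Dockerfile take precedence over the script's fallbacks, so the 64 MB default only applies when MEMCACHED_MEM is unset. We can also force other values at run time:

```
$ docker run -d -m=130m -e MEMCACHED_MEM=128 -e MEMCACHED_CON=512 127.0.0.1:5000/ubuntu/memcached:latest
9215e32d9bee4c632623b9d4cfff226f260069c28f94307989788e48c8fbf9a9
```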

A simple ps command confirms that the variables are taken into account:

```
\_ lxc-start -n 9215e32d9bee4c632623b9d4cfff226f260069c28f94307989788e48c8fbf9a9 -f /var/lib/docker/containers/9215e32d9bee4c632623b9d4cfff226f260069c28f94307989788e48c8fbf9a9
    \_ memcached -m 128 -c 512
```

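Applications that natively read environment variables are easily reconfigured this way. For others it can be a little trickier, and the startup script has to translate the variables into command-line options, as in this nginx example:

```bash
# By default, start 4 workers unless overridden by the NGINX_WORKERS environment variable
workers=${NGINX_WORKERS:-4}

# nginx rejects a directive set both in nginx.conf and with -g, so worker_processes
# must not also appear in nginx.conf. The container also needs nginx to stay in
# the foreground (e.g. "daemon off;" in nginx.conf).
exec /usr/sbin/nginx -g "worker_processes $workers;"
```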
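Because the value ends up verbatim in an nginx directive, a typo in NGINX_WORKERS would prevent nginx from starting. A possible refinement, sketched under the assumption that falling back to the default is preferable to failing, validates the value first. The nginx_workers function is hypothetical; it accepts a positive integer or auto, which nginx also supports for worker_processes.

```bash
#!/bin/bash
# Hypothetical helper: prints NGINX_WORKERS if it is a positive integer or "auto",
# otherwise warns on stderr and falls back to the default of 4.
nginx_workers() {
    local workers=${NGINX_WORKERS:-4}
    if [[ ! $workers =~ ^([1-9][0-9]*|auto)$ ]]; then
        echo "invalid NGINX_WORKERS='$workers', using 4" >&2
        workers=4
    fi
    printf '%s\n' "$workers"
}

# The startup script would then run something like:
#   exec /usr/sbin/nginx -g "worker_processes $(nginx_workers);"
NGINX_WORKERS=2 nginx_workers      # prints: 2
NGINX_WORKERS=abc nginx_workers    # prints: 4 (plus a warning on stderr)
```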

Inter-container communications

As soon as containers need to communicate with each other, topology-dependent settings must be injected into them. Docker's links feature simply provides this information as environment variables.

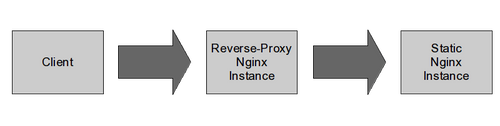

In the following example, we'll consider two nginx instances: one acting as a static content provider (web1), the other acting as a reverse proxy (rp1) in front of the first one.

Assume we have two Docker images providing these roles: nginx:latest and nginx/rp:latest. We won't go into much detail about how they are built; that is not the point here.

Let's start the static nginx instance first, whose configuration is self-contained, and name the running container web1:

```
$ docker run -d -name=web1 127.0.0.1:5000/nginx:latest
3932c46c8ad522cae11f7fd942e488379d4f6a04801f51eb9435fc5c56e8272e
```

Now let's start a bash shell in the reverse-proxy image, linked to web1:

```
$ docker run -link=web1:web -t -i 127.0.0.1:5000/nginx/rp:latest /bin/bash
daemon@eefeee437402:/$ export | grep WEB
declare -x WEB_ENV_NGINX_WORKERS="1"
declare -x WEB_NAME="/insane_fermi/web"
declare -x WEB_PORT="tcp://172.17.0.28:80"
declare -x WEB_PORT_80_TCP="tcp://172.17.0.28:80"
declare -x WEB_PORT_80_TCP_ADDR="172.17.0.28"
declare -x WEB_PORT_80_TCP_PORT="80"
declare -x WEB_PORT_80_TCP_PROTO="tcp"
```

The WEB_PORT variables can be used to point our upstream at the web1 nginx instance. Unfortunately, nginx's upstream configuration cannot be based on environment variables. This is the worst-case scenario: we have to generate the configuration file ourselves, here with sed:

```bash
# Strip the tcp:// prefix from WEB_PORT (tcp://172.17.0.28:80 -> 172.17.0.28:80)
NGINX_UPSTREAM_URL=${WEB_PORT#tcp://}

# Use sed to generate the final site.conf nginx configuration file from the template
sed -e "s/##NGINX_UPSTREAM_URL##/$NGINX_UPSTREAM_URL/g" /site.conf.tpl > /etc/nginx/sites-enabled/site.conf
```
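The ${VAR#pattern} expansion removes the shortest match of pattern from the start of the value. The sketch below replays it on the values from the link output above and compares it with building host:port from the per-port variables. The latter may be preferable when the linked container exposes several ports, since WEB_PORT only describes the lowest-numbered exposed port.

```bash
#!/bin/bash
# Sample values copied from the link variables shown above
WEB_PORT="tcp://172.17.0.28:80"
WEB_PORT_80_TCP_ADDR="172.17.0.28"
WEB_PORT_80_TCP_PORT="80"

# ${VAR#pattern} removes the shortest leading match of pattern
from_prefix=${WEB_PORT#tcp://}

# Alternative: build host:port from the per-port variables
from_parts="${WEB_PORT_80_TCP_ADDR}:${WEB_PORT_80_TCP_PORT}"

echo "$from_prefix"   # prints: 172.17.0.28:80
echo "$from_parts"    # prints: 172.17.0.28:80
```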

The template file, site.conf.tpl, might look like this:

```nginx
upstream upstream_server {
    server ##NGINX_UPSTREAM_URL##;
}

server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://upstream_server;
        proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;
        proxy_redirect off;
        proxy_buffering off;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```
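A slightly more defensive version of the sed rendering step could stop early when the link variable is missing and check that no placeholder survived. This is a sketch only: render_site_conf is a hypothetical name, and the demo writes to temporary files instead of /site.conf.tpl and /etc/nginx/sites-enabled/site.conf so it can be run anywhere.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical helper: fills the ##NGINX_UPSTREAM_URL## placeholder of the
# template ($1) into the output file ($2), using the link variable WEB_PORT.
render_site_conf() {
    local tpl=$1 out=$2
    # Exit with a clear message if the container was started without the link
    : "${WEB_PORT:?WEB_PORT is not set, was the container started with a link to web1?}"
    local upstream=${WEB_PORT#tcp://}
    sed -e "s/##NGINX_UPSTREAM_URL##/$upstream/g" "$tpl" > "$out"
    # Refuse to continue if any ##...## placeholder was left unreplaced
    if grep -q '##[A-Z_]*##' "$out"; then
        echo "unreplaced placeholder in $out" >&2
        return 1
    fi
}

# Demo with a temporary directory and a shortened template
tmpdir=$(mktemp -d)
trap 'rm -rf "$tmpdir"' EXIT
printf 'upstream upstream_server {\n    server ##NGINX_UPSTREAM_URL##;\n}\n' > "$tmpdir/site.conf.tpl"

WEB_PORT="tcp://172.17.0.28:80"
render_site_conf "$tmpdir/site.conf.tpl" "$tmpdir/site.conf"
cat "$tmpdir/site.conf"
```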

Now we simply start the reverse-proxy instance and publish its HTTP port on the host:

```
$ docker run -d -link=web1:web -name=rp1 -p=80:80 127.0.0.1:5000/nginx/rp:latest
50dfbb2e9979eb2a9cf499a22e42172339c5e8f9138cb69f0d97ea4ec31fbd2a
```

Wonderful, we now have a working chain. However, two concerns remain:

- On a multi-host topology (containers running on several physical or virtual hosts), the link feature does not apply. Fortunately, if you can provide the exact same environment variables by other means, things will still work.

- What happens if you stop, destroy and recreate web1? Docker will create a new container that is unlikely to get the same IP address: failure! The environment variables used to start the reverse proxy now point to nothing useful, and you have to restart all the dependent containers.
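For the multi-host case, one way to provide the same variables by other means is to pass them explicitly with -e. The hypothetical link_env_opts function below prints options matching the link variables shown earlier, for a single TCP port. It requires bash 4 for ${1^^} and does not reproduce WEB_NAME or the WEB_ENV_* variables, which our startup script does not use.

```bash
#!/bin/bash
# Hypothetical helper: prints docker run -e options that mimic the variables
# a link with the given alias would set for one TCP port on host:port.
link_env_opts() {
    local alias=${1^^} host=$2 port=$3      # ${1^^} upper-cases the alias (bash 4+)
    local url="tcp://${host}:${port}"
    local prefix="${alias}_PORT_${port}_TCP"
    local opts=(
        -e "${alias}_PORT=${url}"
        -e "${prefix}=${url}"
        -e "${prefix}_ADDR=${host}"
        -e "${prefix}_PORT=${port}"
        -e "${prefix}_PROTO=tcp"
    )
    printf '%s\n' "${opts[*]}"
}

link_env_opts web 10.0.0.5 80
```

It could then be used as `docker run $(link_env_opts web 10.0.0.5 80) -d -name=rp1 -p=80:80 127.0.0.1:5000/nginx/rp:latest`, where 10.0.0.5 stands for a hypothetical address of the host running web1 with its port 80 published. The unquoted `$(...)` relies on the values containing no spaces.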

Tools such as fig and MaestroNG have been built on top of Docker to help handle such situations; MaestroNG can also handle multi-host designs. If you need even more control over the configuration and orchestration of your Docker containers, you can still build on top of frameworks such as Capistrano.

Conclusion

Pros:

- Docker handles cross-container communication well through environment variables.

- Really simple topology management (links) provides a static way of wiring containers together.

Cons:

- On a larger scale, or in complicated topologies with many links and containers, it may make sense to use orchestration tools on top of Docker to provide high-level deployment management (rolling deployments, zero-downtime deployments...).

- In very dynamic and elastic architectures, distributed service registries such as ZooKeeper, etcd or Serf should be considered.