Discovering CoreOS

Isn't there a more suitable OS for VMs in a cloud environment?

An OS on a VM is usually treated the old way: install your favorite OS, make an image of it, clone that image.

OS updates? Package management? Application deployment? As usual, as before: use your favorite package manager and app deployer, and orchestrate them with your favorite orchestrator.

But VMs in the cloud are not like your good old servers: there are many of them, they are volatile, and there is Docker and the like. You feel that your old tools are less suited to these particular OSes running in data centers, especially if you want them to scale.

And then comes CoreOS.

CoreOS is based on Chrome OS, and its first image was released on 07/25/2013. It aims to be an OS that runs in data centers and hosts Docker containers. Indeed, CoreOS is specialized for that task.

Here are the promises:

- Minimalistic: the OS is tailored to run only the software needed to run containers, which will host your applications.

- Reliable: we'll talk about that later, but let's say for now that updates are applied automatically.

You can run CoreOS on any virtualisation platform and cloud provider you can think of. Forget ISO images, everything is done over the network. The documentation will guide you through the install process.

The good

Install is easy, and you end up with a running OS in no time.

Looking at the ps output:

1 ? Ss 0:01 /usr/lib/systemd/systemd --switched-root --system --deserialize 19

372 ? Ss 0:00 /usr/lib/systemd/systemd-journald

398 ? Ss 0:00 /usr/lib/systemd/systemd-udevd

449 ? Ss 0:00 /usr/lib/systemd/systemd-logind

453 ? Ss 0:00 /usr/sbin/ntpd -g -n -u ntp:ntp -f /var/lib/ntp/ntp.drift

460 ? Ss 0:00 /usr/bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation

473 ? Rsl 0:14 /usr/sbin/update_engine -foreground -logtostderr -no_connection_manager

478 ? Ss 0:00 /usr/lib/systemd/systemd-resolved

510 ? Ssl 0:00 /usr/lib/locksmith/locksmithd

554 ? Ssl 0:00 /usr/bin/etcd

555 tty1 Ss+ 0:00 /sbin/agetty --noclear tty1 linux

559 ? Ssl 0:03 /usr/bin/fleetd

661 ? Ss 0:00 /usr/lib/systemd/systemd-networkd

As said before, CoreOS is about the cloud and managing a large fleet of servers. As such, one VM is not enough, and you are encouraged to run at least three. But why would I need three VMs, even shipped with Docker? It seems like I have ... three VMs running independently, as I had when I installed Ubuntu, CentOS or the like.

And here come the strengths of CoreOS: etcd and fleet.

Both are developed by the CoreOS team, but can be compiled and used on other Linux distributions.

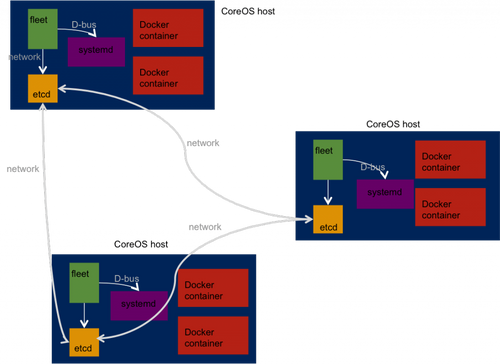

Here is a sneak peak of the CoreOS architecture :

etcd

etcd is a distributed, resilient and highly available (through Raft consensus) key-value store. Its goal is to share data between all instances of CoreOS, provided that they run etcd. Posting data to etcd and retrieving it is just a matter of using the RESTful API or one of its clients. Note that etcd runs on the host, not within a container.

It has nice features, such as: TTLs on entries or directories, waiting for an entry to change and keeping a history of those changes, key ordering to simulate a queue, hidden directories, statistics on the etcd cluster or on a node, and more.

With such a thing, it becomes possible to share configuration across all hosts, without the need for third-party software.

curl -X PUT -L http://127.0.0.1:4001/v2/keys/hello -d value="world"

{"action":"set","node":{"key":"/hello","value":"world","modifiedIndex":9664,"createdIndex":9664}}

core@core-03 ~ $ curl -L http://127.0.0.1:4001/v2/keys/hello

{"action":"get","node":{"key":"/hello","value":"world","modifiedIndex":9513,"createdIndex":9513}}

But you can also use etcdctl:

core@core-03 ~ $ etcdctl set /hello you

you

core@core-02 ~ $ etcdctl get /hello

you
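The TTL and wait features listed earlier can be tried the same way. Here is a minimal sketch that wraps two calls to the v2 keys API in shell functions. It assumes etcd listens on 127.0.0.1:4001 as in the examples above; the function names and the `ETCD_URL` variable are made up for illustration.

```shell
# Assumption: etcd listens on the same address as in the examples above.
ETCD_URL="${ETCD_URL:-http://127.0.0.1:4001}"

# Set a key that etcd deletes by itself after <ttl> seconds.
# Usage: etcd_set_ttl <key> <value> <ttl>
etcd_set_ttl() {
  curl -sL -X PUT "$ETCD_URL/v2/keys/$1" --data-urlencode "value=$2" -d "ttl=$3"
}

# Block until the key changes, then print the change as JSON.
# Usage: etcd_wait <key>
etcd_wait() {
  curl -sL "$ETCD_URL/v2/keys/$1?wait=true"
}
```

For example, run `etcd_wait hello` on one host and `etcd_set_ttl hello world 30` on another: the first call should return as soon as the key is written, and the key should disappear 30 seconds later unless it is set again.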

Okay, now I have hosts sharing data. I have Docker containers that can run on CoreOS hosts. But they are quite "stuck" to these hosts, don't you feel? CoreOS seems versatile to me, I can install it everywhere in a snap, but why are my Docker containers so stuck to a VM?

fleet

fleet controls the systemd daemon of CoreOS instances via D-Bus, and is itself controlled via sockets (Unix or network). It uses etcd (localhost by default) to communicate with the fleet daemons on the other CoreOS hosts.

Systemd is an alternative to the System V init daemon, and does much more. Describing it is outside the scope of this article, but remember that it replaces a bunch of shell scripts with "configuration" files, called Units.

Fleet helps you deploy units (and Docker containers can be units) according to several distribution strategies:

- Some units can be spread over every fleet-enabled host of the etcd cluster (they are called global units)

- Some rules can prevent two units from running on the same host (which can make sense for H/A)

- Some rules enforce that two units must run on the same host

- Some units can only run on specifically tagged hosts. Each fleet daemon can be tagged with several labels (metadata) to help categorize hosts by functional or geographic criteria. Those tags can be injected by cloud-config.
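These strategies are expressed in an `[X-Fleet]` section added to the unit file. The sketch below writes a hypothetical template unit, `web@.service`, that refuses to share a host with another instance of itself and only runs on hosts tagged `region=eu`. The unit name, the tag and the container are invented for the example, and the option names are the ones I know from the fleet documentation (older releases used `X-`-prefixed names), so check them against your fleet version.

```shell
# Write a hypothetical template unit to a scratch directory.
unit_dir="$(mktemp -d)"
cat > "$unit_dir/web@.service" <<'EOF'
[Unit]
Description=Web front end %i
After=docker.service
Requires=docker.service

[Service]
TimeoutStartSec=0
ExecStartPre=-/usr/bin/docker kill web-%i
ExecStartPre=-/usr/bin/docker rm web-%i
ExecStart=/usr/bin/docker run --name web-%i busybox /bin/sh -c "while true; do echo Hello from %i; sleep 1; done"
ExecStop=/usr/bin/docker stop web-%i

[X-Fleet]
# Never schedule two instances of this template on the same host.
Conflicts=web@*.service
# Only schedule on hosts whose fleet metadata contains region=eu.
MachineMetadata=region=eu
EOF

# Submit the template and start two instances, but only where fleetctl
# is available (i.e. on a CoreOS host).
if command -v fleetctl >/dev/null 2>&1; then
  (cd "$unit_dir" && fleetctl submit web@.service && fleetctl start web@1.service web@2.service)
fi
```

The other two strategies follow the same pattern: as far as I know, `Global=true` makes a unit global and `MachineOf=other.service` forces a unit onto the same host as another one.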

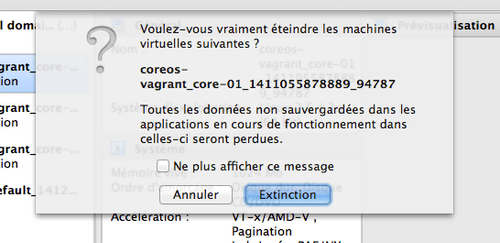

Basically, if you do nothing specific and ask fleet to run a unit, it runs on the CoreOS host you are on. Power off (or destroy) that host and the unit (a.k.a. your service) will be run on another host, without any explicit scheduling on your side.

Of course, you can find out where the units are.

Let's inspect our fleet cluster:

core@core-01 ~ $ fleetctl list-machines

MACHINE IP METADATA

1aaab8e5... 172.17.8.102 -

379ba5b7... 172.17.8.101 -

57d0cc63... 172.17.8.103 -

Here is my unit file; it is the example provided in the CoreOS docs:

core@core-01 ~ $ cat m.service

[Unit]

Description=MyApp

After=docker.service

Requires=docker.service

[Service]

TimeoutStartSec=0

ExecStartPre=-/usr/bin/docker kill busybox1

ExecStartPre=-/usr/bin/docker rm busybox1

ExecStartPre=/usr/bin/docker pull busybox

ExecStart=/usr/bin/docker run --name busybox1 busybox /bin/sh -c "while true; do echo Hello World; sleep 1; done"

ExecStop=/usr/bin/docker stop busybox1

Let's start it:

fleetctl start m.service

Let's see where it runs:

core@core-01 ~ $ fleetctl list-unit-files

UNIT HASH DSTATE STATE TARGET

m.service 391d247 launched launched 379ba5b7.../172.17.8.101

172.17.8.101 is the IP of the host I'm on.

Let's kill that host (power it off or destroy it), wait a bit, then:

core@core-02 ~ $ fleetctl list-unit-files

UNIT HASH DSTATE STATE TARGET

m.service 391d247 launched launched 1aaab8e5.../172.17.8.102

My service has migrated!

//www.youtube.com/embed/D0n8N98mpes

But not its data. For this, you can check out Flocker, which is dedicated to data migration in container environments.

Okay, my containers are moving, I can play with them (suddenly, I want to put CoreOS on dozens of Raspberry Pi ...), but what can I do with the host?

Automatic update

Short answer: nothing else.

Long answer: doing more is not the goal of CoreOS. CoreOS is for you to play with containers. The CoreOS team wants you to concentrate on your containers while they deal with the host. You don't have man pages or a package manager. You feel powerless, don't you?

Actually, there is exactly what you need, no more, no less, and updates are applied automatically and transparently. All the building blocks are there for that: you play with containers, CoreOS handles everything else. You just give it a few hints: reboot as soon as the update is applied, reboot all hosts one by one, or don't reboot. Remember that your containers are moved automatically by fleet (provided you use fleet).

OS updates are made by swapping between an active and a passive partition: the update is written to the passive one, which becomes the active one on the next reboot.
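The reboot hints mentioned above are usually given through cloud-config. The sketch below writes a minimal `user-data` file. The file location and the `region=eu` tag are only examples, and I'm assuming the `reboot-strategy` values described in the CoreOS cloud-config documentation.

```shell
# Write a minimal cloud-config to a scratch directory.
conf_dir="$(mktemp -d)"
cat > "$conf_dir/user-data" <<'EOF'
#cloud-config
coreos:
  update:
    # reboot:      reboot as soon as an update has been applied
    # etcd-lock:   reboot hosts one at a time, taking a lock in etcd
    # best-effort: etcd-lock if etcd is running, reboot otherwise
    # off:         never reboot automatically
    reboot-strategy: etcd-lock
  fleet:
    # Tags that fleet can use to pick hosts for a unit.
    metadata: region=eu
EOF
```

With `etcd-lock`, hosts should take turns rebooting, which gives fleet time to move units away from the host that goes down.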

The doubts

What if none of your CoreOS hosts have access to the Internet, as in a private cloud? Could they be updated? Could they even work?

As CoreOS schedules updates automatically, do you have to get rid of Chef / Puppet?

I also feel uncomfortable that a company owns my Linux distribution, especially when this company pushes the updates itself. What if the company fails? Or the repos are hacked? Even if updates are said to be signed, I don't like the idea of depending on a single entity for my updates. It reminds me of the questions and fears I had about RHN, which, with time, are no longer justified...

What does CoreOS, the company, provide? As a company, what can I expect from CoreOS? I'll dive into those questions in a future article.