Use Azure Blob Storage in your application

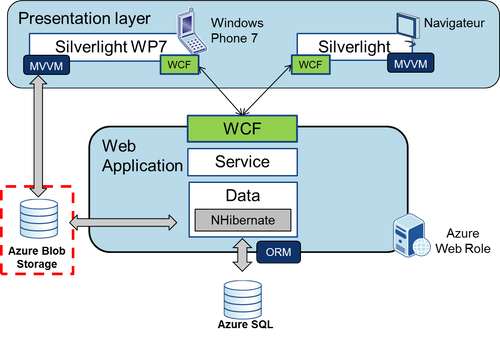

This article describes how to use Azure Blob Storage to add an "electronic vault" feature to your application. It follows the first post, which introduced Azure and described how to deploy an existing application to it. Let's recall the architecture of the application and see what changes when we connect it to Azure Blob Storage.

The application is already deployed to Azure: it is hosted by a Web Role and uses Azure SQL as its main data store. Azure Blob Storage will be added to the application to create an "electronic vault" for each user. Both the client and the server will be able to interact with the Blob Storage.

Electronic vault

The electronic vault allows the user to perform the following:

- Upload, download and remove files in the vault

- Generate files and store them in the electronic vault (such as transactions or account overviews)

At the same time, the vault should store metadata for each document, indicating the time of the upload and who initiated the upload (or modification).

Azure Blob Storage

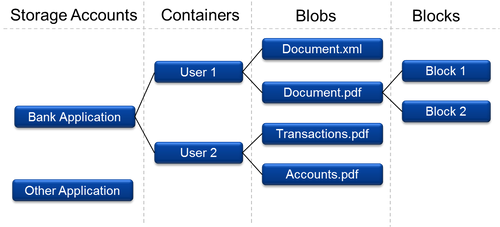

Before we describe exactly how the electronic vault can be implemented, let's take a look at the architecture and possible usage of Azure Blob Storage. Each Azure Storage account can have several containers. Each container can hold several blobs, and each blob can be composed of several blocks. The following diagram shows the structure of Azure Blob Storage as it can be used to create an electronic vault for each user.

Each user will own one container, which allows us to assign specific access rights per container. Note that there is no notion of folders inside a container, but a special naming convention for blob names works around this limitation.
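To make the naming convention more concrete, here is a minimal sketch that treats "/" inside blob names as a virtual folder delimiter. The class and method names (`VaultPaths`, `Combine`, `ListFolder`) are hypothetical helpers invented for this illustration, and the choice of "/" as delimiter is an assumption; the code works on plain strings and does not call the storage service.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class VaultPaths
{
    // Assumption: "/" is used as the virtual folder delimiter in blob names.
    public const string Delimiter = "/";

    // Builds a blob name such as "statements/2011/march.pdf" from path segments.
    public static string Combine(params string[] segments)
    {
        return string.Join(Delimiter, segments.Select(s => s.Trim('/')));
    }

    // Returns the blob names that sit under the given virtual folder.
    public static IEnumerable<string> ListFolder(IEnumerable<string> blobNames, string folder)
    {
        string prefix = folder.Trim('/') + Delimiter;
        return blobNames.Where(n => n.StartsWith(prefix, StringComparison.Ordinal));
    }
}
```

With this helper, `VaultPaths.Combine("statements", "2011", "march.pdf")` yields the blob name `statements/2011/march.pdf`, and filtering by the prefix `statements/` gives the content of that "folder".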

As the diagram shows, each file can be split into several blocks. This is especially useful when handling large files. When a file is split into blocks, the upload proceeds in steps: each block is uploaded separately under its own block ID, and at the end a commit containing the list of block IDs is sent to the server. If some of the block uploads fail, the client can ask the server which blocks it has already received and resend only the missing ones. Downloads can be split in a similar way by requesting byte ranges. This separation also allows several clients to upload blocks in parallel.
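The splitting step can be sketched as follows. This is only an illustration of how a client might cut a byte array into blocks and assign each one an ID; `BlockSplitter`, `MakeBlockId` and `Split` are hypothetical names, and the ID scheme (a zero-padded index, Base64-encoded) is an assumption chosen because block IDs are Base64 strings that must all have the same length within one blob. The actual upload and commit calls are left out.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class BlockSplitter
{
    // Block IDs must be Base64 strings of equal length within one blob,
    // so the block index is formatted with a fixed width before encoding.
    public static string MakeBlockId(int index)
    {
        return Convert.ToBase64String(Encoding.UTF8.GetBytes(index.ToString("D6")));
    }

    // Splits the data into consecutive blocks of at most blockSize bytes
    // and pairs each block with its ID, in upload order.
    public static List<KeyValuePair<string, byte[]>> Split(byte[] data, int blockSize)
    {
        if (blockSize <= 0) throw new ArgumentOutOfRangeException("blockSize");
        var blocks = new List<KeyValuePair<string, byte[]>>();
        for (int offset = 0, index = 0; offset < data.Length; offset += blockSize, index++)
        {
            int length = Math.Min(blockSize, data.Length - offset);
            var block = new byte[length];
            Array.Copy(data, offset, block, 0, length);
            blocks.Add(new KeyValuePair<string, byte[]>(MakeBlockId(index), block));
        }
        return blocks;
    }
}
```

Each resulting pair can be uploaded independently (and in parallel), and the ordered list of IDs is what the final commit would carry.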

Architecture of Azure Storage

All blobs are stored in a distributed file system in the Azure fabric. However, each blob is served by exactly one partition server. Each blob has a unique key composed of its container name and blob name. This key is used as the partition key, which assigns the blob to a partition server. Access to the partition servers is load-balanced, and all partition servers use a common distributed file system. Because each blob has its own partition key, access to each blob can in effect be load-balanced individually. In a blog post from May 2010, the Azure Storage Team stated that the target throughput for a single blob was up to 60 MB/s. Since the aim here is not to give a detailed explanation of the architecture, you can refer to the Azure Storage Team blog for more details.

Access to Blob Storage - APIs

We can interact with Azure Blob storage using two APIs:

- .NET API

- REST API

The .NET API is a set of classes that can be used on the server side to access the storage. The following snippet shows how to upload a file to a blob container.

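// Read the connection string from the configuration setting named "connStr"

var storageAccount = CloudStorageAccount.FromConfigurationSetting("connStr");

var blobClient = storageAccount.CreateCloudBlobClient();

// Get a reference to the container and create it on first use

var container = blobClient.GetContainerReference("container");

container.CreateIfNotExist();

// Upload the byte array as a blob named "fileName"

var blob = container.GetBlobReference("fileName");

blob.UploadByteArray(data);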

As you can see, the code is quite straightforward. Note that the access key is part of the connection string, which is read from the configuration setting whose name is passed to the FromConfigurationSetting method.

This API is not available to Silverlight clients. So if we want the Silverlight client to be able to access the storage, we have two options: expose the API calls through WCF services, or use the REST API.

The REST API is completely platform independent and uses HTTP as its transport protocol to execute actions and to upload and download data from the storage. How the HTTP request is created depends on the client platform. In C# we can use the following code:

public void UploadFile(Uri url){

var webRequest = (HttpWebRequest)WebRequestCreator.ClientHttp.Create(url);

webRequest.Method = "PUT";

webRequest.Headers["x-ms-meta-comment"] = "my comment";

webRequest.Headers["x-ms-meta-author"] = "current user";

webRequest.BeginGetRequestStream(new AsyncCallback(WriteData), webRequest);

}

private void WriteData(IAsyncResult result)

{

var webRequest = (HttpWebRequest)result.AsyncState;

using (var requestStream = webRequest.EndGetRequestStream(result))

{

//Write the binary data to the stream

}

//The request stream is closed above, before the response is requested

webRequest.BeginGetResponse(new AsyncCallback(UploadFinished), webRequest);

}

private void UploadFinished(IAsyncResult result)

{

if(result.IsCompleted){

//Check the response

}

}

Note that the file's metadata (comment, author) is added as headers to the HTTP request. For more details on the REST API, see the official MSDN reference page.

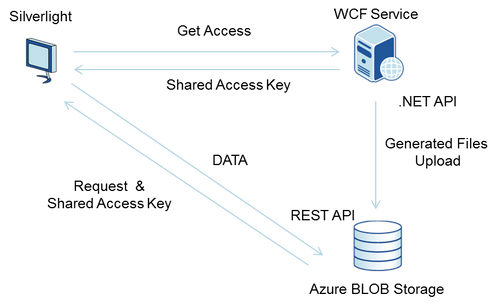

In our banking application, we use both APIs. To give the Silverlight client direct access to the storage, we will use the REST API. This also avoids passing all the data through WCF services and takes a significant data load off our servers (or Web Roles). When documents are generated (such as transaction overviews), they will be generated and inserted into the vault using the server-side API.

Security Issues

Access to Azure Storage is protected by a “storage access key” (composed of 88 ASCII characters). Every storage account is provided with two keys (simply called "Primary" and "Secondary") so that keys can be renewed with zero downtime: both keys grant access to the storage at the same time. When using the .NET API, the key is simply handed to the API, which uses it internally to authenticate the calls to Azure. When using the REST API, each request has to be signed with the key.

On the server side we can use the .NET API to access the storage, since the server has the key stored in its configuration files. However, if the Silverlight client wants to talk directly to Azure Storage, it needs the key to sign all REST requests, so we would have to give the key to the Silverlight application. That is a potential security problem: we cannot hand the access key to every Silverlight client. A Silverlight client is just a compiled XAP package, which could be reverse engineered to extract a hard-coded access key. So how can we allow the client application to access the Blob storage directly without giving it the key?

One option would be to build a WCF service (secured by SSL) from which Silverlight could obtain the access key after authenticating against the server. However, this way the key would still be handed to all clients, and an attacker who compromised a single client machine would gain unrestricted access to the whole storage account. The second option, which resolves this issue, is to use Shared Access Signatures.

Shared Access Signatures

A Shared Access Signature (SAS) is a temporary authentication token which grants access to a specific blob (or a whole container). When the client wants to access the Blob storage, it first contacts the server and asks for a Shared Access Signature. The server, knowing which blob or container is requested and having verified the identity of the client, generates the token. This token is then sent to the client over a secured channel (such as a WCF service secured by SSL). The client can then add this token to its upload or download request, which permits it to access the requested files. Of course an attacker could still obtain the Shared Access Signature, but the potential damage is much lower for two reasons:

- As said before, a Shared Access Signature is a temporary token

- The attacker would gain access to just one container or blob, not the access key which can be used to sign any request.

That being said, here is the diagram showing the communication between the client, server and the Blob storage.

Generating Shared Access Signature

A Shared Access Signature is a Message Authentication Code (MAC), a standard term in cryptography for a short piece of information that authenticates a message. It is generated using the standard HMAC (Hash-based MAC) algorithm. HMAC algorithms combine an existing hash function with a secret key to generate the MAC value. Azure Blob storage uses the HMACSHA256 function, which, as the name says, is built on the 256-bit SHA function and has the following definition (where "|" represents concatenation, "xor" is bitwise exclusive or, the key is padded to the hash block size, and ipad and opad are two fixed padding constants):

HMACSHA256(key, data) = sha256((key xor opad) | sha256((key xor ipad) | data))

The hash is applied twice in order to defeat attacks, such as length extension, that work against simpler constructions which hash just a concatenation of key and data. More information can be found on the Wikipedia page.
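To see the nested hashing at work, the following sketch computes HMAC-SHA256 by hand as specified in RFC 2104, where the key is padded to the 64-byte SHA-256 block size and XORed with the constants 0x36 (inner pad) and 0x5c (outer pad), and offers the framework's own `HMACSHA256` class for comparison. The class `HmacSketch` and its method names are made up for this illustration; in real code you would only ever call the built-in class.

```csharp
using System;
using System.Linq;
using System.Security.Cryptography;

public static class HmacSketch
{
    private const int BlockSize = 64; // SHA-256 block size in bytes

    // Computes HMAC-SHA256 by hand, following RFC 2104.
    public static byte[] ManualHmacSha256(byte[] key, byte[] data)
    {
        using (var sha = SHA256.Create())
        {
            // Keys longer than the block size are hashed first; shorter keys are zero-padded.
            byte[] k = key.Length > BlockSize ? sha.ComputeHash(key) : key;
            byte[] padded = new byte[BlockSize];
            Array.Copy(k, padded, k.Length);

            byte[] inner = padded.Select(b => (byte)(b ^ 0x36)).ToArray(); // key xor ipad
            byte[] outer = padded.Select(b => (byte)(b ^ 0x5c)).ToArray(); // key xor opad

            // First pass over the data, second pass over the first hash.
            byte[] innerHash = sha.ComputeHash(inner.Concat(data).ToArray());
            return sha.ComputeHash(outer.Concat(innerHash).ToArray());
        }
    }

    // Reference result from the framework's own implementation.
    public static byte[] BuiltInHmacSha256(byte[] key, byte[] data)
    {
        using (var hmac = new HMACSHA256(key))
        {
            return hmac.ComputeHash(data);
        }
    }
}
```

For any key and message, both methods should return the same 32 bytes, which is an easy way to convince yourself that the definition matches what the framework does.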

The key is simply the Blob storage access key, while the data is a combination of the following:

- Identification of resource which should be accessed (container or blob)

- Time stamp - specifying the expiration date

- Permissions which will be granted on the resource

The same data is added to the request, so when the storage service receives the request, it simply performs the same HMAC calculation to verify the Shared Access Signature. That is possible because the storage service also holds the access key.
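The sign-then-verify idea can be sketched in a few lines. Note the assumptions: the string-to-sign below (permissions, expiry and resource joined by newlines) is a simplified stand-in, since the real field list and order are defined by the Blob service REST reference; `SignatureSketch` and its methods are hypothetical names; and the key is treated as a Base64 string that is decoded before use.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

public static class SignatureSketch
{
    // Assumption: a simplified string-to-sign; the real field list and order
    // are defined by the Blob service REST reference.
    public static string BuildStringToSign(string permissions, DateTime expiryUtc, string resource)
    {
        string expiry = expiryUtc.ToString("yyyy-MM-dd'T'HH:mm:ss'Z'", CultureInfo.InvariantCulture);
        return permissions + "\n" + expiry + "\n" + resource;
    }

    // Signs the string with the Base64-encoded key and returns a Base64 signature.
    public static string Sign(string stringToSign, string base64Key)
    {
        using (var hmac = new HMACSHA256(Convert.FromBase64String(base64Key)))
        {
            return Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign)));
        }
    }

    // What the verifying side does: recompute the signature from the request
    // parameters and compare it with the one the client sent.
    public static bool Verify(string permissions, DateTime expiryUtc, string resource,
                              string signature, string base64Key, DateTime nowUtc)
    {
        if (nowUtc > expiryUtc) return false; // token expired
        string expected = Sign(BuildStringToSign(permissions, expiryUtc, resource), base64Key);
        return expected == signature;
    }
}
```

Because the permissions, expiry and resource are all part of the signed string, a client that tampers with any of them (for example, by extending the expiry time) ends up with a signature that no longer matches.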

When generating the signature on the server side, we can simply use the .NET API, which provides the required methods. The following snippet illustrates the generation of a Shared Access Signature that gives the user read, write, delete and list rights for the next 10 minutes.

var container = blobClient.GetContainerReference("container1");

var permissions = new BlobContainerPermissions();

permissions.PublicAccess = BlobContainerPublicAccessType.Off;

container.SetPermissions(permissions);

var expDate = DateTime.UtcNow + TimeSpan.FromMinutes(10);

var sas = container.GetSharedAccessSignature(new SharedAccessPolicy()

{

Permissions = SharedAccessPermissions.Read | SharedAccessPermissions.Write

| SharedAccessPermissions.Delete | SharedAccessPermissions.List,

SharedAccessExpiryTime = expDate

});
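Once the server has returned the signature, the client has to attach it as a query string to the blob URL it wants to read or write. The helper below is a minimal sketch of that step; `SasUrlBuilder` and `BuildBlobUri` are hypothetical names, and the code accepts the token with or without a leading "?" because that detail may differ between library versions.

```csharp
using System;

public static class SasUrlBuilder
{
    // Combines the container URI, a blob name and a SAS token into the
    // URL the client can use for its PUT or GET request.
    public static Uri BuildBlobUri(Uri containerUri, string blobName, string sasToken)
    {
        string baseUrl = containerUri.AbsoluteUri.TrimEnd('/');

        // Escape each path segment but keep "/" so virtual folders survive.
        string[] segments = blobName.Split('/');
        for (int i = 0; i < segments.Length; i++)
        {
            segments[i] = Uri.EscapeDataString(segments[i]);
        }

        // Accept the token with or without a leading "?".
        string query = sasToken.TrimStart('?');
        return new Uri(baseUrl + "/" + string.Join("/", segments) + "?" + query);
    }
}
```

The resulting URI is what a method such as UploadFile(Uri url) from the REST example would receive, so the client never needs to see the storage access key itself.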

Conclusion

As this second part shows, it is quite easy to add new functionality using Azure Blob storage, which allows your client to upload data directly to the storage without losing the security of the application.

We could achieve the same functionality by storing the files in SQL Server, so the question that comes to mind is what advantages Blob Storage has over SQL Server (or SQL Azure). Here are some points that might explain why to use Azure Blob Storage.

- Blob Storage offers built-in support for metadata on each file

- Blob Storage can split files into blocks and thus provides better support for handling large files.

- The architecture of Blob Storage allows access to each blob to be load-balanced and thus provides high access speed (See details here). However, because it depends on the network connection, this is difficult to compare with on-premise SQL Server or Azure SQL, and no metrics have been published by Microsoft so far.

When comparing Azure SQL with Azure Blob Storage:

- Blob storage will probably be much cheaper. Note that this depends on the specific case, because the pricing schemes are not the same. You can get more details on the official site.

- Blob Storage has a limit of 2000 GB, whereas a single Azure SQL database is limited to 100 GB

Of course the choice will depend on the type and scope of the application, but Blob Storage is definitely one of the options to consider when designing a new .NET application.