The Test Pyramid In Practice (1/5)

If you read this blog or our publications, you know how closely testing is tied to software quality and, if I may say so, to software success. I insist on this point because all too often our customers treat tests as an afterthought in development. You know the consequences: an astronomical number of design anomalies, pernicious bugs in production and, worse still, software which ossifies little by little.

This article is the first in a series, and will mostly address theory. In the following articles we will delve into practice, applied to Java and Spring.

This article originally appeared on our French Language Blog on 25/06/2018.

Foreword

Sometimes we decide to test, and we manage to convince the "upper echelons" of the benefits of testing and of the need to spend time (and therefore money) on it, but:

- "Unit tests won't work for us: our code is too complex/coupled/etc."

- "Integration testing is complicated to set up, so we may as well deploy the entire application."

- "The best thing would be to have tests that emulate what our users do! And it'll be easier to test."

And then we deploy the heavy artillery: end-to-end tests, usually Selenium-type graphical interface tests:

- "This’ll be great! We’ll validate the whole application in a few tests, and we’ll even be able to show the reports to the business and the testers if we put it in Cucumber or Serenity. They're gonna love us."

But, after a few weeks or months we begin to realize that this may not have been the best idea:

- The tests are slow: "4 hours for 150 tests",

- They fail intermittently: "Why is it red? Probably Selenium, restart it to check...",

- Even worse, standing up a complete testing environment with stable datasets is a headache at best and impossible at worst.

Then we invest even more, telling ourselves that we’re almost there and it’d be a shame to throw everything away now. And yes, things can be improved, but at what cost? And for how long? Overall, the "black box" testing strategy is neither the most effective nor the most cost-effective.

I can see more than one grin forming as you read this, but rest assured: you are not alone.

Testing Strategy

Before I tell you more about the Test Pyramid, let’s recall some criteria that are important to consider when thinking about a testing strategy. And, to clear up an all too common misunderstanding: in this article we’ll talk exclusively about automated tests.

Feedback

A test, whatever it is, has no other purpose than to give you feedback: "Is my program doing what it is supposed to do?" We can judge the quality of this feedback by three criteria:

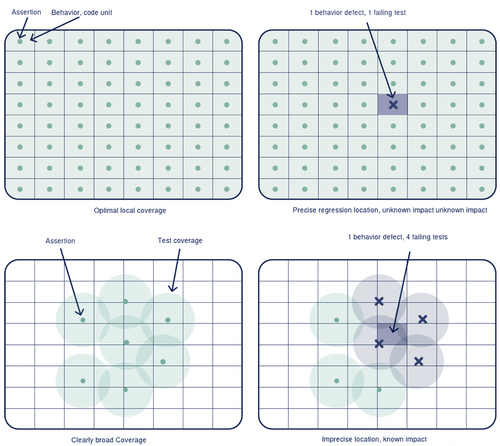

- The accuracy of feedback. If a test fails, can we determine exactly which piece of code does not work? How long does it take for a developer to identify the piece of code that fails the test? The more granular a test is (at the method level), the more accurate the feedback will be. On the other hand, how do you know if a database access or a JavaScript error causes an end-to-end test to fail?

Source : Culture Code, OCTO Technology

- The reliability of feedback. The repeatability of a test is paramount. Can we trust a test whose results vary from one execution to another, without any apparent change to the code, configuration or dependencies? Once again, end-to-end tests have an unfortunate tendency to fail for obscure and often uncontrollable reasons: network latency, a garbage collection pause that slows down the JVM, a misbehaving browser, a deactivated account, a modified database schema, etc. LinkedIn engineers concluded that unstable tests are worse than no tests after calculating that a build of 200 tests, each with a 1% probability of failing, has only a 13.4% chance of being stable.

"Stop calling your build flaky. Have you ever released to production when, say, your search functionality “sometimes works”?"

- The speed of feedback. Many of us had yet to be born when programs were written on punch cards, in that glorious but thankfully bygone era when it took hours or even days to find out whether a stack of cards "compiled", and you had to start over when it didn’t. It’s no longer acceptable to wait so long to know whether our code compiles (it’s nearly instantaneous in the IDE), and the same is true for tests: the sooner you know that a test fails, the sooner you can identify the problem, and the cheaper it will be to fix.

Source: Code Complete, 2nd edition, by Steve McConnell ^<a id="post-5-footnote-ref-2" href="#post-5-footnote-2">[2]</a>^

There’s even a virtuous cycle when the tests are fast: they’re easy to run^<a id="post-5-footnote-ref-3" href="#post-5-footnote-3">[3]</a>^ and build confidence in your code, prompting you to write even more.

A good testing strategy therefore aims to maximize the number of tests that meet these three criteria: accuracy, speed, and reliability.
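The LinkedIn figure quoted under reliability follows from simple arithmetic. If each of n tests fails spuriously with probability p, and if those failures are independent (an assumption: real flaky tests often fail together), the probability that a build comes out green is (1 - p)^n. Here is a minimal Java sketch of that calculation; the class and method names are hypothetical.

```java
/**
 * Hypothetical helper: probability that a build is green when each test
 * fails spuriously, and independently, with the same probability.
 */
final class FlakyBuildMath {

    private FlakyBuildMath() {
        // static utility, not meant to be instantiated
    }

    /** Returns (1 - p)^n, the chance that none of the n tests fails spuriously. */
    static double stableBuildProbability(int testCount, double failureProbability) {
        if (testCount < 0) {
            throw new IllegalArgumentException("testCount must be >= 0");
        }
        if (failureProbability < 0.0 || failureProbability > 1.0) {
            throw new IllegalArgumentException("failureProbability must be in [0, 1]");
        }
        return Math.pow(1.0 - failureProbability, testCount);
    }
}
```

With 200 tests and a 1% failure rate per test, `FlakyBuildMath.stableBuildProbability(200, 0.01)` returns roughly 0.134, the 13.4% quoted above: such a suite is red most of the time even when the code is fine.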

Ease of creation and maintenance cost

You’re unlikely to have an infinite budget, so your testing strategy will necessarily depend on it. You need to weigh the cost of each type of test against the value it provides; in other words, evaluate its ROI.

- Unit tests are usually very simple to implement, provided you don’t wait until the code is crippled by technical debt. Because they operate in isolation, their environment is relatively simple to set up with stubs or mocks. And because they sit very close to the code, they are naturally maintained as part of refactoring.

- Integration tests are fairly simple too (here we mean integration tests within an application, not between applications). They avoid the major constraints of end-to-end tests (i.e. the presentation layer and some dependencies). They are also fairly close to the code, which simplifies refactoring. However, they cover a broader spectrum of code and are therefore more likely to be affected by changes to the application code.

- End-to-end or GUI testing is more complex to implement because it requires a complete environment to be deployed. Dependencies and datasets form a puzzle, further complicated by instabilities due to environment reloads, changes made by other teams, etc. These complications make this type of test very fragile and introduce maintenance costs in the form of build-failure analysis and upkeep of the environment and data.
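To make the first point concrete, here is a minimal sketch of a unit test that isolates a class from its dependency with a hand-written stub. `PriceCalculator` and `DiscountRepository` are hypothetical names, and the checks are plain Java rather than a test framework so the example stays self-contained; in a real project this would more likely be a JUnit test, possibly using a mocking library instead of the hand-written stub.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

/** Hypothetical dependency; in production it might query a database. */
interface DiscountRepository {
    /** Discount rate for a customer, e.g. 0.10 for 10% off. */
    BigDecimal discountRateFor(String customerId);
}

/** Hypothetical class under test: business logic with a single dependency. */
final class PriceCalculator {
    private final DiscountRepository discounts;

    PriceCalculator(DiscountRepository discounts) {
        this.discounts = discounts;
    }

    /** Applies the customer's discount and rounds to two decimal places. */
    BigDecimal finalPrice(String customerId, BigDecimal basePrice) {
        BigDecimal rate = discounts.discountRateFor(customerId);
        BigDecimal discounted = basePrice.subtract(basePrice.multiply(rate));
        return discounted.setScale(2, RoundingMode.HALF_UP);
    }
}

/** Unit tests: the repository is replaced by a stub, so no database is involved. */
final class PriceCalculatorUnitTest {

    // Stub: 10% for "alice", no discount for anyone else
    private static final DiscountRepository STUB = customerId ->
            "alice".equals(customerId) ? new BigDecimal("0.10") : BigDecimal.ZERO;

    static void appliesCustomerDiscount() {
        BigDecimal price = new PriceCalculator(STUB).finalPrice("alice", new BigDecimal("50.00"));
        // compareTo ignores scale, so 45.00 and 45.0000 compare as equal
        check(price.compareTo(new BigDecimal("45.00")) == 0, "expected 45.00 but got " + price);
    }

    static void noDiscountForUnknownCustomer() {
        BigDecimal price = new PriceCalculator(STUB).finalPrice("bob", new BigDecimal("50.00"));
        check(price.compareTo(new BigDecimal("50.00")) == 0, "expected 50.00 but got " + price);
    }

    static void runAll() {
        appliesCustomerDiscount();
        noDiscountForUnknownCustomer();
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

Because the stub replaces the repository, these tests need no database, run in milliseconds, and a failure can only point at `finalPrice`: the fast, accurate and reliable feedback described earlier.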

The following table summarizes the main criteria for choosing the type of test to be used:

From this perspective, we might say "Great! I only have to do unit tests", but other types of tests exist for a good reason: unit tests can’t validate everything.

The Testing Pyramid

We now come to the famous Testing Pyramid, first described by Mike Cohn in his book Succeeding with Agile, which helps immensely when defining a testing strategy.

- We invest heavily in unit tests, which provide a solid foundation for the pyramid by giving us fast and accurate feedback. Paired with continuous integration, unit tests provide a safeguard against regressions, which is essential if we want to keep control of our product in the medium to long term.

- At the top of the pyramid, we keep end-to-end testing to a minimum: for example, validating the component’s integration into the overall system, any custom-built graphical components, and possibly a few smoke tests used as acceptance tests.

- And in the middle of the pyramid, integration tests allow us to validate the component (internal integration) and its boundaries (external integration). Here again, the principle is to favor internal component tests, which are isolated from the rest of the system, over external tests, which are more complex to implement.
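As an illustration of an internal component test, here is a minimal plain-Java sketch in which a hypothetical `OrderService` is exercised together with its storage, while only the external boundary (a hypothetical shipping API owned by another application) is replaced by a recording fake. The in-memory repository is an assumption standing in for the real persistence layer; in a Spring project the same idea might instead be a test that starts the application context against an embedded database.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical domain object. */
record Order(String id, String product, int quantity) {}

/** Internal port: persistence, part of the component under test. */
interface OrderRepository {
    void save(Order order);
    Optional<Order> findById(String id);
}

/** External boundary: an API owned by another application (hypothetical). */
interface ShippingClient {
    /** Returns a tracking number for the shipped order. */
    String requestShipment(String orderId);
}

/** In-memory storage standing in for the real persistence layer (assumption). */
final class InMemoryOrderRepository implements OrderRepository {
    private final Map<String, Order> store = new HashMap<>();

    @Override
    public void save(Order order) {
        store.put(order.id(), order);
    }

    @Override
    public Optional<Order> findById(String id) {
        return Optional.ofNullable(store.get(id));
    }
}

/** The component's entry point: validates, persists, then calls the external system. */
final class OrderService {
    private final OrderRepository repository;
    private final ShippingClient shipping;

    OrderService(OrderRepository repository, ShippingClient shipping) {
        this.repository = repository;
        this.shipping = shipping;
    }

    String placeOrder(String id, String product, int quantity) {
        if (quantity <= 0) {
            throw new IllegalArgumentException("quantity must be positive");
        }
        repository.save(new Order(id, product, quantity));
        return shipping.requestShipment(id);
    }
}

/** Fake for the external boundary: records calls instead of going over the network. */
final class RecordingShippingClient implements ShippingClient {
    final List<String> shippedOrderIds = new ArrayList<>();

    @Override
    public String requestShipment(String orderId) {
        shippedOrderIds.add(orderId);
        return "TRACK-" + orderId;
    }
}

/** Component test: real service and storage wired together, external API faked. */
final class OrderComponentTest {

    static void placingAnOrderStoresItAndRequestsShipment() {
        OrderRepository repository = new InMemoryOrderRepository();
        RecordingShippingClient shipping = new RecordingShippingClient();
        OrderService service = new OrderService(repository, shipping);

        String tracking = service.placeOrder("42", "book", 2);

        check("TRACK-42".equals(tracking), "tracking number returned");
        check(repository.findById("42").isPresent(), "order persisted");
        check(shipping.shippedOrderIds.equals(List.of("42")), "exactly one shipment requested");
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

Only the dependency outside the component is faked; everything inside it runs for real, which is what distinguishes this kind of test from the unit test sketched earlier while keeping it far cheaper than an end-to-end test.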

This wraps up our discussion of the theory. In the next article we’ll look in more depth at the base of the pyramid, unit testing, and put it into practice on a Java / Spring project.